The Collapse of LangChain? Decline in Agent Frameworks Signals a Shift to Leaner LLM Architectures

The developer community is quietly abandoning ship. According to the LLM Development Landscape 2.0 report from Ant Open Source, LangChain, LlamaIndex, and AutoGen now rank among the “steepest declining” projects by community activity over the past six months. The diagnosis is blunt: “reduced community investment from once dominant projects.” Meanwhile, performance-optimized inference engines like vLLM and SGLang continue their upward climb.

This isn’t just another framework falling out of fashion. It signals a deeper recalibration in how engineers approach LLM application architecture, one that prioritizes direct control, predictable performance, and lean abstractions over the promise of magical frameworks.

The Abstraction Hangover

The backlash against LangChain isn’t new, but it has reached a tipping point. Developer forums are filled with engineers recounting their “LangChain horror stories”: tales of fighting opaque abstractions, untangling impenetrable stack traces, and ultimately ripping out the framework to call APIs directly. The result reported across dozens of threads is consistent: codebases cut in half and debugging that is tractable again.

The technical critiques are specific and damning. LangChain’s “pipe” operator drew immediate skepticism from developers who saw it as reinventing Python syntax for no clear benefit. The framework’s tendency to wrap simple string operations (things easily handled with f-strings) in layers of abstraction made code harder to read and reason about. The documentation’s treatment of security and performance, both crucial for production deployments, was described as “vague” at best.
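To make the f-string point concrete, here is a minimal sketch of prompt assembly in plain Python. The function name `build_prompt` and the prompt wording are assumptions made for illustration; they do not come from any framework or from the threads quoted above.

```python
def build_prompt(question: str, context_chunks: list[str]) -> str:
    """Assemble a retrieval-style prompt with an f-string; no template classes."""
    # Render each retrieved chunk as one bullet line.
    context = "\n".join(f"- {chunk}" for chunk in context_chunks)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
    )


if __name__ == "__main__":
    print(build_prompt("What is vLLM?", ["vLLM is an inference engine."]))
```

Everything the prompt contains is visible in one function, so a bad prompt can be debugged by printing a string rather than stepping through template objects.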

The Rise of the Lean Stack

What’s replacing the monolithic frameworks? A composable stack of specialized tools that each do one thing well. At the inference layer, vLLM has emerged as the performance leader through its PagedAttention mechanism, which manages GPU memory more efficiently than standard approaches. For context management and tool use, developers are gravitating toward lighter-weight alternatives like PydanticAI, which provides type checking and structured output without the bloat.
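The memory-management idea behind PagedAttention can be illustrated with a toy allocator. This is a sketch of the general block-table technique, not vLLM’s implementation: the class `PagedKVCache`, its method names, and the block size used in the example are all assumptions. What it shows is that KV-cache memory is handed out in fixed-size blocks on demand, instead of being reserved up front for the longest possible sequence.

```python
class PagedKVCache:
    """Toy allocator mapping each sequence's logical blocks to physical block ids."""

    def __init__(self, num_blocks: int, block_size: int) -> None:
        self.block_size = block_size
        self.free_blocks = list(range(num_blocks))  # physical blocks not in use
        self.block_tables: dict[str, list[int]] = {}  # sequence id -> physical blocks
        self.lengths: dict[str, int] = {}  # sequence id -> tokens stored so far

    def append_token(self, seq_id: str) -> None:
        """Reserve room for one more token, allocating a block only when needed."""
        length = self.lengths.get(seq_id, 0)
        table = self.block_tables.setdefault(seq_id, [])
        if length == len(table) * self.block_size:  # every allocated block is full
            if not self.free_blocks:
                raise MemoryError("no free KV-cache blocks")
            table.append(self.free_blocks.pop())
        self.lengths[seq_id] = length + 1

    def free(self, seq_id: str) -> None:
        """Return a finished sequence's blocks to the shared pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.lengths.pop(seq_id, None)
```

With a block size of 16, a 17-token sequence occupies two blocks rather than a full maximum-length reservation, and those blocks go back to the pool as soon as the sequence finishes.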

The shift reflects a maturing understanding of LLM application patterns. Early frameworks promised to abstract away the complexity of working with language models, but they ended up abstracting away control and predictability. As one developer put it: “I don’t need frameworks to invent new operators. Just stick with pythonic code.”

The Data Tells the Story

The numbers paint a stark picture of this architectural pivot:

- Community decline: LangChain, LlamaIndex, and AutoGen show the steepest drop in community activity among major LLM projects

- Enterprise readiness gap: 62% of enterprises exploring AI agents lack a clear starting point, while 44% lack robust systems to move data effectively for AI

- Production reality vs. hype: 51% of respondents report using agents in production, but 69% of AI projects never make it to live operational use

- Performance metrics: vLLM’s throughput advantage ranges from 1.8x to 2.7x over alternatives, with latency improvements of 2x to 5x

The market is also speaking clearly. The AI agents market is projected to grow at a 45% CAGR over the next five years, but this growth is increasingly captured by lean, focused tools rather than monolithic frameworks. BCG reports that a leading consumer packaged goods company used intelligent agents to create blog posts, reducing costs by 95% and improving speed by 50x. These aren’t gains achieved through abstraction layers; they’re the result of direct, optimized implementations.

When Frameworks Still Make Sense (But Probably Not the Ones You Think)

To be fair, the “collapse” narrative has nuance. Framework maintainers themselves acknowledge the scope problem. A LlamaIndex maintainer admitted that “the breadth and scope of a lot of projects, including LlamaIndex, is too wide” and expressed hope to “bring more focus in the new year.” This honesty is refreshing but also revealing: it suggests that the kitchen-sink approach has failed.

The question isn’t whether abstraction has value, but what kind and how much. PydanticAI has gained traction because it provides specific value, type checking and structured output, without trying to own the entire stack. Haystack is favored for RAG tasks because it focuses on that specific problem rather than aspiring to be everything to everyone.
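As a rough illustration of what typed, validated model output buys you, here is a standard-library sketch. It does not use PydanticAI or reproduce its API; the `TicketTriage` schema and the `parse_triage` helper are hypothetical names invented for this example. The idea is only that a model reply is parsed and type-checked at the boundary, so malformed output fails loudly in one place.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class TicketTriage:
    """Hypothetical schema the model is asked to fill in."""
    category: str
    priority: int


def parse_triage(raw: str) -> TicketTriage:
    """Parse a model reply as JSON and check field types before using it."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on non-JSON text
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    category = data.get("category")
    priority = data.get("priority")
    if not isinstance(category, str):
        raise ValueError("category must be a string")
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(priority, bool) or not isinstance(priority, int):
        raise ValueError("priority must be an integer")
    return TicketTriage(category=category, priority=priority)
```

A focused library does this with far less boilerplate, which is the narrow, specific value the paragraph above describes.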

The Architectural Implications

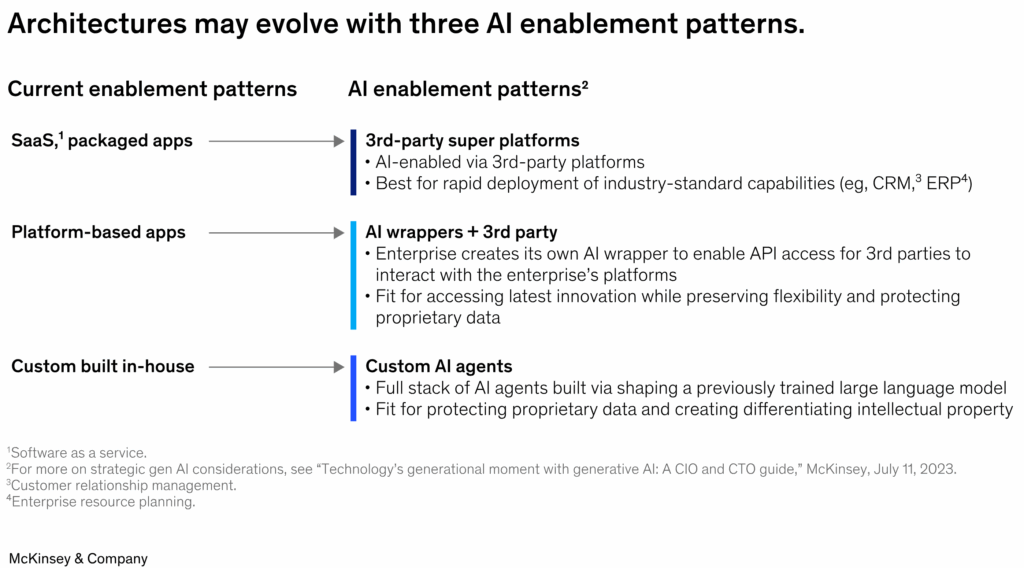

This shift has profound implications for LLM architecture design. McKinsey’s analysis of AI enablement patterns suggests architectures are evolving toward three distinct patterns: API-first direct integration, optimized inference layers, and lightweight orchestration. The middle layer, heavyweight frameworks, is being squeezed out.

The decline of LangChain and similar frameworks isn’t a failure of the projects themselves; it’s a failure of the abstraction-heavy approach they represent. As one developer succinctly put it: “Every time I see a tutorial using LangChain now I just skip it.” This isn’t laziness; it’s learned wisdom.

The data supports a leaner approach:

- Direct API integration reduces codebase size and debugging complexity

- Performance-optimized inference (vLLM, TensorRT-LLM) delivers the gains cited above: roughly 1.8x to 2.7x higher throughput and 2x to 5x lower latency in vLLM’s case

- Focused tools (PydanticAI, Haystack) provide specific value without framework bloat

- State-of-the-art serving architectures (vLLM Router with prefill/decode disaggregation) offer 25-100% better throughput than traditional load balancing

The future belongs to architectures that are explicit, composable, and performance-conscious. This means:

1. Direct integration with LLM APIs for most use cases

2. Performance-optimized inference engines for production deployments

3. Lightweight, focused libraries for specific needs (type checking, structured output, RAG)

4. Proper infrastructure for state management and caching
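Items 1 and 4 in the list above can be combined in a few lines. The following is a minimal sketch under stated assumptions: `CachedClient`, `cache_key`, and the `send` callable are hypothetical names, and `send` stands in for whatever function actually performs the HTTP call to your model endpoint. The cache is an in-memory dict for illustration; a production setup would more likely use an external store with expiry.

```python
import hashlib
import json
from typing import Callable

Messages = list[dict[str, str]]


def cache_key(model: str, messages: Messages) -> str:
    """Derive a stable key from the request content."""
    payload = json.dumps({"model": model, "messages": messages}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class CachedClient:
    """Wrap a direct API call with an explicit, inspectable response cache."""

    def __init__(self, send: Callable[[str, Messages], str]) -> None:
        self._send = send  # assumed: performs the real request and returns reply text
        self._cache: dict[str, str] = {}

    def complete(self, model: str, messages: Messages) -> str:
        key = cache_key(model, messages)
        if key not in self._cache:  # only call the API on a cache miss
            self._cache[key] = self._send(model, messages)
        return self._cache[key]
```

Because the transport is injected, the same wrapper works against a hosted API or a self-hosted inference server, and it can be exercised in tests with a stub function instead of a network call. Note that caching identical requests only makes sense when repeated prompts should return the same answer.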

The “collapse” of LangChain isn’t a disaster; it’s a necessary correction. The LLM ecosystem is maturing, and developers are voting with their feet for leaner, more direct approaches. For those still building on heavyweight frameworks, the question isn’t if they’ll migrate, but when. The gaps in performance, developer experience, and operational complexity will only become more pronounced.

The frameworks that survive will be those that embrace this reality: focus, performance, and interoperability over abstraction and lock-in. Everything else is heading for the same fate as the JavaScript frameworks that dominated 2015 and are now footnotes in web development history.
