AI Is About to Make Formal Verification Mandatory, Ready or Not

For decades, formal verification has been the software engineering equivalent of a space program: technically awe-inspiring, but so expensive and specialized that only a handful of organizations could justify the investment. The seL4 microkernel, a gold standard in formally verified systems, required 20 person-years and 200,000 lines of Isabelle proof code to verify 8,700 lines of C. That’s roughly a 23:1 ratio of proof to implementation, and the work demands PhD-level expertise held by maybe a few hundred people worldwide. The economics were brutally simple: for most systems, the expected cost of bugs remained lower than the expected cost of eliminating them.

That calculus is about to shatter. Large language models are rapidly mastering proof assistants like Rocq, Isabelle, Lean, and F*, and the implications reach far beyond academia. As Martin Kleppmann argues in his recent analysis, AI is poised to drag formal verification from the fringes into the mainstream, not as a luxury, but as a necessity for anyone using AI-generated code.

The Cost Equation Just Flipped

The historical bottleneck wasn’t the theory. Tools like TLA+, Rocq, and Isabelle have been mature for years. The problem was labor. Writing proofs requires arcane knowledge of proof systems and an obsessive attention to edge cases that most developers simply don’t have time for. As Hillel Wayne famously noted, “TLA+ is one of the more popular formal specification languages and you can probably fit every TLA+ expert in the world in a large schoolbus.”

But LLMs don’t get bored, and deadline pressure doesn’t make them skip edge cases. They can now generate proof scripts with increasing reliability. Reports so far describe these models as getting “pretty good” at writing proofs in various languages. Human specialists still guide the process, but that guidance requirement is shrinking fast. If proof generation becomes fully automated, the 20 person-year price tag for seL4-style verification could collapse to weeks or even days.

This isn’t theoretical. Amazon’s Bedrock team is already using formal verification methods borrowed from aerospace and defense to mathematically prove model behavior under defined conditions. Their neuro-symbolic AI approach combines neural pattern recognition with symbolic logic, achieving up to 99% verification accuracy in detecting hallucinations and ensuring outputs are grounded in verified facts.

Why AI-Generated Code Demands Formal Verification

The rise of AI coding assistants creates a paradox: the cheaper code becomes to generate, the more expensive it becomes to trust. Human review doesn’t scale when you’re producing thousands of lines per hour. As Ben Congdon points out in his analysis, the traditional response, more testing, misses the point. Unit tests are “squarely in-distribution” for LLMs, meaning the models are good at generating tests for scenarios they’ve seen. But tests, however many an LLM writes, can’t guarantee exhaustiveness.

Formal verification offers something tests cannot: mathematical proof that code satisfies its specification for all inputs, including the weird edge cases no human thought to test. When an LLM generates both the implementation and the proof, and a trusted checker accepts that proof, you get a level of confidence that handcrafted code with artisanal bugs can never match.
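To make the gap between testing and exhaustive checking concrete, here is a minimal Python sketch. It is only an analogy: enumerating every input of a small finite domain stands in for a proof over all inputs, and the `abs8` function (an absolute value with simulated 8-bit wraparound) is a hypothetical example, not something taken from the sources cited here.

```python
def abs8(x: int) -> int:
    """Absolute value with simulated 8-bit two's-complement wraparound."""
    result = -x if x < 0 else x
    # Wrap into the signed 8-bit range [-128, 127], as fixed-width hardware would.
    return (result + 128) % 256 - 128


def passes_unit_tests() -> bool:
    """A plausible hand-written test suite that covers only typical values."""
    cases = {0: 0, 5: 5, -5: 5, 127: 127, -127: 127}
    return all(abs8(x) == expected for x, expected in cases.items())


def counterexamples() -> list[int]:
    """Check the specification `abs8(x) >= 0` against every 8-bit input."""
    return [x for x in range(-128, 128) if abs8(x) < 0]


if passes_unit_tests():
    print("unit tests pass")
print("inputs violating the spec:", counterexamples())
```

The unit tests pass, yet the exhaustive check still finds the one input (-128) whose negation does not fit in 8 bits. A proof assistant gives the same kind of all-inputs guarantee without requiring the domain to be small enough to enumerate.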

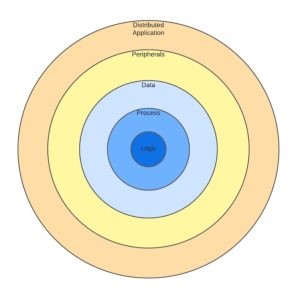

The architecture becomes elegantly simple:

1. Specify what the code should do in a high-level, declarative way

2. Let the AI generate both the implementation and the proof

3. Run the proof through a verified proof checker

4. If it passes, deploy without ever reading the machine-generated code

The proof checker acts as an unforgeable seal. It doesn’t matter if the LLM hallucinates nonsense: the checker rejects invalid proofs. This is the crucial difference between trusting AI output and trusting verified AI output.
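The generate-then-check pattern can be sketched in a few lines of Python. This is an illustration under loud assumptions: integer factorization stands in for "implementation plus proof", and `check_factorization`, `first_verified`, and both provers are hypothetical names invented for this example. The point is only that the checker is small and does not care how an answer was produced.

```python
from math import prod
from typing import Callable, Iterable, List, Optional

Prover = Callable[[int], List[int]]


def check_factorization(n: int, factors: List[int]) -> bool:
    """Trusted checker: verifies only that the factors are > 1 and multiply to n."""
    return len(factors) > 0 and all(f > 1 for f in factors) and prod(factors) == n


def first_verified(n: int, provers: Iterable[Prover]) -> Optional[List[int]]:
    """Try untrusted provers in turn; return the first answer the checker accepts."""
    for prover in provers:
        candidate = prover(n)
        if check_factorization(n, candidate):
            return candidate
    return None  # nothing verified, so nothing ships


def hallucinating_prover(n: int) -> List[int]:
    """Stands in for a model that answers confidently and wrongly."""
    return [3, 7]


def honest_prover(n: int) -> List[int]:
    """Trial division; slow but correct."""
    factors, d = [], 2
    while d * d <= n:
        while n % d == 0:
            factors.append(d)
            n //= d
        d += 1
    if n > 1:
        factors.append(n)
    return factors


print(first_verified(91, [hallucinating_prover, honest_prover]))
```

The wrong answer is discarded without anyone having to notice it was wrong, and if every prover fails the pipeline returns `None` rather than an unverified result. Note that this checker accepts whatever its specification allows (a single factor equal to `n` would pass), which is why the specification deserves as much scrutiny as the checker.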

The Neuro-Symbolic Bridge

The most promising path forward isn’t raw LLM power, but neuro-symbolic AI. As Amazon’s implementation demonstrates, combining neural networks with symbolic reasoning creates systems that don’t just appear correct; they can demonstrate correctness through verifiable logic.

In their warehouse automation systems, neuro-symbolic AI combines DeepFleet foundation models with logical rules and machine learning, improving robot-fleet travel efficiency by 10% while providing provable guarantees about safety constraints. For customer-facing applications like Rufus, their shopping assistant, the approach enables step-by-step reasoning that’s auditable and explainable.

This isn’t just about code verification. It’s about creating AI systems that can show their work. When analyzing financial data, a neuro-symbolic system might describe trends accurately and provide the mathematical proof for its calculations, eliminating the “trust gap” that currently prevents AI deployment in high-stakes decisions.

The Real Bottleneck: Specification

If verification becomes cheap, the hard part shifts from “how do we prove this?” to “what exactly are we proving?” Writing correct specifications requires deep domain expertise and careful thought. A proof that your code matches a flawed specification is worse than useless: it’s dangerous, because it lends false confidence to code that does the wrong thing.
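A small Python sketch shows how a flawed specification can be satisfied by useless code. The sorting example and the names `weak_spec`, `full_spec`, and `bogus_sort` are assumptions made for illustration; runtime predicates stand in for formal specifications here.

```python
from collections import Counter
from typing import List


def is_sorted(xs: List[int]) -> bool:
    """True when every element is <= its successor."""
    return all(a <= b for a, b in zip(xs, xs[1:]))


def weak_spec(inp: List[int], out: List[int]) -> bool:
    """Flawed specification: only requires that the output is ordered."""
    return is_sorted(out)


def full_spec(inp: List[int], out: List[int]) -> bool:
    """Stronger specification: ordered AND a permutation of the input."""
    return is_sorted(out) and Counter(out) == Counter(inp)


def bogus_sort(xs: List[int]) -> List[int]:
    """Throws the data away; an empty list is trivially ordered."""
    return []


data = [3, 1, 2]
print("bogus_sort meets weak spec:", weak_spec(data, bogus_sort(data)))
print("bogus_sort meets full spec:", full_spec(data, bogus_sort(data)))
print("sorted() meets full spec:", full_spec(data, sorted(data)))
```

An implementation that discards its input satisfies the weak specification perfectly, and a proof of that fact would be entirely valid. Only the stronger specification, which someone had to think to write, rules it out.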

This is where AI assistance becomes a force multiplier. LLMs can help translate between formal specifications and natural language, making specifications more accessible to domain experts who aren’t proof system specialists. The risk of translation errors exists, but it’s manageable compared to the alternative of untrusted AI code in production.

The workflow evolves naturally:

– Product owners and domain experts define high-level system behavior in English

– AI helps spin out formal TLA+ models at various specificity levels

– These models identify which components are “load-bearing” for system correctness

– Critical components get full formal verification in Rocq or similar

– Less critical components still get AI-assisted auditing against their TLA+ specs
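To give a feel for what a model-level check buys you, here is a rough Python sketch of explicit-state exploration, the general technique behind model checkers such as TLC for TLA+. It is not TLA+ syntax, and the two-process lock protocol, the state encoding, and every function name are hypothetical. The search visits every reachable state and reports a shortest trace to any state that breaks the invariant.

```python
from collections import deque
from typing import Callable, Iterable, List, Optional, Tuple

State = Tuple[str, str, bool]  # (pc of process 0, pc of process 1, lock held?)


def racy_steps(state: State) -> Iterable[State]:
    """Non-atomic lock: a process checks the lock, then sets it in a later step."""
    *pcs, lock = state
    for i in (0, 1):
        nxt = list(pcs)
        if pcs[i] == "idle" and not lock:
            nxt[i] = "checked"
            yield (nxt[0], nxt[1], lock)
        elif pcs[i] == "checked":
            nxt[i] = "critical"
            yield (nxt[0], nxt[1], True)
        elif pcs[i] == "critical":
            nxt[i] = "idle"
            yield (nxt[0], nxt[1], False)


def atomic_steps(state: State) -> Iterable[State]:
    """Atomic test-and-set: checking and taking the lock is one indivisible step."""
    *pcs, lock = state
    for i in (0, 1):
        nxt = list(pcs)
        if pcs[i] == "idle" and not lock:
            nxt[i] = "critical"
            yield (nxt[0], nxt[1], True)
        elif pcs[i] == "critical":
            nxt[i] = "idle"
            yield (nxt[0], nxt[1], False)


def mutual_exclusion(state: State) -> bool:
    """Invariant: the two processes are never both in the critical section."""
    return not (state[0] == "critical" and state[1] == "critical")


def find_violation(
    init: State,
    steps: Callable[[State], Iterable[State]],
    invariant: Callable[[State], bool],
) -> Optional[List[State]]:
    """Breadth-first search over reachable states; returns a shortest bad trace."""
    parent = {init: None}
    queue = deque([init])
    while queue:
        state = queue.popleft()
        if not invariant(state):
            trace = []
            while state is not None:
                trace.append(state)
                state = parent[state]
            return trace[::-1]
        for nxt in steps(state):
            if nxt not in parent:
                parent[nxt] = state
                queue.append(nxt)
    return None  # invariant holds in every reachable state


INIT: State = ("idle", "idle", False)
print("racy design:", find_violation(INIT, racy_steps, mutual_exclusion))
print("atomic design:", find_violation(INIT, atomic_steps, mutual_exclusion))
```

The racy design yields a concrete interleaving in which both processes pass the check before either sets the lock, while the atomic design has no reachable violation. A trace like this points at a load-bearing component: the lock acquisition is where full verification effort would pay off.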

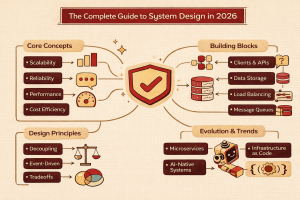

Enterprise Architecture Implications

For technology leaders, this shift demands immediate attention. The AWS GenAIOps framework provides a blueprint for how organizations should adapt their DevOps practices. Traditional CI/CD pipelines assume deterministic outputs; AI-generated code requires new quality gates focused on correctness, safety, and statistical validation.

The three-pillar model of verifiable AI (data provenance, model integrity, and output accountability) becomes non-negotiable. As one CIO article emphasizes, without continuous verification, even accurate models lose relevance fast. The solution involves formal verification methods borrowed from aerospace and defense, mathematically proving model behavior under defined conditions.

This means rethinking procurement. When evaluating AI vendors, the checklist must extend beyond cost and performance to include:

– Evidence of model traceability

– Documentation on training data lineage

– Methodology for ongoing monitoring

– Integration with formal verification pipelines

The Road Ahead: From Exploration to Reinvention

Organizations will move through three stages:

1. Exploration: Small teams experiment with AI-assisted verification for isolated components

2. Production: Formal verification becomes standard for AI-generated code in critical paths

3. Reinvention: The entire software lifecycle reorients around specification-first development

At each stage, the architecture must evolve. The baseline multi-account DevOps setup needs extensions for:

– Versioned prompt catalogs and guardrail configurations

– Automated evaluation pipelines that run hundreds of tests in seconds

– Centralized AI gateways that standardize access and enable load balancing across models

– Comprehensive observability that tracks not just metrics, but belief stability and uncertainty coherence

The Cognitive Architecture Perspective

The most forward-thinking research suggests we’re not just automating proofs; we’re building systems that reason about their own correctness. The QICA (Quantum-Inspired Cognitive Architecture) framework from Artificial Brain Labs proposes evaluating intelligence not by output accuracy, but by cognitive robustness: belief persistence, uncertainty coherence, contradiction detection, memory integration, and stability over time.

This aligns perfectly with formal verification’s goals. A system that can detect its own contradictions and maintain stable beliefs under uncertainty is precisely what we need when AI generates code at scale. The probabilistic and priority-gated neurons (PPGNs) in QICA emit beliefs rather than values, creating a natural foundation for verifiable AI systems.

Practical Steps for Engineering Teams

If you’re building systems today, here’s what to do:

- Start small but strategic: Identify your most critical component boundaries, the places where bugs would cause catastrophic failure. Apply formal verification to these first.

- Invest in specification skills: Train your team in TLA+ or similar formal specification languages. As Martin Kleppmann suggests, undergraduate CS programs should allocate curriculum to formal verification; your internal training should too.

- Build verification into your pipeline: Don’t treat proofs as a separate phase. Integrate automated proof checking into your CI/CD pipeline, just like unit tests.

- Leverage AI assistance: Use LLMs to generate proof scripts, but always verify through a trusted proof checker. The combination of AI speed and formal rigor is unbeatable.

- Monitor belief stability: For AI-generated components, track not just code coverage, but the stability and coherence of the system’s “beliefs” about correctness over time.
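As one way to wire proof checking into a pipeline, the following Python sketch wraps a proof-checker command as a pass/fail gate. The function name `proofs_check` and the timeout value are assumptions made for this example; the command itself is whatever your toolchain uses to check proofs (for instance, `lake build` in a Lean 4 project).

```python
import subprocess
import sys
from typing import Sequence


def proofs_check(command: Sequence[str], timeout_s: float = 600.0) -> bool:
    """Run a proof-checker command; True only if it exits with status 0 in time."""
    try:
        result = subprocess.run(
            command, capture_output=True, text=True, timeout=timeout_s
        )
    except (subprocess.TimeoutExpired, OSError):
        # A checker that hangs or cannot be started counts as a failed gate.
        return False
    if result.returncode != 0:
        # Surface the checker's own diagnostics in the CI log.
        sys.stderr.write(result.stdout + result.stderr)
    return result.returncode == 0


def gate_exit_code(command: Sequence[str]) -> int:
    """Map the check result to a process exit code a CI runner can act on."""
    return 0 if proofs_check(command) else 1
```

A CI step could then call `sys.exit(gate_exit_code(["lake", "build"]))`, so a rejected proof blocks the merge the same way a failing unit test would. Treating timeouts and missing binaries as failures is a deliberate design choice here: the gate should never pass just because the checker did not run.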

The Inevitable Mandate

The convergence is clear: AI makes code generation cheap, which makes skipping verification expensive. Regulatory pressure is mounting: the EU AI Act, the NIST AI Risk Management Framework, and ISO/IEC 42001 all place accountability on enterprises, not vendors. A 2025 transparency index found leading AI developers scored just 37 out of 100 on disclosure metrics.

Formal verification is no longer the academic curiosity that delays shipping. It’s becoming the only scalable way to trust the code you’re shipping. The question isn’t whether your organization will adopt formal verification, but whether you’ll be ready before your competitors are, before regulators require it, and before your first AI-generated bug costs more than a full verification effort would have.

The technology is here. The economics have flipped. The mandate is coming. The only thing left is execution.

Next Steps

Start by reading Martin Kleppmann’s full analysis and experimenting with Lean 4 or Rocq on a small, critical component. The future belongs to systems that can prove they’re correct.
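For a sense of scale, a first experiment can be very small. The Lean 4 sketch below pairs a toy implementation with a specification and a machine-checked proof. The function `twice` and the theorem name are hypothetical, and the proof assumes a recent Lean 4 toolchain in which the `omega` tactic is available without extra libraries.

```lean
-- Implementation: a deliberately tiny function (hypothetical example).
def twice (n : Nat) : Nat := n + n

-- Specification and proof: for every natural number n, twice n = 2 * n.
theorem twice_eq_two_mul (n : Nat) : twice n = 2 * n := by
  unfold twice  -- replace `twice n` with its definition, `n + n`
  omega         -- decision procedure for linear arithmetic closes the goal
```

If the proof were wrong, or the implementation changed so that the theorem no longer held, Lean would refuse to accept the file. That refusal is the property the rest of this article relies on.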