The microservices revolution promised independent teams, faster deployments, and infinite scalability. What it delivered for many early-stage companies was a distributed nightmare of network failures, coordination overhead, and infrastructure bills that can rival engineering salaries. The kicker? Even some of the companies that pioneered this architecture are now walking it back.

The industry has been running a decade-long experiment in premature optimization, and the bill just came due.

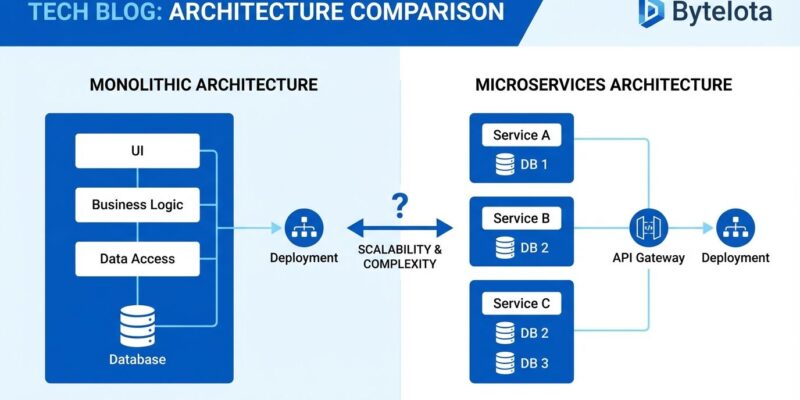

The Microservice Premium Isn’t a Theory, It’s Math

Martin Fowler coined the term “Microservice Premium” to describe the productivity cost of running a distributed system, a cost that only pays off once a system is complex enough. The 2025 consensus tries to turn this abstract concept into concrete thresholds, and the math is brutal: a commonly cited rule of thumb is 3 to 5 developers per service to maintain it properly. By that yardstick, a 15-person team managing 12 services isn’t being agile; it’s drowning in operational complexity that has nothing to do with customer value.
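Here is that arithmetic spelled out as a small Python sketch. The 3-to-5 developers-per-service ratio is the rule of thumb quoted above, not a measured constant, and `staffing_gap` is a hypothetical helper name.

```python
def staffing_gap(team_size: int, services: int,
                 min_per_service: int = 3, max_per_service: int = 5) -> dict:
    """Compare a team's headcount with the 3-5 developers-per-service heuristic."""
    needed_low = services * min_per_service
    needed_high = services * max_per_service
    return {
        "developers_per_service": team_size / services,
        "needed_range": (needed_low, needed_high),
        # Positive means the team is short of even the low end of the heuristic.
        "shortfall_vs_low_estimate": needed_low - team_size,
    }


if __name__ == "__main__":
    print(staffing_gap(team_size=15, services=12))
```

For the 15-person, 12-service team, the heuristic asks for 36 to 60 developers, so the team runs at 1.25 developers per service and is 21 people short even of the low estimate.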

Here’s how those thresholds are usually drawn:

- Teams under 10 developers: Monolithic architecture generally outperforms microservices in velocity and reliability

- 10-50 developer range: Modular monoliths shine, offering enforced boundaries without distributed-system complexity

- 50+ developers or $10M+ revenue: The benefits of microservices can finally justify their operational costs

A five-person startup running Kubernetes is like buying a cargo ship to cross a river. The vessel might be impressive, but you’ll spend more time maintaining it than moving forward.

Amazon’s 90% Reversal

Amazon Prime Video’s Video Quality Analysis team didn’t just tweak its distributed architecture; it replaced it, moving its audio/video monitoring service from serverless components orchestrated by AWS Step Functions to a single-process monolith. The result? Infrastructure costs dropped by more than 90%, and scaling capabilities improved. A big part of the savings came from eliminating the expensive S3 calls used to store intermediate video frames: once every component ran in the same process, frames could be passed in memory.
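To see why the in-memory handoff matters, here is a toy Python sketch, not Prime Video’s code. `CountingObjectStore` is a hypothetical stand-in for S3 that merely counts PUT and GET requests, and `detect_defects` is a placeholder for a real frame detector. Against a real object store, every counted request would be a billable, network-bound call.

```python
from dataclasses import dataclass, field


@dataclass
class CountingObjectStore:
    """Hypothetical stand-in for a remote object store such as S3: counts requests."""
    objects: dict = field(default_factory=dict)
    puts: int = 0
    gets: int = 0

    def put(self, key: str, value: bytes) -> None:
        self.puts += 1
        self.objects[key] = value

    def get(self, key: str) -> bytes:
        self.gets += 1
        return self.objects[key]


def detect_defects(frame: bytes) -> bool:
    # Placeholder detector: flags frames made entirely of zero bytes.
    return frame == bytes(len(frame))


def distributed_pipeline(frames: list[bytes], store: CountingObjectStore) -> int:
    """Converter stage writes each frame to the store; detector stage reads it back."""
    for i, frame in enumerate(frames):
        store.put(f"frame-{i}", frame)
    return sum(detect_defects(store.get(f"frame-{i}")) for i in range(len(frames)))


def in_process_pipeline(frames: list[bytes]) -> int:
    """Converter and detector share memory, so frames are passed by reference."""
    return sum(detect_defects(frame) for frame in frames)


if __name__ == "__main__":
    frames = [bytes(4), b"\x01\x02\x03\x04", bytes(4)]
    store = CountingObjectStore()
    print(distributed_pipeline(frames, store), store.puts, store.gets)  # 2 3 3
    print(in_process_pipeline(frames))  # 2
```

Both versions find the same two defective frames; only the distributed one pays for six object-store requests to do it.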

This wasn’t a struggling startup cutting corners. This was Amazon, one of the earliest large-scale adopters of service-oriented architecture, proving that monoliths can still work at scale when the use case fits. The irony is thicker than a region-failed API call.

The lesson isn’t that microservices are bad; it’s that architectural decisions should be driven by actual constraints, not Netflix’s blog posts. Amazon’s reversal validates what many developers have whispered but hesitated to say publicly: we’ve been over-engineering solutions to problems we don’t have.

The Operational Overhead That Eats Your Roadmap

Let’s talk about what microservices actually cost you, line by line:

A monolith needs:

– One deployment pipeline

– One database connection pool

– One monitoring dashboard

– One mental model for debugging

Microservices need:

– Service discovery (Consul, Eureka, or Kubernetes DNS)

– API gateways (Kong, Zuul, AWS API Gateway)

– Distributed tracing (OpenTelemetry, Jaeger)

– Centralized logging (ELK Stack, Splunk)

– Circuit breakers (Resilience4j, or Hystrix, which Netflix has put in maintenance mode)

– Kubernetes expertise (or equivalent orchestration)

The result? A large share of engineering time, by some estimates 40%, goes to maintaining infrastructure instead of building features. Simple changes touch four repositories. Deployments require coordinating version compatibility across services. Debugging a single user request means tracing through five services’ logs and correlating trace IDs.
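Here is what “correlating trace IDs” boils down to, as a minimal Python sketch. It assumes a hypothetical plain-text log format with an explicit `trace=` field on every line; tracing backends such as Jaeger automate this, but only once every service propagates the ID. `reconstruct_request` and the sample services are illustrative.

```python
from collections.abc import Iterable


def parse(line: str) -> dict:
    """Split one line of the hypothetical format '<epoch_ms> <service> trace=<id> <message>'."""
    ts, service, trace, message = line.split(" ", 3)
    return {"ts": int(ts), "service": service,
            "trace_id": trace.removeprefix("trace="), "message": message}


def reconstruct_request(trace_id: str, *service_logs: Iterable[str]) -> list[str]:
    """Merge several services' logs, keep one request's lines, and order them by time."""
    entries = [parse(line) for log in service_logs for line in log]
    matching = sorted((e for e in entries if e["trace_id"] == trace_id),
                      key=lambda e: e["ts"])
    return [f'{e["ts"]} {e["service"]}: {e["message"]}' for e in matching]


if __name__ == "__main__":
    gateway = ["100 gateway trace=abc GET /checkout", "101 gateway trace=xyz GET /home"]
    orders = ["105 orders trace=abc create order"]
    payments = ["112 payments trace=abc charge card timed out"]
    for line in reconstruct_request("abc", payments, gateway, orders):
        print(line)
```

In a monolith, the same request usually lands in a single process’s log, so most of this stitching disappears.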

As one engineering leader put it: “We chased microservices until our roadmap stalled. Incidents multiplied, deploys dragged, and simple changes touched four repos. The problem wasn’t scale. It was coordination cost.”

The Modular Monolith: Pragmatism Over Dogma

The emerging consensus isn’t “monoliths good, microservices bad”; it’s that the modular monolith is the pragmatic default. This pattern structures an application into independent modules with enforced boundaries, all within a single deployable artifact. You get modularity without distributed-system complexity.
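A minimal Python sketch of the pattern, assuming two hypothetical modules, `billing` and `orders`, collapsed into one file for brevity. Each module exposes a small public class and keeps its internals private by convention (the leading underscore), and the modules talk through ordinary in-process calls. Python does not enforce the underscore convention on its own; that job falls to boundary-checking tools.

```python
from dataclasses import dataclass


# --- billing module: only BillingApi is meant to be used by other modules ---
class _InvoiceTable:
    """Private to billing; other modules never touch it directly."""

    def __init__(self) -> None:
        self._rows: dict[str, int] = {}

    def insert(self, order_id: str, cents: int) -> None:
        self._rows[order_id] = cents

    def total(self) -> int:
        return sum(self._rows.values())


class BillingApi:
    """Public boundary of the billing module."""

    def __init__(self) -> None:
        self._invoices = _InvoiceTable()

    def invoice(self, order_id: str, cents: int) -> None:
        if cents <= 0:
            raise ValueError("invoice amount must be positive")
        self._invoices.insert(order_id, cents)

    def revenue_cents(self) -> int:
        return self._invoices.total()


# --- orders module: depends on billing only through its public API ---
@dataclass
class OrdersService:
    billing: BillingApi

    def place_order(self, order_id: str, cents: int) -> str:
        # A plain method call: no network hop, retry policy, or service discovery.
        self.billing.invoice(order_id, cents)
        return f"order {order_id} placed"


if __name__ == "__main__":
    billing = BillingApi()
    orders = OrdersService(billing)
    orders.place_order("A1", 1999)
    orders.place_order("A2", 500)
    print(billing.revenue_cents())  # 2499
```

If `billing` ever needs to become a service, `BillingApi` is the seam where a network client would slot in, and callers in `orders` would not change.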

Shopify maintains a 2.8-million-line Ruby monolith serving millions of merchants worldwide. Their “podded” architecture shards the databases rather than splitting the application into services, and Packwerk, a tool Shopify built, enforces module boundaries inside the codebase. They extract microservices only for specific needs like checkout and fraud detection.
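Packwerk is a Ruby tool, and the sketch below does not use or imitate its API. It is a hypothetical Python analogue of the same idea: each module declares which other modules it may depend on, and a static check built on the standard-library `ast` module flags imports that cross an undeclared boundary. The module names and the `ALLOWED_DEPENDENCIES` map are made up for illustration.

```python
import ast

# Hypothetical dependency declarations: module -> modules it may import from.
ALLOWED_DEPENDENCIES = {
    "orders": {"billing"},
    "billing": set(),
    "catalog": set(),
}


def imported_modules(source: str) -> set[str]:
    """Top-level package names imported by a piece of Python source (absolute imports only)."""
    names = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            names.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            names.add(node.module.split(".")[0])
    return names


def boundary_violations(module: str, source: str) -> list[str]:
    """Imports of other known modules that `module` has not declared as dependencies."""
    allowed = ALLOWED_DEPENDENCIES[module]
    return sorted(
        f"{module} -> {dep}"
        for dep in imported_modules(source)
        if dep in ALLOWED_DEPENDENCIES and dep != module and dep not in allowed
    )


if __name__ == "__main__":
    orders_src = "from billing.api import invoice\nimport catalog.internal\nimport json\n"
    print(boundary_violations("orders", orders_src))  # ['orders -> catalog']
```

Running a check like this in CI turns an accidental cross-module import into a failed build rather than a slowly growing tangle.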

GitHub runs a 2-million-line Rails monolith serving 50 million developers, 100 million repositories, and 1 billion API calls per day. They deploy 20 times per day and maintain their own Ruby version. Their gradual transition to a hybrid architecture keeps the monolith at the core and extracts services only when data proves the need.

These aren’t legacy systems limping along; they’re proof that monoliths scale massively when built with discipline. The difference between a “Big Ball of Mud” and a scalable monolith is enforced boundaries, not physical service separation.

Conway’s Law Is Not a Suggestion

One Reddit discussion of microservices makes a critical point: this is fundamentally an organizational and management pattern, not just a technical one. Microservices don’t just split codebases; they split responsibility, coordination, communication, and architectural decisions across teams.

Conway’s Law and Domain-Driven Design’s bounded contexts aren’t theoretical exercises. When you have 50+ engineers organized into autonomous teams with clear business domains, microservices align the architecture with the organization. When you have 5 engineers in a single team, microservices create artificial boundaries that slow everything down.

The organizational benefit outweighs the architectural cost only when team coordination, not code complexity, becomes the bottleneck. Most early-stage startups have the opposite problem: they need to move fast and change direction weekly, not optimize for independent team deployments.

The Technical Debt Time Bomb

Here’s what migration articles won’t tell you: moving to microservices without fixing your monolith’s culture just distributes your technical debt. If you lack code review discipline, testing standards, and domain understanding, you’ll replicate those problems across 12 services instead of one codebase.

A Stackademic analysis of monolith-to-microservices migrations emphasizes that root causes matter more than architecture. Common failure patterns include:

– Business delivery pressure leading to shortcuts in every service

– Weak large-scale design capability multiplied across repositories

– Lack of code review culture creating “dirty code” in 12 places instead of one

– Technical debt influx from postponed cleanup and inadequate processes

You can’t microservice your way out of a culture problem. If anything, poor culture decays faster in distributed systems where ownership is unclear and refactoring requires cross-service coordination.

When Microservices Actually Make Sense (The Checklist)

Extract services only when you have measured evidence of these conditions:

- Measured performance bottlenecks: One module handles 100x more traffic than the others

- Dramatically different scaling requirements: ML models needing GPU vs. CPU-bound APIs

- Genuine technology diversity needs: Python data science with a Java API layer

- Team coordination is provably the bottleneck: Conway’s Law in action with 50+ engineers

- $10M+ revenue to justify operational overhead: You can afford dedicated DevOps/SRE teams
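The first checklist item is the easiest to turn into a measurement. A minimal Python sketch, assuming you already collect per-module request counts over the same time window; the module names and numbers are made up for illustration. The 100x factor is the threshold from the checklist, while comparing against the median of the other modules (so one busy neighbour doesn’t mask an outlier) is an assumption of this sketch.

```python
from statistics import median


def traffic_outliers(requests_per_module: dict[str, int], factor: float = 100.0) -> list[str]:
    """Modules whose measured traffic is at least `factor` times the median of the others."""
    outliers = []
    for name, count in requests_per_module.items():
        others = [c for other, c in requests_per_module.items() if other != name]
        if not others:
            continue  # a single module has nothing to be compared against
        # Clamp the baseline to 1 so that `count >= factor * 0` can't flag
        # every module when the others are idle.
        baseline = max(median(others), 1)
        if count >= factor * baseline:
            outliers.append(name)
    return sorted(outliers)


if __name__ == "__main__":
    # Made-up request counts for one week, per module.
    measured = {"search": 1_200_000, "orders": 9_000, "accounts": 7_500, "admin": 300}
    print(traffic_outliers(measured))  # ['search']
```

A module that shows up here is a candidate for the conversation, not an automatic extraction; the remaining checklist items still apply.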

Don’t extract because “we might need to scale this differently someday.” That’s premature optimization dressed up as architecture.

The 2025 Decision Framework

The religious wars are over. Pragmatism won. Here’s the simple framework:

- Under 10 developers: Monolith, full stop. Focus on product-market fit, not infrastructure.

- 10-50 developers: Modular monolith with strict boundaries. Use tools like Packwerk or Java 9 modules.

- 50+ developers: Consider microservices for specific domains that meet the checklist above.
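The framework is simple enough to fit in a function. A minimal Python sketch: the text’s ranges overlap at exactly 50 developers, so treating 50 as the middle band is an assumption, as is the hypothetical `meets_extraction_checklist` flag, which stands in for the checklist above.

```python
def recommended_architecture(developers: int, meets_extraction_checklist: bool = False) -> str:
    """Map team size to the framework's default recommendation."""
    if developers < 10:
        return "monolith"
    if developers <= 50:  # assumption: 50 itself counts as the middle band
        return "modular monolith"
    # 50+ developers: extract services only for domains that pass the checklist.
    if meets_extraction_checklist:
        return "modular monolith + extracted services"
    return "modular monolith"


if __name__ == "__main__":
    for team in (5, 30, 120):
        print(team, recommended_architecture(team))
```

Note that even the largest team defaults to a modular monolith; size alone only earns the right to consider extraction.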

Start simple. Evolve when data proves you need complexity, not because everyone else is doing it. Companies like Shopify and GitHub prove that “later” might never need to come.

Amazon’s 90% cost cut isn’t an anomaly; it’s a correction. The microservices tax has been levied on startups for too long. It’s time we stopped paying it.

Your turn: Have you experienced the microservices tax? Did you migrate back to a monolith? Share your war stories below. The community needs real data, not more cargo-cult architecture.