The microservices gospel has preached a seductive narrative for over a decade: split your monolith, and you’ll unlock independent evolution, faster deployments, and team autonomy. But the industry’s collective experience is revealing a heretical truth: refactoring across service boundaries is often an order of magnitude harder than evolving code inside a well-structured monolith. The architectural culture war isn’t heating up; it’s quietly ending in favor of pragmatism, and the winners are wielding IDE shortcuts instead of service meshes.

The IDE Shortcut vs. The Cross-Team Nightmare

A senior architect recently observed that the industry has landed on a “Golden Stack” of pragmatism, where the default starting point is a modular monolith. The reasoning is brutally simple: refactoring a boundary inside a monolith is an IDE shortcut; refactoring it between services is a cross-team nightmare.

When you need to move a function from one module to another within the same process, you press F6 (Move) in IntelliJ IDEA and let static analysis handle the ripple effects. The compiler catches broken references in seconds. Your test suite runs in one pass. The entire operation completes before your coffee cools.

Now try moving that same function to a separate microservice. You’re immediately drafting API contracts, versioning strategies, and backward compatibility guarantees. You’re coordinating deployment windows across three teams. You’re implementing the Outbox Pattern to maintain eventual consistency, adding circuit breakers and retries, and praying your idempotency logic holds under network partitions. What took seconds now consumes weeks and involves a cast of dozens.
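To make the idempotency concern concrete, here is a minimal sketch of a receiver-side guard that runs an operation at most once per idempotency key, so a retried request does not repeat its side effect. The class name IdempotentExecutor and the in-memory map are assumptions for illustration; the article does not describe a specific implementation, and a real service would need a durable store shared across instances.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Supplier;

/** Hypothetical receiver-side guard: runs an operation at most once per idempotency key. */
final class IdempotentExecutor<R> {
    // In-memory for illustration only; a real service would need a durable, shared store,
    // ideally written in the same transaction as the side effect itself.
    private final Map<String, R> resultsByKey = new ConcurrentHashMap<>();

    /**
     * Returns the stored result if the key was seen before; otherwise runs the
     * operation once and remembers its result. A null result is not remembered.
     */
    R execute(String idempotencyKey, Supplier<R> operation) {
        return resultsByKey.computeIfAbsent(idempotencyKey, key -> operation.get());
    }
}
```

The caller sends the same key on every retry of a logical request, so a duplicate delivery returns the first result instead of charging or notifying twice. Inside a monolith the equivalent call is a single method invocation in a single transaction, and none of this is needed.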

This isn’t theoretical. One engineer described their AWS serverless nightmare: “Lambdas calling lambdas, Rube Goldberg would be proud!” They spent months just understanding the call graph before reverting to a containerized monolith. The hidden costs of microservices compound until your AWS bill becomes “a work of fiction, dominated not by compute, but by NAT Gateway fees and cross-AZ data transfer.”

The Distribution Tax: Paying for Complexity You Don’t Need

Every microservice extraction triggers a mandatory tax bill. The moment you split, you must implement:

- Contract versioning and compatibility layers (because you can’t atomically refactor both sides)

- Distributed transaction patterns (Outbox, Saga, Two-Phase Commit)

- Resilience primitives (circuit breakers, retries, timeouts, bulkheads)

- Observability infrastructure (distributed tracing, correlation IDs, service mesh)

- Network security (mTLS, service-to-service auth, secret rotation)
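As one illustration of the resilience primitives in this list, the following is a minimal circuit breaker sketch: it opens after a number of consecutive failures, fails fast to a fallback while open, and lets a trial call through once a cool-down has passed. The class name SimpleCircuitBreaker, its parameters, and the injected clock are all assumptions for illustration; production systems typically rely on an established resilience library rather than hand-rolled code like this.

```java
import java.util.function.LongSupplier;
import java.util.function.Supplier;

/** Minimal circuit breaker sketch: opens after N consecutive failures, retries after a cool-down. */
final class SimpleCircuitBreaker {
    private final int failureThreshold;
    private final long openMillis;
    private final LongSupplier clock;    // injected so tests can control time
    private int consecutiveFailures = 0;
    private long openedAt = -1;          // -1 means the circuit is closed

    SimpleCircuitBreaker(int failureThreshold, long openMillis, LongSupplier clock) {
        this.failureThreshold = failureThreshold;
        this.openMillis = openMillis;
        this.clock = clock;
    }

    /** Simplification: the lock is held during the remote call, which serializes callers. */
    synchronized <T> T call(Supplier<T> remoteCall, Supplier<T> fallback) {
        if (openedAt >= 0 && clock.getAsLong() - openedAt < openMillis) {
            return fallback.get();       // open: fail fast without touching the network
        }
        try {
            T result = remoteCall.get(); // closed, or a trial call after the cool-down
            consecutiveFailures = 0;
            openedAt = -1;
            return result;
        } catch (RuntimeException e) {
            consecutiveFailures++;
            if (consecutiveFailures >= failureThreshold) {
                openedAt = clock.getAsLong(); // (re)open the circuit
            }
            return fallback.get();
        }
    }
}
```

Even this toy version carries state, timing, and a fallback policy that someone has to choose and test. Every remote call in the system needs a decision like this; an in-process method call needs none.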

One team learned this the hard way at 3:14 AM when a memory leak in a minor “Notification Preference” service triggered an mTLS handshake retry storm that cascaded through their mesh, eventually OOM-killing their core “Order Management” service. Twelve hops of JSON serialization and mTLS overhead created a system so brittle that a single config change flattened production.

The kicker? They were paying this tax for features they didn’t have and traffic they didn’t serve. They weren’t scaling; they were funding complexity for its own sake. This operational visibility crisis means most teams can’t even predict what will break when they change a single line of code in one service.

Data Refactoring: The Point of No Return

While code refactoring gets the headlines, data refactoring is where migrations truly succeed or fail. One engineering team faced a multi-terabyte legacy database that felt impossible to untangle without massive downtime. Their microservices architecture, 45 services for 30 engineers, had turned into “Resume-Driven Development” where Istio sidecars outnumbered business logic.

The fundamental problem: in a monolith backed by a database with transactional DDL (PostgreSQL, for example), you can refactor the schema within a single transaction. Add a column, backfill data, drop the old column: all atomic, all rollback-able. In microservices, splitting a table requires:

- Creating a new service with its own database

- Setting up dual-write logic (write to both old and new)

- Implementing a backfill job to migrate historical data

- Maintaining read reconciliation between systems

- Coordinating a cutover moment where writes stop going to the old system

- Finally, after months, dropping the original table
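The dual-write, backfill, and reconciliation steps above can be sketched as follows. This is a deliberately simplified model under stated assumptions: plain in-memory maps stand in for the old table and the new service's database, and the class and method names (PreferenceMigration, write, backfill, findMismatches) are hypothetical rather than taken from any team's actual migration.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Objects;
import java.util.Set;
import java.util.TreeSet;

/** Hypothetical migration helper; plain maps stand in for the old table and the new store. */
final class PreferenceMigration {
    final Map<String, String> oldStore = new HashMap<>();
    final Map<String, String> newStore = new HashMap<>();

    /** Dual write: every write goes to both stores while the migration is in flight. */
    void write(String userId, String preference) {
        oldStore.put(userId, preference);
        newStore.put(userId, preference); // in production this second write can fail on its own
    }

    /** Backfill: copy historical rows without overwriting values the dual write already produced. */
    int backfill() {
        int copied = 0;
        for (Map.Entry<String, String> row : oldStore.entrySet()) {
            if (newStore.putIfAbsent(row.getKey(), row.getValue()) == null) {
                copied++;
            }
        }
        return copied;
    }

    /** Reconciliation: ids whose values differ or that exist in only one store. */
    Set<String> findMismatches() {
        Set<String> ids = new TreeSet<>(oldStore.keySet());
        ids.addAll(newStore.keySet());
        ids.removeIf(id -> Objects.equals(oldStore.get(id), newStore.get(id)));
        return ids;
    }
}
```

In this model the two writes can never diverge. Across a network they can, which is exactly why the reconciliation step exists and why cutover is only safe once findMismatches stays empty under live traffic.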

Each step introduces new failure modes. The “Notification Preference” service’s memory leak started because they were maintaining dual-writes to three different data stores for compliance reasons. The shared database that microservices advocates deride as an anti-pattern was actually the only thing keeping their data consistent.

Modular Monoliths: The Boundary Incubator

The pragmatic solution isn’t to abandon modularity; it’s to validate boundaries at runtime inside one deployable. This “modular monolith” or “modulith” approach gives you structural clarity without distribution costs.

Spring Boot developers are formalizing this with Spring Modulith. You define modules as top-level packages:

    // package-info.java
    @org.springframework.modulith.ApplicationModule(displayName = "Orders")
    package com.example.shop.order;

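Other modules may use only a module’s published API. By default, Spring Modulith treats the public types in a module’s base package as that API and everything in sub-packages as internal; a dedicated api sub-package has to be exposed explicitly with @NamedInterface. A test in the build enforces this: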

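    import org.junit.jupiter.api.Test;
    import org.springframework.modulith.core.ApplicationModules;

    class ModulithStructureTests {

        @Test
        void modulesStayWithinBoundaries() {
            // Build the module model from the application's main class
            ApplicationModules modules = ApplicationModules.of(ShopApplication.class);
            modules.verify(); // Fails if modules reach into internal packages
        }
    }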

Compare calling another module versus another service:

Microservice call:

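    import org.springframework.web.reactive.function.client.WebClient;
    import reactor.core.publisher.Mono;

    class BillingClient {

        private final WebClient webClient;

        BillingClient(WebClient webClient) {
            this.webClient = webClient;
        }

        // Remote call: crosses the network and deserializes JSON on every invocation
        Mono<BillingSummary> fetchBillingSummary(String customerId) {
            return webClient.get()
                    .uri("http://billing-service/api/billing/{id}", customerId)
                    .retrieve()
                    .bodyToMono(BillingSummary.class);
        }
    }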

Modular monolith call:

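    class OrderPriceCalculator {

        private final BillingAccess billingAccess;

        OrderPriceCalculator(BillingAccess billingAccess) {
            this.billingAccess = billingAccess;
        }

        Money calculatePrice(String customerId, OrderLines lines) {
            // In-process call through the billing module's API
            BillingSummary summary = billingAccess.loadSummary(customerId);
            // ... pricing logic
        }
    }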

The second version is a plain method call. No network. No serialization. No HTTP timeouts. Testing requires a simple stub, not a WireMock server. When you need to refactor, you use your IDE, not your DevOps pipeline.
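To show what “a simple stub” can look like, here is a small self-contained sketch. Everything in it is an assumption for illustration: the article does not show the shape of BillingAccess or BillingSummary, and the discount rule below is made up to stand in for the elided pricing logic. It assumes Java 17 or later for the record type.

```java
/** Assumed shape of the billing module's published API; the article does not show it. */
interface BillingAccess {
    BillingSummary loadSummary(String customerId);
}

/** Assumed, simplified summary type: a discount in whole percent. */
record BillingSummary(String customerId, int discountPercent) {}

/** Simplified stand-in for the article's OrderPriceCalculator, with a made-up pricing rule. */
final class OrderPriceCalculator {
    private final BillingAccess billingAccess;

    OrderPriceCalculator(BillingAccess billingAccess) {
        this.billingAccess = billingAccess;
    }

    /** Illustrative rule only: apply the customer's discount to a subtotal given in cents. */
    long calculatePriceCents(String customerId, long subtotalCents) {
        BillingSummary summary = billingAccess.loadSummary(customerId); // plain in-process call
        return subtotalCents * (100 - summary.discountPercent()) / 100;
    }
}
```

Because BillingAccess has a single method, a test can pass a lambda as the stub: new OrderPriceCalculator(id -> new BillingSummary(id, 10)).calculatePriceCents("c-42", 20_000) evaluates to 18000 with no HTTP server, port, or JSON fixture involved.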

The Organizational Scaling Trap

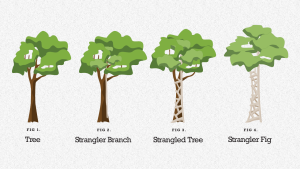

Microservices advocates correctly note that microservices solve “the too many cooks in the kitchen problem.” But this reveals the uncomfortable truth: microservices are primarily an organizational tool, not a technical one. They enable team independence by making cross-team changes so painful that people avoid them.

One Hacker News commenter put it bluntly: “Microservices are the most efficient way to integrate CNCF projects deeply with your platform at any size.” The ecosystem has become self-justifying: you need service mesh, observability stacks, and orchestration platforms because you have microservices, and you have microservices because you need to manage team coordination.

But this creates a new problem: when a feature request cuts across service boundaries (which happens constantly), you’re forced into “distributed monolith territory.” The neat story of team ownership falls apart when Team A gets dissolved and Team B inherits a service they didn’t build. Maintenance work (security vulnerabilities, stack modernization, migrations) becomes siloed rather than shared. Blast radius analysis becomes impossible because no one can trace dependencies across 500 microservices.

Meanwhile, teams running modular monoliths with 29 developers deploy 20+ times per day to production. The “independent deployments” argument evaporates when you have proper CI/CD and feature flags. The real constraint isn’t technical; it’s organizational maturity.
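A feature flag is what lets many teams ship unfinished work in the same deployable without blocking each other: the new code path is deployed dark and switched on separately from the release. The sketch below is a minimal in-memory version; the class name FeatureFlags and its methods are hypothetical, and real setups usually read flags from configuration or a dedicated flag service.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Supplier;

/** Hypothetical in-memory flag registry, for illustration only. */
final class FeatureFlags {
    private final Set<String> enabled = ConcurrentHashMap.newKeySet();

    void enable(String flag) {
        enabled.add(flag);
    }

    void disable(String flag) {
        enabled.remove(flag);
    }

    boolean isEnabled(String flag) {
        return enabled.contains(flag);
    }

    /** Runs the new code path only when the flag is on, otherwise the existing one. */
    <T> T choose(String flag, Supplier<T> newPath, Supplier<T> oldPath) {
        return isEnabled(flag) ? newPath.get() : oldPath.get();
    }
}
```

With this in place, merging and deploying a change no longer means releasing it, which removes much of the pressure to give each team its own deployment unit.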

The AI Development Multiplier

Here’s the cutting-edge twist that makes this heresy timely: large language models struggle with microservices. As one developer noted, “LLMs in their default state without purpose-designed tooling suck at microservices compared to a monorepo.”

An AI assistant can reason about a single codebase, tracing call graphs and refactoring across modules in one shot. But ask it to coordinate changes across five repositories with OpenAPI specs, and it degrades to “language translation on the fly,” taxing its attention and producing inconsistent results. The cognitive overhead of stitching together distributed systems doesn’t just slow humans; it breaks AI agents.

This is pushing some teams toward “FaaS + shared libraries” as a hybrid: you get isolation without losing code sharing. The irony? We’re rediscovering the principles of modular monoliths, just with different packaging.

When to Actually Split (The Honest Answer)

None of this means microservices are always wrong. The legitimate use cases are specific:

- Netflix-scale problems where horizontal scaling is mandatory and components have wildly different resource profiles

- Heterogeneous workloads requiring different tech stacks (e.g., Python ML models alongside Go APIs)

- Organizational constraints where Conway’s Law is immutable and teams genuinely cannot coordinate

- Principle of least privilege for third-party API interactions, where blast radius containment is critical

But even then, the pattern is modular monolith first, extract second. You don’t split until you know your boundaries are stable. As one architect advised: “Only carve out a module if it has unique resource demands or requires a different tech stack. But you pay the Distribution Tax.”

The Pragmatic Consolidation

The industry is reaching what some call “Peak Backend Architecture.” The core principles (modular boundaries, hexagonal architecture, observability) are stabilizing. The .NET world has settled on this. Java/Spring Boot has Spring Modulith. Even Node.js frameworks like NestJS are pushing Clean Architecture patterns.

The debate isn’t monolith vs. microservices anymore. It’s how to validate boundaries cheaply before paying the distribution tax. The answer is increasingly: use your IDE, not your infrastructure budget.

Teams are quietly reverting from microservices to monoliths when they realize the complexity outweighs the benefits. The bleeding edge isn’t about splitting systems into smaller pieces; it’s about knowing when to keep them together.

The simplest thing that could possibly work is often a modular monolith with a single database, horizontal scaling behind a load balancer, and extraction as a last resort. This isn’t architectural cowardice; it’s economic rationality. Your AWS bill will thank you. Your 3 AM pager will thank you. And your IDE’s refactoring tools will finally get the workout they were built for.

The microservices revolution promised freedom but delivered coordination overhead. The counter-revolution isn’t about returning to big balls of mud; it’s about recognizing that the best distributed system is the one you don’t build until you absolutely have to.