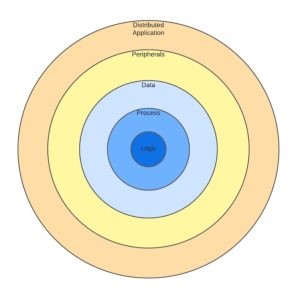

Does adding offset and limit parameters to your domain interface constitute UI leakage? A developer working with hexagonal architecture posted exactly this dilemma: does listPlayers(int offset, int limit) pollute the core with presentation concerns? The answer that emerged from the discussion cuts through dogma and is more nuanced than a yes or no: pagination isn’t UI leakage; it’s essential complexity at the boundary. Your implementation, however, probably leaks storage semantics anyway.

The Boundary Where Purity Meets Reality

The original concern is understandable. In hexagonal architecture, we zealously protect the core domain from external concerns. UI details, database schemas, and network protocols belong in adapters, not in the heart of our business logic. So when a UI designer asks for pagination, our reflex is to push it as far outward as possible.

But here’s the architectural tension: inbound ports exist to serve primary drivers. The port doesn’t represent the domain’s internal worldview; it represents the minimal contract the outside world needs to interact with your system. If your system has a million players, returning all of them in a single List<Player> isn’t just impractical; it’s a denial-of-service attack waiting to happen.

Why Offset/Limit Is the Actual Culprit

Consider what happens when you implement pagination naively:

    interface ForListingPlayers {
        List<Player> listPlayers(int offset, int limit);
    }

This looks innocent, but it encodes several storage-layer assumptions:

- Stable ordering: Your results must maintain consistent order between requests, or pages will drift

- Contiguous data: Rows inserted or deleted between requests shift every later page, so items get duplicated or skipped

- Database-specific semantics: The concept of “offset” is meaningless for many NoSQL stores or event streams
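The drift described in the list above is easy to reproduce. The sketch below is only an illustration: it uses an in-memory list as a stand-in for a table sorted by name, and the class name, helper, and player names are all hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical in-memory stand-in for a table ordered by name.
class OffsetPagingDemo {

    // Naive offset/limit applied to whatever the data looks like right now.
    static List<String> page(List<String> rows, int offset, int limit) {
        int from = Math.min(offset, rows.size());
        int to = Math.min(from + limit, rows.size());
        return new ArrayList<>(rows.subList(from, to));
    }

    public static void main(String[] args) {
        List<String> players = new ArrayList<>(List.of("bea", "cal", "dee", "eli"));

        List<String> first = page(players, 0, 2);

        // Between the two requests, a row is inserted that sorts before
        // the caller's current position.
        players.add(0, "abe");

        // Offset 2 now points one row earlier than the caller expects,
        // so "cal" is returned a second time.
        List<String> second = page(players, 2, 2);

        System.out.println(first + " then " + second);
        // prints "[bea, cal] then [cal, dee]"
    }
}
```

A deletion in the same place has the opposite effect: every later row moves up by one and the caller silently skips an item.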

Essential Complexity Isn’t Identical to Domain Complexity

A critical insight from the discussion is that essential complexity ≠ domain complexity. Your domain model of “players” doesn’t inherently include pagination; players don’t care about pages. But your system has essential complexity that comes from its constraints: memory limits, network latency, human attention spans. Pagination is a response to those constraints, not to a presentation preference.

The CQRS Distraction

Some commenters suggested routing reads around the hexagon entirely: a CQRS approach where the UI queries a separate read model directly. This can work, but it relocates complexity rather than eliminating it; the read model still has to paginate.

    // Command side (in the hexagon)
    interface ForManagingPlayers {
        void registerPlayer(PlayerCommand command);
    }

    // Query side (bypassing the hexagon)
    interface PlayerReadModel {
        Page<PlayerView> listPlayers(PageRequest request);
    }

When Pagination Actually Belongs in the UI

There’s a legitimate case where pagination is purely presentational: when your data volume is provably small. If your system will never exceed 100 players, implementing pagination in the application layer is premature optimization. You could fetch all players and slice them in the UI adapter:

    // In the UI adapter, not the domain
    const page = allPlayers.slice(offset, offset + limit);
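If the UI adapter is written in Java rather than TypeScript, the same slice needs explicit clamping, because List.subList throws on out-of-range indices where Array.prototype.slice quietly truncates. A minimal sketch, with UiPaging and slice as hypothetical names:

```java
import java.util.List;

// Hypothetical helper living in the UI adapter, not in the core.
class UiPaging {

    // Returns the requested window, or an empty list when offset is past the end.
    static <T> List<T> slice(List<T> all, int offset, int limit) {
        if (offset < 0 || limit < 0) {
            throw new IllegalArgumentException("offset and limit must be non-negative");
        }
        int from = Math.min(offset, all.size());
        // Clamp against the remaining count so offset + limit cannot overflow int.
        int to = from + Math.min(limit, all.size() - from);
        // Copy so the caller does not hold a view onto the full list.
        return List.copyOf(all.subList(from, to));
    }
}
```

This only makes sense while the full list comfortably fits in memory, which is exactly the small-volume case described above.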

Implementation Patterns That Preserve Boundaries

1. Define an Opaque Pagination Contract

    // In the application core (inbound port)
    public record PageRequest(int size, Optional<String> cursor) { }

    public record Page<T>(List<T> items, Optional<String> nextCursor) { }

    public interface PlayerListingPort {
        Page<Player> listPlayers(PageRequest request);
    }

2. Let the Storage Adapter Translate
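The adapter in this step has to convert between the opaque cursor string and whatever the database understands, via decodeCursor and encodeCursor helpers that the article leaves undefined. One way they could look is sketched here, assuming the cursor is a URL-safe Base64 string wrapping a plain row offset. The encoding, the "o:" prefix, and the class name are all assumptions made for illustration.

```java
import java.nio.charset.StandardCharsets;
import java.util.Base64;
import java.util.Optional;

// Hypothetical codec: callers treat the cursor as opaque;
// only the storage adapter knows it wraps a row offset.
class OffsetCursorCodec {

    private static final String PREFIX = "o:";

    static String encodeCursor(int offset) {
        byte[] raw = (PREFIX + offset).getBytes(StandardCharsets.UTF_8);
        return Base64.getUrlEncoder().withoutPadding().encodeToString(raw);
    }

    // An absent cursor means "start from the first row".
    // Malformed input surfaces as IllegalArgumentException: the Base64 decoder
    // throws it directly, and NumberFormatException is a subclass of it.
    static int decodeCursor(Optional<String> cursor) {
        if (cursor.isEmpty()) {
            return 0;
        }
        byte[] decoded = Base64.getUrlDecoder().decode(cursor.get());
        String raw = new String(decoded, StandardCharsets.UTF_8);
        if (!raw.startsWith(PREFIX)) {
            throw new IllegalArgumentException("Unrecognized cursor");
        }
        int offset = Integer.parseInt(raw.substring(PREFIX.length()));
        if (offset < 0) {
            throw new IllegalArgumentException("Unrecognized cursor");
        }
        return offset;
    }
}
```

Because callers never parse the string, the adapter can later switch to a different payload (a timestamp, a last-seen key) without touching the port.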

    // In the database adapter
    public class SqlPlayerRepository implements PlayerListingPort {
        @Override
        public Page<Player> listPlayers(PageRequest request) {
            // Decode the cursor into whatever your DB needs
            // (a plain offset here; it could just as well be "offset:timestamp")
            int offset = decodeCursor(request.cursor());
            List<Player> players = jdbc.query(/* ... OFFSET ? LIMIT ? */, offset, request.size());
            // A short page means there is nothing left, so don't hand out another cursor
            Optional<String> nextCursor = players.size() < request.size()
                ? Optional.empty()
                : Optional.of(encodeCursor(offset + players.size()));
            return new Page<>(players, nextCursor);
        }
    }

3. The UI Remains Agnostic

    // UI layer knows nothing about offsets
    async function loadPlayers(pageSize: number, cursor?: string) {
        const response = await api.post('/players', { size: pageSize, cursor });
        return {
            players: response.items,
            nextCursor: response.nextCursor
        };
    }

The Scale Threshold Matters

| Volume (rows) | Pagination Location | Rationale |
|---|---|---|
| < 1,000 | UI adapter | Memory overhead is negligible; simplicity wins |
| 1,000 – 100,000 | Application core | Essential for performance; use a cursor-based abstraction |
| > 100,000 | Consider CQRS | Independent read scaling may become necessary |

Key Takeaways for Architects

- Stop treating all non-domain concerns as UI leakage. Some concerns, like pagination, emerge from operational realities, not presentation whims.

- The implementation detail matters more than the presence. offset/limit leaks storage semantics; cursor-based pagination hides them.

- Essential complexity includes environmental constraints. A system that doesn’t account for memory, latency, and scale isn’t complete.

- CQRS is a scaling pattern, not a purity pattern. Don’t reach for it just to keep your domain “clean”; you’re trading one complexity for another.

- Know your scale threshold. Optimize for your actual constraints, not hypothetical ones. Document why pagination lives where it does.
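To make the contrast between offset/limit and a cursor concrete, here is a sketch in which an adapter switches to keyset-style paging (the cursor is the last name returned) behind the same PageRequest and Page shapes. Keyset paging is a swapped-in technique the article does not name, the in-memory store stands in for a real database, and InMemoryPlayerNames and PagingClient are hypothetical. A production adapter would also encode the cursor rather than expose the raw name.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.NavigableSet;
import java.util.Optional;
import java.util.TreeSet;

// Same shapes as the article's contract, repeated so the sketch compiles on its own.
record PageRequest(int size, Optional<String> cursor) { }
record Page<T>(List<T> items, Optional<String> nextCursor) { }

// Hypothetical adapter: names are unique and kept sorted,
// so "everything strictly after X" is always well defined.
class InMemoryPlayerNames {
    private final TreeSet<String> names = new TreeSet<>();

    void add(String name) {
        names.add(name);
    }

    Page<String> list(PageRequest request) {
        if (request.size() < 1) {
            throw new IllegalArgumentException("page size must be positive");
        }
        // Resume strictly after the cursor, or from the start when there is none.
        NavigableSet<String> remaining = request.cursor()
            .map(last -> names.tailSet(last, false))
            .orElse(names);

        List<String> items = new ArrayList<>();
        for (String name : remaining) {
            if (items.size() == request.size()) break;
            items.add(name);
        }
        // Only hand out a cursor when rows exist beyond this page.
        boolean more = remaining.size() > items.size();
        Optional<String> next = more
            ? Optional.of(items.get(items.size() - 1))
            : Optional.empty();
        return new Page<>(items, next);
    }
}

class PagingClient {
    // Follows nextCursor until the adapter stops returning one;
    // the caller never learns what the cursor contains.
    static List<String> drain(InMemoryPlayerNames source, int pageSize) {
        List<String> all = new ArrayList<>();
        Optional<String> cursor = Optional.empty();
        do {
            Page<String> page = source.list(new PageRequest(pageSize, cursor));
            all.addAll(page.items());
            cursor = page.nextCursor();
        } while (cursor.isPresent());
        return all;
    }
}
```

With this adapter, inserting a name that sorts before the caller's position between two requests no longer shifts the next page, and the calling code is identical to what it would be against an offset-backed adapter.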

The original question, “is pagination UI leakage?”, turns out to be the wrong question. The right question is: “Does my pagination contract hide implementation details while acknowledging operational realities?” When you frame it that way, the path forward becomes clear: keep pagination in the core, but make it opaque.