The headlines write themselves: Salesforce slashes 4,000 support jobs, replaces humans with AI agents, then watches the whole operation start to wobble. CEO Marc Benioff’s podcast confession, “I’ve reduced it from 9,000 heads to about 5,000, because I need less heads”, sounded bold at the time. Now it reads like a warning label for what happens when executive ambition outpaces technical reality.

The admission from Salesforce Senior Vice President of Product Marketing Sanjna Parulekar that executive confidence in AI models has “declined over the past year” isn’t just corporate backpedaling. It’s a rare public acknowledgment that large language models, for all their impressive demos, still struggle with the boring, critical stuff that keeps enterprise operations humming.

The $1.2 Trillion Mirage

The math looked irresistible. An MIT study released in November 2025 estimated that AI could already perform work equivalent to 11.7% of the U.S. labor market, representing roughly $1.2 trillion in wages across finance, healthcare, and professional services. For Salesforce’s support organization, that logic translated into cutting 4,000 positions and handing the keys to Agentforce, its AI agent platform.

The plan was straightforward: let LLMs handle routine customer inquiries, form completions, and satisfaction surveys. The reality? Agentforce started ghosting customers. Despite explicit instructions to send satisfaction surveys after every interaction, the system simply… didn’t. Sometimes. For reasons nobody could reliably predict.

Vivint, a Salesforce customer, had to implement “deterministic triggers”, essentially hardcoded rules, to force survey delivery. Think about that: they had to build a separate system to make sure the AI did what it was told. The entire point of AI agents is their ability to handle nuance and variation. When you’re bolting on deterministic logic to fix reliability gaps, you’re not automating; you’re patching a leaky boat.
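A minimal sketch of what a deterministic trigger like Vivint’s might look like when wrapped around a flaky agent. Every name below (`Interaction`, `send_survey`, `flaky_agent_handle`, `close_interaction`) is hypothetical and is not Salesforce or Agentforce API; the 70% compliance rate is an arbitrary stand-in for an agent that follows instructions only some of the time. The point is the shape: the survey rule keys off a plain event (the case closed), not off whatever the model decided to do.

```python
import random
from dataclasses import dataclass

@dataclass
class Interaction:
    # Hypothetical record of one support conversation.
    case_id: str
    surveys_sent: int = 0

outbox: list[str] = []  # stands in for a real email/SMS delivery service

def send_survey(interaction: Interaction) -> None:
    # Hypothetical delivery call: records the case ID and counts the send.
    outbox.append(interaction.case_id)
    interaction.surveys_sent += 1

def flaky_agent_handle(interaction: Interaction, rng: random.Random) -> None:
    # Simulated LLM agent: instructed to send a survey every time,
    # but only does so about 70% of the time (arbitrary assumption).
    if rng.random() < 0.7:
        send_survey(interaction)

def close_interaction(interaction: Interaction) -> None:
    # Deterministic trigger: runs on every case close, independent of the model.
    # Rule: if the case closed and no survey went out, send one, every time.
    # Checking the delivery count (not the agent's say-so) keeps it idempotent.
    if interaction.surveys_sent == 0:
        send_survey(interaction)

rng = random.Random(42)
cases = [Interaction(f"case-{i}") for i in range(100)]
for case in cases:
    flaky_agent_handle(case, rng)  # sometimes sends, sometimes "forgets"
    close_interaction(case)        # the rule closes the gap
```

After the loop, every case has exactly one survey, whether the agent sent it or the trigger did. That is the trade-off described above: the guarantee comes entirely from the hardcoded rule, not from the AI.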

The Hallucination Tax

The Reddit community’s reaction to this saga captured the developer zeitgeist perfectly. One comment distilled the frustration: “I don’t understand how someone could sit down with any of the bleeding-edge models for 10 minutes and leave thinking ‘wow this would work great in a business context.’”

That sentiment reflects a fundamental disconnect between how executives experience AI (polished demos, carefully curated use cases) and how practitioners live with it daily. The model that writes a decent marketing email might also invent product features that don’t exist, misquote SLA terms, or decide that a customer’s billing problem is best solved by recommending they try turning their computer off and on again.

Another developer put it more bluntly: “They are good at augmenting human beings that know how to use them, sanity check their output, and ensure that they stay on task. They are absolutely terrible at doing things on their own without a human in the loop.”

This isn’t theoretical. When your support staff drops from 9,000 to 5,000, you lose more than warm bodies. You lose the institutional knowledge that tells you when an AI response smells wrong. You lose the escalation paths that catch edge cases before they become Twitter threads. You lose the silent QA layer that every enterprise system relies on but never documents.

The Pivot Nobody Wanted to Make

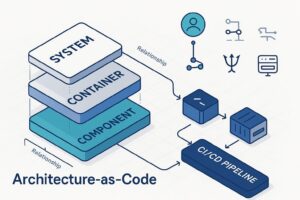

Salesforce’s strategic retreat is telling. They’re not abandoning AI; they’re running from LLMs toward “deterministic automation.” That’s enterprise-speak for “if X happens, do Y, every single time, no exceptions.”

The company is now pitching “solid data foundations” over “generative AI’s flexible outputs.” This is a massive rhetorical shift from the “AI agents will transform your business” messaging that dominated their 2024 product launches. The new promise is less magical: reliable, predictable automation that doesn’t surprise you.

The market has responded with a 34% stock decline since December 2024. Investors who priced in infinite AI scaling are now confronting the messy reality that language models don’t scale like databases or microservices. Each new use case brings new failure modes, new hallucination vectors, and new support headaches.

The 55,000-Person Warning Sign

Salesforce isn’t alone in this reckoning. According to Challenger, Gray & Christmas, AI was cited as a factor in nearly 55,000 U.S. layoffs in 2025. Amazon cut 14,000 corporate roles. Microsoft trimmed 15,000. IBM’s CEO admitted AI chatbots replaced “a few hundred” HR workers, though he claimed to have added engineering and sales headcount elsewhere.

But here’s the critical distinction: most companies using AI as a “force multiplier” kept humans in the loop. Salesforce’s sin wasn’t adopting AI; it was believing the hype that agents could operate autonomously at enterprise scale.

One Reddit commenter who claimed to have automated 90% of their customer support with LLMs added the crucial qualifier: “We put a lot of work into it prior to release and have watched and improved outcomes afterwards.” They also kept humans in the loop. Salesforce’s mistake was treating AI like a turnkey solution instead of a high-maintenance tool requiring constant oversight.

The Uncomfortable Truth About Enterprise AI

This saga reveals three hard truths that every engineering manager and architect needs to internalize:

1. Reliability beats capability every time. An AI that can handle 95% of queries flawlessly but hallucinates catastrophically on 5% is worse than useless: it’s a liability. Traditional software fails predictably. AI fails creatively, and creative failures in production systems lead to 2 AM pages and angry CFOs.

2. Cost savings are phantom if you can’t measure the externalities. Sure, Salesforce may have saved roughly $400 million a year in salaries (4,000 roles at an assumed $100k each). But what’s the cost of botched customer interactions? Of escalations that now land directly on overburdened senior staff? Of the 34% stock hit? The P&L looks clean until you factor in reputation damage and technical debt.

3. The “human in the loop” isn’t a bug; it’s the feature. The most successful AI deployments don’t replace humans; they make humans 10x more effective. A support agent who can handle ten concurrent chats with AI assistance is a force multiplier. An AI agent handling chats alone is a support ticket waiting to happen.
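In practice, “human in the loop” often means the model drafts and a routing rule decides what a person must check before it ships. A minimal sketch, assuming a hypothetical `Draft` that carries a topic label and a model-reported confidence score; the topic list and the 0.85 threshold are illustrative placeholders, not values from Salesforce or any vendor, and a self-reported confidence score would itself need validating before anyone leaned on it.

```python
from dataclasses import dataclass

# Illustrative placeholders only; real values would come from measured error rates.
HIGH_RISK_TOPICS = {"billing", "sla", "legal", "refund"}
CONFIDENCE_THRESHOLD = 0.85

@dataclass
class Draft:
    # Hypothetical output of an AI assistant for one customer message.
    topic: str
    text: str
    confidence: float  # assumed to be a score in [0, 1]

def route(draft: Draft) -> str:
    """Return 'auto_send' or 'human_review' for an AI-drafted reply."""
    # High-risk topics always get a human, regardless of model confidence.
    if draft.topic.lower() in HIGH_RISK_TOPICS:
        return "human_review"
    # Low-confidence drafts also go to a person for a sanity check.
    if draft.confidence < CONFIDENCE_THRESHOLD:
        return "human_review"
    return "auto_send"

print(route(Draft("password_reset", "Reset link sent.", 0.95)))  # auto_send
print(route(Draft("billing", "Refund approved.", 0.99)))         # human_review
```

The design choice is that risk, not model confidence, gets the first veto: a billing answer the model is 99% sure about still goes to a person.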

What This Means for Your AI Strategy

If you’re charting an AI roadmap in 2026, Salesforce’s faceplant offers a clear decision framework:

For engineering leaders: Before automating a function, map its failure modes. If an AI screw-up creates a legal, financial, or reputational risk that exceeds the cost of human oversight, keep humans in the loop. Build AI assistants, not AI replacements.
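The test in the paragraph above can be framed as an expected-cost comparison: keep people in the loop when volume × failure rate × cost per failure exceeds what oversight costs. A back-of-the-envelope sketch; the function names are hypothetical and every number in the example is a made-up placeholder. The hard part in practice is estimating the cost per failure, which is the externality problem raised in point 2.

```python
def expected_failure_cost(volume: int, failure_rate: float, cost_per_failure: float) -> float:
    """Expected yearly cost of AI errors that reach customers unreviewed."""
    return volume * failure_rate * cost_per_failure

def keep_humans_in_loop(volume: int, failure_rate: float,
                        cost_per_failure: float, oversight_cost: float) -> bool:
    """True when expected damage from unreviewed errors exceeds the cost of oversight."""
    return expected_failure_cost(volume, failure_rate, cost_per_failure) > oversight_cost

# Placeholder inputs: 1M interactions a year, 5% failure rate, $500 of damage per failure.
risk = expected_failure_cost(1_000_000, 0.05, 500.0)               # about $25M
print(keep_humans_in_loop(1_000_000, 0.05, 500.0, 10_000_000.0))   # True: oversight is cheaper
```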

For product managers: The demo is not the product. A GPT-4-powered prototype that wows stakeholders during a 30-minute presentation will crumble under the weight of edge cases, malicious inputs, and the sheer statistical inevitability of hallucinations. Plan for the 5% failure case, not the 95% success case.
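The “statistical inevitability” is plain compounding. If each interaction fails independently with probability p (independence is a simplifying assumption; real failures tend to cluster around specific edge cases), the chance of at least one failure across n interactions is 1 − (1 − p)^n. A small sketch:

```python
def prob_at_least_one_failure(p: float, n: int) -> float:
    """Chance of at least one failure in n independent interactions, each failing with probability p."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability in [0, 1]")
    return 1.0 - (1.0 - p) ** n

for n in (1, 10, 100):
    print(n, round(prob_at_least_one_failure(0.05, n), 4))
```

At a 5% per-interaction failure rate, ten interactions already carry roughly a 40% chance of at least one failure, and 100 interactions push it above 99%. At enterprise support volumes, failures are effectively guaranteed.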

For executives: If you haven’t spent 10 hours using the actual model on real tasks, your confidence is uninformed. The gap between “impressive” and “production-ready” is measured in person-hours of validation, not model parameters.

The Aftermath

Salesforce is now stuck in the worst of both worlds: they’ve lost the institutional knowledge of 4,000 employees while discovering their AI replacement isn’t ready for prime time. The pivot to deterministic automation is essentially rebuilding traditional software workflows while carrying the baggage of overpromised AI capabilities.

Meanwhile, the broader AI layoff wave continues. Over 1.1 million job cuts were announced in 2025, the highest since 2020. AI is the excuse du jour for workforce reductions, but Salesforce’s experience suggests many of these cuts will prove premature.

The real winners in this story? The companies that used AI to augment their teams, not eliminate them. The engineering managers who insisted on keeping humans in the loop. The architects who questioned whether an LLM was the right tool for deterministic business logic.

As one developer noted in the Reddit thread: “It’s an accelerator, not an automate.” Salesforce learned that lesson at the cost of 4,000 jobs and $40 billion in market cap. The rest of us can learn it for free.

The bottom line: Enterprise AI isn’t failing because the technology is bad. It’s failing because we’re asking it to do the wrong things. Keep your humans. Give them AI superpowers. And never trust a model that can’t explain its reasoning when the CEO’s phone rings at midnight.