llama.cpp Adds Anthropic API Support, Rendering Cloud API Lock-In Obsolete

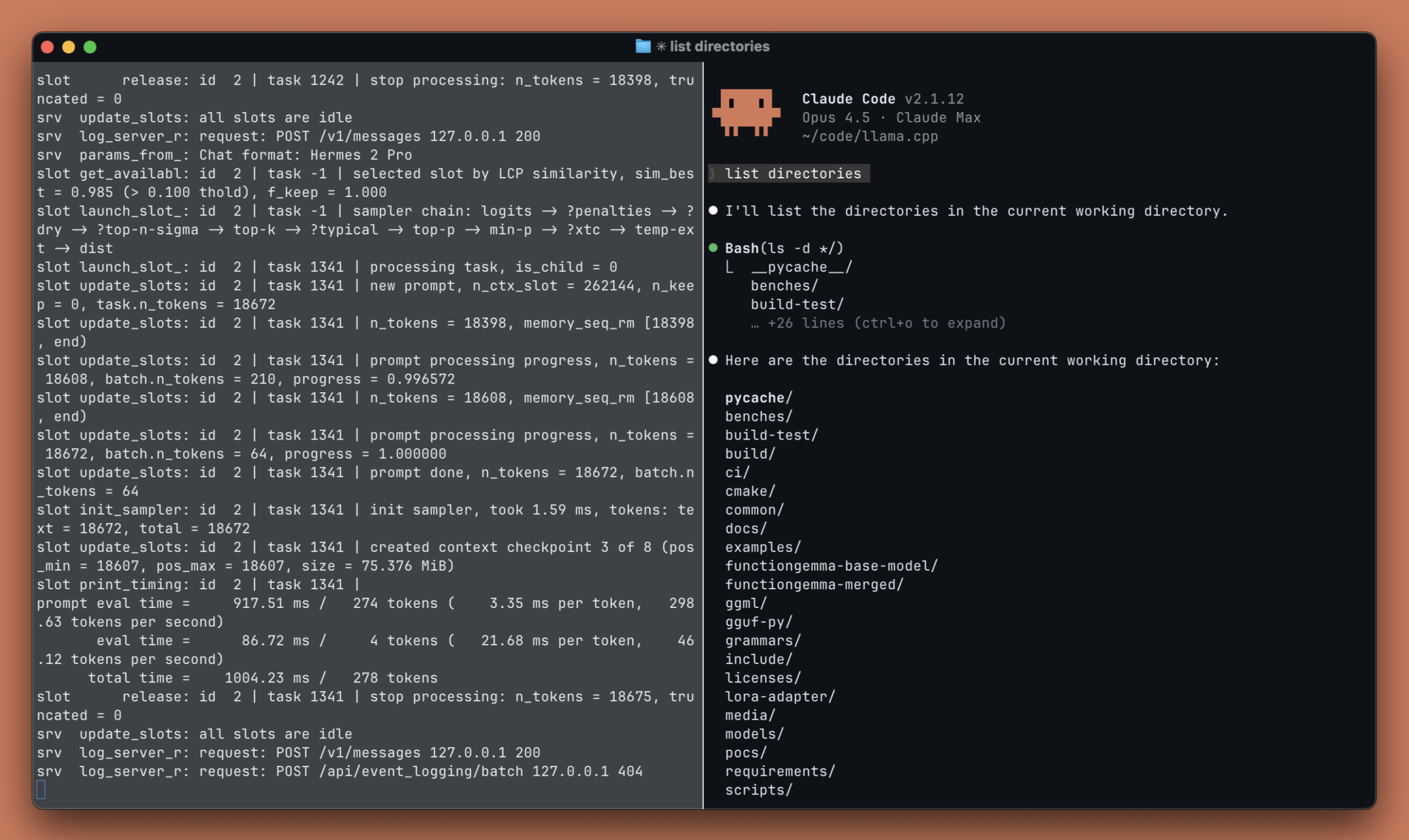

The line between local and cloud AI inference didn’t just blur, it evaporated. With native Anthropic Messages API support landing in llama.cpp, you can now point Claude Code at your laptop and watch it orchestrate local models as if they were Claude itself. This isn’t a hack. It’s not a proxy. It’s a first-class feature merged in PR #17570 that converts Anthropic’s API format to OpenAI’s internally, reusing llama.cpp’s existing inference pipeline.

For developers who’ve been straddling the fence between cloud convenience and data privacy, this changes the calculus entirely.

The Technical Blueprint: How It Works

The implementation is elegantly simple. The llama.cpp server exposes a new endpoint at /v1/messages that speaks fluent Anthropic. When you send a request, it gets translated on-the-fly into the OpenAI format that llama.cpp already understands, processed through the quantized model running on your hardware, then streamed back using Anthropic’s Server-Sent Events format with proper event types (message_start, content_block_delta, etc.).

curl http://localhost:8080/v1/messages \

-H "Content-Type: application/json" \

-d '{

"model": "local-model",

"max_tokens": 1024,

"messages": [{"role": "user", "content": "Hello!"}]

}'That’s it. No middleware. No Claude router workaround. The server handles the entire Anthropic protocol, including features that make Claude Code tick: tool use with tool_use and tool_result content blocks, token counting via /v1/messages/count_tokens, vision support for multimodal models, and even extended thinking parameters for reasoning models.

Claude Code Without Claude: The Killer App

The Reddit thread announcing this feature lit up with developers immediately connecting the dots to Claude Code. One commenter noted they now have “no excuse not to try Claude Code with minimax m2.1 on my M3 Ultra.” The setup is trivial:

# Start server with a capable model

llama-server -hf unsloth/Qwen3-Next-80B-A3B-Instruct-GGUF:Q4_K_M

# Run Claude Code with local endpoint

ANTHROPIC_BASE_URL=http://127.0.0.1:8080 claudeClaude Code, Anthropic’s agentic coding CLI, was designed specifically for their models and communicates via the Messages API, not the more common Chat Completion API. This meant that until now, using it required an Anthropic API key and sending your code to their servers. With llama.cpp’s new support, the tool becomes model-agnostic infrastructure.

The Context Problem Nobody’s Talking About

Here’s where the excitement meets reality. One Reddit commenter dropped a sobering observation: “Wait til you see that claude codes eats up 12k of context right from the start.” This isn’t a limitation of llama.cpp, it’s a characteristic of how Claude Code operates, aggressively consuming context window to maintain conversation state and code awareness.

When you’re paying per token to Anthropic, that’s expensive. When you’re running a quantized 80B model on your own hardware, it’s just memory and latency. But it highlights a critical mismatch: agentic tools built for massive cloud models assume effectively infinite context. Local models, even powerful ones, face hard limits.

The llama.cpp implementation does support token counting (/v1/messages/count_tokens returns {"input_tokens": 10}), but it can’t change how Claude Code uses context. Developers will need to experiment with context sizes and model capabilities to find workable configurations.

The Death of API Moats

This development represents something larger than a feature addition. It’s a direct assault on the API compatibility moat that commercial AI providers have been building. Anthropic’s Messages API was their proprietary interface, a lock-in mechanism that tied developers to their models. Now it’s just another protocol that open-source infrastructure can speak.

The implications ripple outward:

– For startups: You can prototype with Claude’s API, then switch to local models for production without changing your tooling

– For enterprises: Sensitive code never leaves your perimeter, but developers keep their preferred workflows

– For Anthropic: Their ecosystem tools become more valuable while their core API becomes commoditized

One Reddit user captured the sentiment perfectly, expressing fatigue with “open” tools that act as “sales funnel to paid products made by VC funded company.” They dismissed alternatives like OpenCode (security issues) and Vibe (unprofessional naming), positioning llama.cpp’s approach as the genuine article: open infrastructure that doesn’t pretend to be something it’s not.

Technical Depth: What’s Actually Supported

The feature parity is impressive. The implementation includes:

- Full Messages API:

POST /v1/messageswith streaming support - Token counting:

POST /v1/messages/count_tokens - Tool use: Function calling with proper content block structure

- Vision: Image inputs via base64 or URL (requires multimodal model)

- Extended thinking: Support for reasoning models via

thinkingparameter - Streaming: Proper Anthropic SSE event types throughout the lifecycle

This isn’t a partial implementation. It’s a complete protocol translation layer that passes through to llama.cpp’s inference pipeline, meaning you get all the performance benefits of quantized GGUF models running on optimized hardware backends (CPU, CUDA, Vulkan, Metal).

The Community Response: Relief and Skepticism

The reaction on forums has been a mix of excitement and hard-won pragmatism. Developers who’ve been burned by “open” tools that turned into commercial traps see this as genuine progress. Others note that getting Claude Code working with local models is just the first step, the real challenge is matching the model quality and reliability of Claude itself.

The GitHub repository shows active development, with noname22 contributing the initial feature. The implementation reuses existing infrastructure intelligently, suggesting it will be maintained as part of the core server functionality rather than an abandoned side project.

What This Signals for AI Infrastructure

We’re witnessing the collapse of API silos. First OpenAI compatibility became table stakes. Now Anthropic’s protocol is being absorbed into the local inference stack. The pattern is clear: any successful commercial API will inevitably be replicated in open-source infrastructure.

This puts pressure on AI providers to compete on model quality and specialized capabilities rather than ecosystem lock-in. It also accelerates the trend toward hybrid workflows where developers use cloud APIs for prototyping and local models for production, with tooling that works seamlessly across both.

The llama.cpp project, already the dominant local inference engine, is cementing its position as the universal adapter for AI applications. If it speaks HTTP and JSON, llama.cpp will eventually support it, running on everything from Raspberry Pis to multi-GPU servers.

Getting Started Without the Hype

If you want to try this today, grab a recent build of llama.cpp and a capable model. The Qwen3-Next-80B-A3B-Instruct-GGUF at Q4_K_M quantization strikes a good balance between capability and resource requirements. Start the server, point Claude Code at it, and expect to iterate on model selection and context sizing.

The feature is new. The tooling is evolving. But the direction is unmistakable: the walls between AI platforms are coming down, and developers are the winners.

Just don’t expect your local model to magically match Claude’s performance out of the box. The API is the easy part. The model is the hard part. But at least now you can swap them independently.