Tencent’s latest release exposes a growing fracture in AI’s “bigger is better” orthodoxy. The Youtu-LLM-2B model packs just 1.96 billion parameters, smaller than most smartphone-friendly models, yet handles 128K token contexts and executes complex agent tasks better than competitors four times its size. This isn’t incremental improvement, it’s a direct challenge to the parameter-count arms race that has dominated LLM development.

The 2B Parameter Elephant in the Room

For years, the narrative has been straightforward: scale parameters, scale performance. Youtu-LLM-2B throws a wrench into that simple equation. At 1.96B parameters, it’s a fraction of Llama 3.1-8B’s size, yet the benchmarks tell a different story.

The model uses Multi-head Latent Attention (MLA), an architecture borrowed from DeepSeek-V2 that compresses Key-Value caches into latent vectors. This isn’t just academic cleverness, it reduces memory overhead by 93.3% compared to standard attention mechanisms, enabling that massive context window on commodity hardware.

What the Benchmarks Actually Show

The Hugging Face model card reveals performance that should make larger models uncomfortable:

Commonsense & Reasoning:

– MMLU-Pro: 48.4% (beats Llama 3.1-8B’s 36.2%)

– HLE-MC: 17.4% (outperforms Qwen3-4B’s 15.0%)

Coding Tasks:

– HumanEval: 64.6% (crushes Qwen3-1.7B’s 49.9%)

– LiveCodeBench v6: 9.7% (nearly double Qwen3-4B’s 6.9%)

Agentic Performance (APTBench):

– Code tasks: 37.9% (vs. Llama 3.1-8B’s 23.6%)

– Deep Research: 38.6% (vs. Qwen3-1.7B’s 28.5%)

Agentic Performance (APTBench):

– Tool Use: 64.2% (competitive with Qwen3-4B’s 65.8%)

These aren’t cherry-picked wins. Youtu-LLM-2B consistently punches above its weight across diverse tasks, particularly in agentic scenarios where larger models often stumble.

Why Agentic Capabilities Matter More Than Raw Parameter Count

The Reddit discussion around Youtu-LLM-2B zeroes in on one word: “agentic.” Developers aren’t impressed by benchmark scores alone, they care about end-to-end task completion. The sentiment on r/LocalLLaMA suggests this model fills a critical gap: small enough to run locally without a data center, yet capable enough to handle multi-step workflows.

One developer noted they’ve been “repeatedly finetuning Qwen3-1.7B and Qwen3-4B for agentic tasks”, but Youtu-LLM-2B “beats both substantially.” The implication is clear: parameter efficiency beats parameter count when you need deterministic, tool-using agents that can fit on a single GPU.

The model’s architecture supports this focus. With 32 layers, 16 attention heads, and clever MLA dimensioning (1,536 rank for queries, 512 for keys/values), it maintains fast inference while preserving representational capacity where it matters. The 128,256 vocabulary size suggests aggressive tokenization efficiency, further reducing computational overhead.

The Deployment Reality Check

Here’s where Youtu-LLM-2B gets disruptive. The model runs comfortably in 8-12GB VRAM with standard quantization. That puts it in the same deployment class as Phi-4-mini and Llama 3.2-3B, but with capabilities that approach 8B+ models.

The Hugging Face integration is already mature. Loading the model takes three lines:

from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("tencent/Youtu-LLM-2B", trust_remote_code=True)

model = AutoModelForCausalLM.from_pretrained("tencent/Youtu-LLM-2B", device_map="auto", trust_remote_code=True)

The instruct model includes a “Reasoning Mode” toggled via enable_thinking=True in the chat template, activating Chain-of-Thought for complex logic. This isn’t bolted-on prompting, it’s native to the model’s generation pipeline.

For production deployment, Tencent provides vLLM integration with tool-calling support:

vllm serve <model_path> --trust-remote-code --enable-auto-tool-choice --tool-call-parser hermes

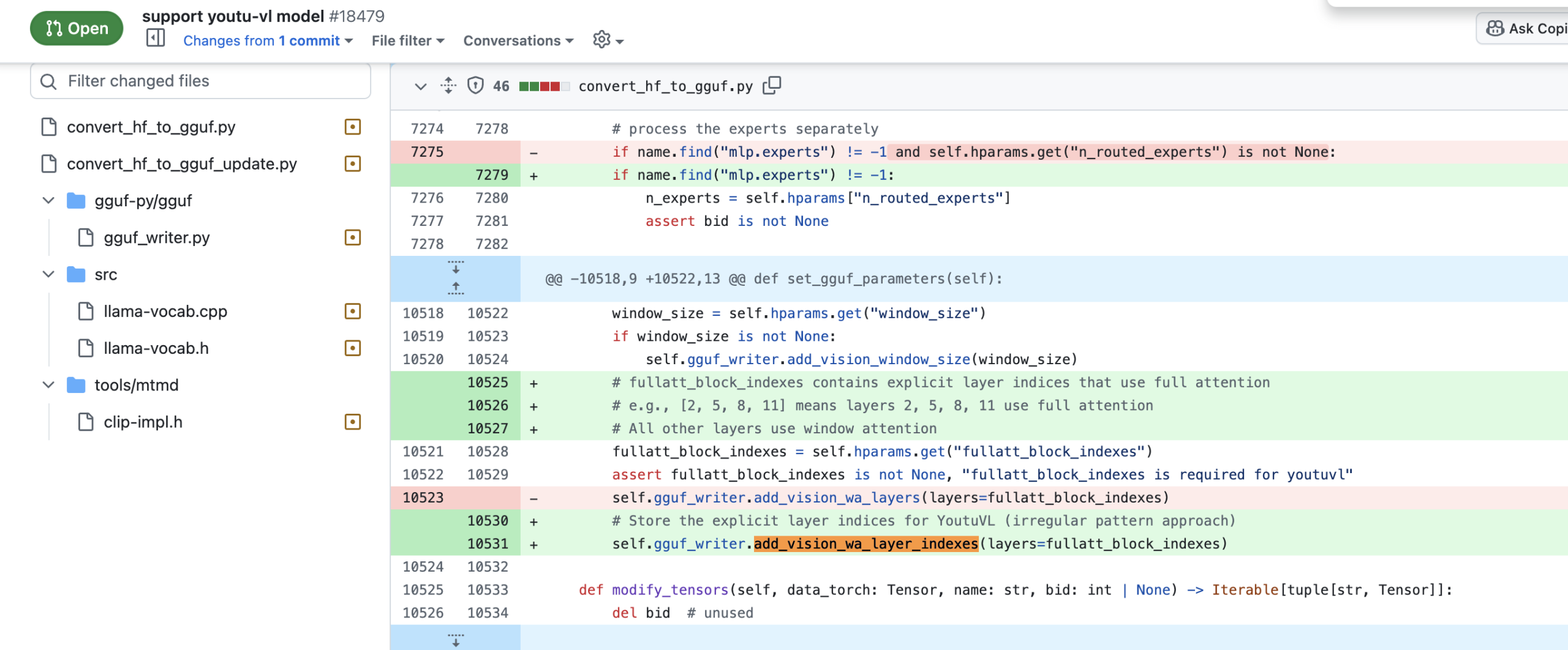

The llama.cpp pull request #18479 shows active community work to optimize inference for edge devices, with developers debating variable naming conventions for layer indexing, exactly the kind of nitty-gritty optimization that matters when you’re targeting CPU inference.

The Scaling Law Rebellion

Youtu-LLM-2B arrives at an inflection point. The AI community is increasingly questioning whether trillion-parameter models are sustainable or necessary. Training compute is plateauing for major labs, and enterprise buyers are demanding ROI, not just capability demos.

This model suggests a different path: architectural innovation over brute-force scaling. MLA attention, efficient tokenization, and targeted training on agentic tasks create a multiplier effect on effective performance. It’s not about having more parameters, it’s about using them intelligently.

The controversy lies in what this means for AI strategy. If 2B models can handle most real-world agent tasks, why invest in 70B behemoths? The answer might be that you don’t, especially for applications requiring low latency, privacy, or edge deployment.

The Fine Print

None of this is to say Youtu-LLM-2B is a universal replacement. It won’t out-reason GPT-4 on abstract philosophy or write better poetry than Claude. But for the bread-and-butter of AI applications, data analysis, code generation, API orchestration, document processing, it delivers 90% of the capability at 10% of the resource cost.

The model’s license (listed as “other” on Hugging Face) warrants scrutiny for commercial use. And while the 128K context is impressive, the NIAH (Needle In A Haystack) score of 98.8% trails Llama 3.1-8B’s 99.8%, suggesting slight trade-offs in extreme long-context retrieval.

What This Signals for AI Development

Tencent’s move reflects a broader shift toward efficiency. As one developer put it in the Reddit thread, the model “looks rly good for agentic” work, a sentiment echoed by others tired of babysitting bloated models through complex workflows.

The technical community’s rapid adoption tells its own story. Within days of release, developers were already fine-tuning it on custom agent datasets, and the llama.cpp integration progressed through multiple review rounds in under a week. When tooling moves this fast, it’s responding to genuine need.

Youtu-LLM-2B proves that the next wave of AI innovation won’t come from adding more parameters, it will come from architectures that do more with less. For startups, enterprises, and individual developers, that’s a future worth building toward.

The question isn’t whether this model is perfect. It’s whether the AI industry is ready to prioritize efficiency over scale. Tencent’s betting the answer is yes. Based on the early community response, they might be right.