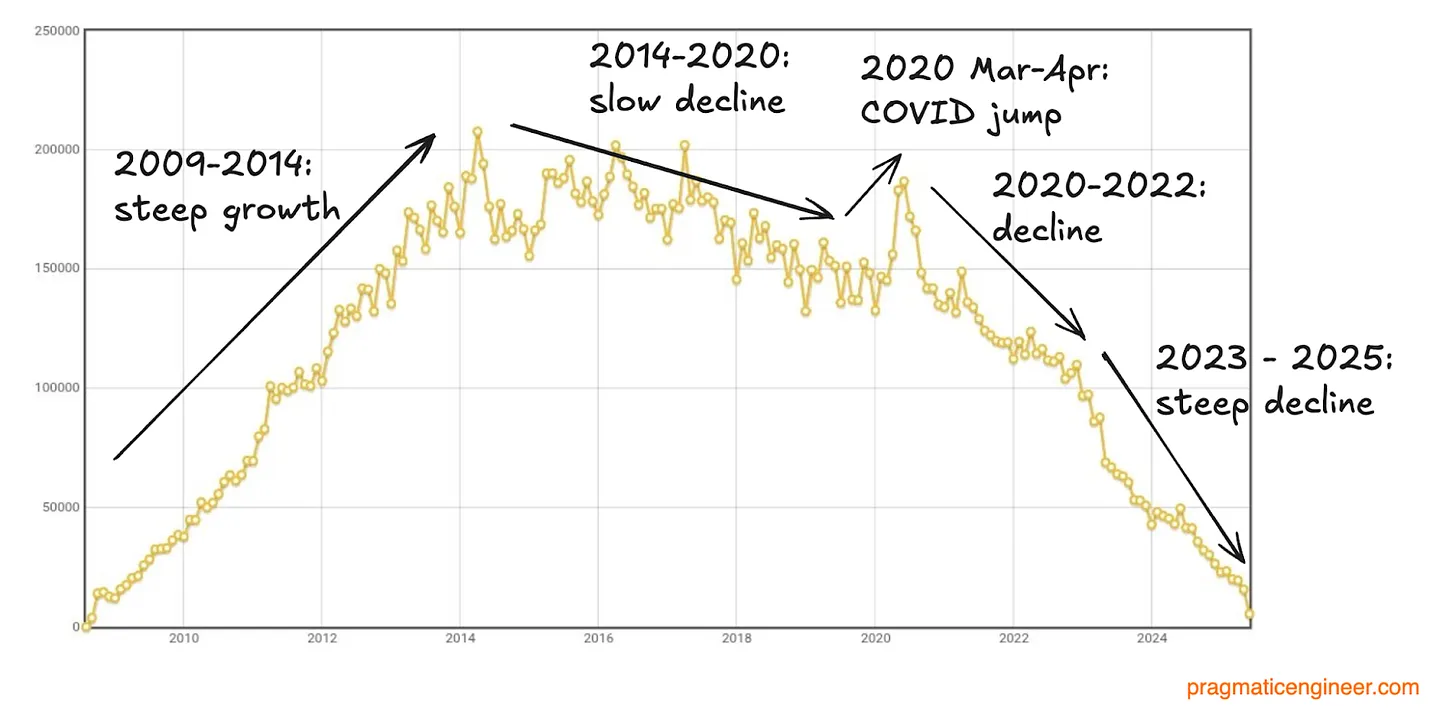

The numbers are brutal. Monthly questions on Stack Overflow cratered from 200,000 in 2014 to just 3,862 in December 2025, a 78% drop from the previous year alone. For a platform that defined how developers learned for over a decade, this isn’t a decline. It’s an extinction-level event. And many developers are cheering.

The schadenfreude is palpable across developer forums. After years of navigating what many describe as a hostile, gatekeeping culture, programmers are declaring that Stack Overflow deserved this reckoning. The platform that once democratized coding knowledge became, in the eyes of many, a cautionary tale about how community moderation can devolve into digital tyranny.

The Data Doesn’t Lie: A Platform in Freefall

Stack Overflow’s own Data Explorer reveals a collapse that accelerated sharply after ChatGPT’s arrival in late 2022. Go back to early 2023, and the platform was fielding 100,000 questions per month. By January 2025, that had shrunk to 21,000; by December, it had fallen below 4,000. The trend line isn’t just downward; it’s catastrophic.

The correlation with AI adoption is impossible to ignore. Developer surveys now show that 84% of developers use or plan to use AI coding tools. When you can get an instant answer from ChatGPT, Claude, or GitHub Copilot without waiting for human validation, why endure the ritual humiliation of posting on Stack Overflow?

But the data only tells half the story. The real venom comes from developers who lived through the platform’s culture wars.

The Toxicity Tax: When “Quality Control” Became Community Abuse

The sentiment across developer communities is remarkably consistent. On Reddit and Hacker News, veteran programmers don’t just acknowledge the decline; they actively celebrate it as karmic justice.

One developer, who started using the platform in 2020, describes a Kafkaesque experience: questions downvoted within seconds, moderators editing posts for punctuation rather than substance, and responses that humiliate rather than help. The reputation system, designed to reward expertise, instead created a class of “all-knowing” moderators who treated question-asking as a privilege to be earned through suffering.

Another programmer revealed a workaround that speaks volumes about the culture: they would post questions from an alt account, deliberately stating that the problem was “unsolvable.” This ego-baiting tactic reliably drew in experts eager to prove them wrong. Think about that: developers had to psychologically manipulate the community just to get help.

The gatekeeping extended beyond mere attitude. Stack Overflow’s strict duplicate question policies meant similar problems were often closed before they could be answered, even when the “original” question was years old and used outdated syntax. Beginners asking about Java, JavaScript, or Python reported particularly hostile treatment, while communities around older languages like C seemed more welcoming, perhaps because they hadn’t been poisoned by the same volume of reputation-chasing.

The AI Alternative: No Attitude, Just Answers

The appeal of AI assistants isn’t just speed; it’s the complete absence of judgment. As one developer put it, AI offers “infinite patience” and can be told to respond in a style that accommodates insecurity and self-doubt. You can ask the same basic question ten different ways without being told to “learn to code” or having your question marked as a duplicate.

This is the emotional core of Stack Overflow’s collapse. For years, developers tolerated the platform’s toxicity because it was the best option. Then AI arrived and offered the same information without the emotional tax. No wonder the common refrain is that “people were just happy to finally have a tool that didn’t tell them their questions were stupid.”

The contrast is stark. Stack Overflow’s moderation philosophy prioritized content quality above all else, creating a library of pristine but intimidating knowledge. AI assistants prioritize the user experience, delivering personalized help that adapts to the developer’s skill level and context.

The Great Irony: Feeding the Beast That Kills You

Here’s where the story takes a darkly comedic turn. In 2024, Stack Overflow signed a partnership with OpenAI to license its vast archive for training data. The company described it as a way to “strengthen the world’s most popular large language models.” In reality, they were selling the rope for their own hanging.

The company is doing fine financially: revenue hit $115 million last year, up 17%. It is successfully monetizing its historical content while its live community withers. It’s a business model that treats the community as a content farm for AI training, not as valuable members worth preserving.

But the contradictions are glaring. Stack Overflow still bans AI-generated answers on its platform, claiming it prevents reputation gaming. Yet they’re happy to sell their human-generated content to AI companies. They’ve introduced an “AI Assist” feature while forbidding users from using AI to assist each other. The cognitive dissonance is staggering.

The Knowledge Commons Collapse: What We’re Actually Losing

This is where the controversy gets serious. Developers celebrating Stack Overflow’s demise may be missing the larger catastrophe. When we move from public Q&A to private AI chats, we lose something fundamental: a searchable, peer-reviewed, evolving knowledge base.

AI assistants don’t cite sources reliably. They don’t show their work. They hallucinate. And most importantly, their conversations exist in private chat histories, not indexed forums where the next developer with the same problem can find them. We’re trading a public library for a series of private tutoring sessions.

As one Hacker News commenter noted, “Stack Overflow was by far the leading source of high-quality answers to technical questions. What do LLMs train off of now?” The answer is increasingly: other AI-generated content, creating a feedback loop of potential degradation.

The platform’s decline creates a data void. Many programming language popularity metrics, research studies, and even AI training datasets relied on Stack Overflow’s fresh content. With new questions drying up, we’re losing real-time insight into what developers are actually struggling with today.

The Reputation Game’s Final Level

Stack Overflow’s gamification system (reputation points, badges, moderator privileges) was supposed to incentivize quality contributions. Instead, it created a class system in which high-reputation users controlled the narrative, often prioritizing their status over helping newcomers.

The AI revolution exposes this system’s fundamental flaw: reputation is meaningless when an AI can provide better answers faster. Who cares about earning 10,000 reputation points when ChatGPT gives you the solution in seconds? The entire social economy of Stack Overflow has been devalued overnight.

This explains the vitriol from some veteran users. Their carefully constructed status, built over years of community participation, is now worthless in a world where AI can replicate their expertise. The platform’s anti-AI stance wasn’t just about quality; it was about preserving a social hierarchy that AI made obsolete.

What Comes Next: A Fragmented Future

Stack Overflow isn’t going to disappear completely. Its archive remains valuable, and search engines will continue surfacing its historical answers. But its role as a living community is over.

Developers are fragmenting across alternative platforms:

– Reddit programming communities offer more informal, real-time discussion

– Discord servers provide immediate help without the reputation games

– GitHub Discussions integrate directly with code repositories

– Private Slack/Teams channels create safe spaces for “stupid” questions

These platforms lack Stack Overflow’s centralized searchability but make up for it in accessibility and psychological safety. The trade-off is clear: better user experience at the cost of knowledge centralization.

Meanwhile, Stack Overflow’s leadership is attempting to pivot. They’ve softened moderation policies, integrated AI features, and tried to rebrand as a “broader developer community.” But it’s likely too late. The trust is gone, and the users have already found alternatives that don’t treat them like problems to be moderated.

The Uncomfortable Truth

Stack Overflow’s collapse isn’t just a story about AI disruption. It’s a parable about community governance. The platform’s moderation culture, born from genuine desires to maintain quality, created such a hostile environment that developers were primed to abandon it the moment a viable alternative appeared.

The AI didn’t kill Stack Overflow. It was the executioner, but the platform had been on death row for years, sentenced by its own community’s toxicity. Developers aren’t just choosing AI because it’s faster; they’re choosing it because it doesn’t make them feel like idiots for asking questions.

This leaves us with a troubling question: If AI assistants are training on Stack Overflow’s historical content, but that content is no longer being refreshed with new problems and solutions, how long until the AI’s knowledge becomes stale? We’re potentially entering an era where programming help is fast, friendly, and fundamentally frozen in time.

The celebration of Stack Overflow’s demise might be premature. We could be trading one set of problems for another, exchanging gatekeeping humans for confidently wrong AI, and losing our collective knowledge base in the process.

The platform that taught a generation to code has become a case study in how not to build a community. And the tool that replaced it might just be teaching us that convenience comes with hidden costs.

The data is clear, the community has spoken, and the verdict is harsh: Stack Overflow’s obsession with quality control created a culture so toxic that developers would rather risk AI hallucinations than face human humiliation. That’s not just a business failure; it’s a failure of community engineering that the entire tech industry should study carefully.