When an anonymous Reddit user recently posted about their “temporary” setup, two RTX 5090s plus an RTX Pro 6000 Blackwell, casually power-limited because apparently 500W each “just isn’t enough”, it revealed something fundamental about the AI inference landscape. We’re witnessing the rise of consumer hardware so powerful it’s giving datacenter-grade equipment an identity crisis.

The original Reddit post showed the enthusiast running a WRX80E motherboard with a Threadripper 3975WX and 512GB of DDR4 RAM, with power-limited GPUs totaling over 1,500W. Community requests poured in for benchmark comparisons between multi-GPU configurations, including one user asking for a test of Qwen3 30B A3B with 4-bit AWQ quantization to see “how well they scale” in practice.

When Consumer Hardware Challenges the Datacenter

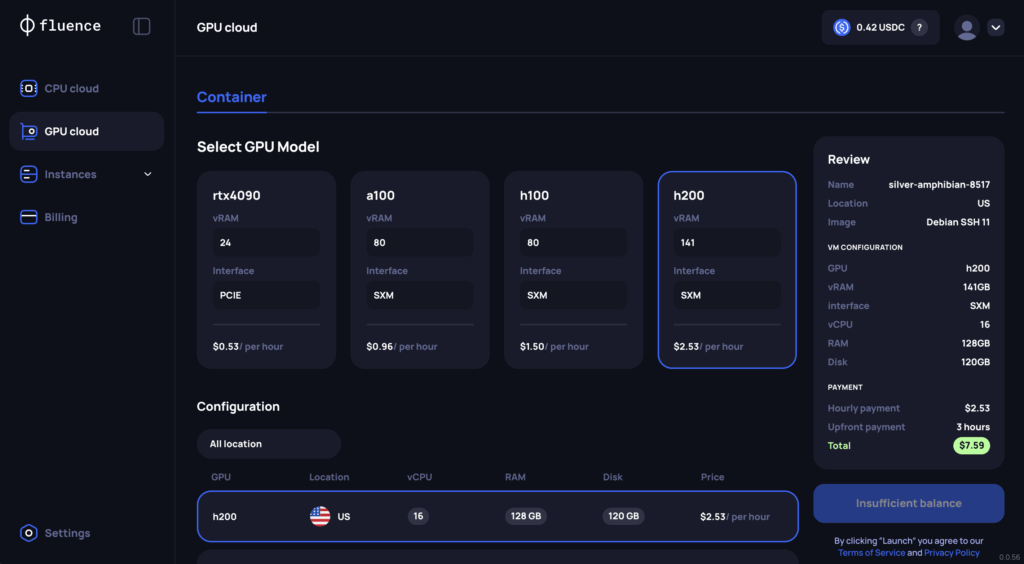

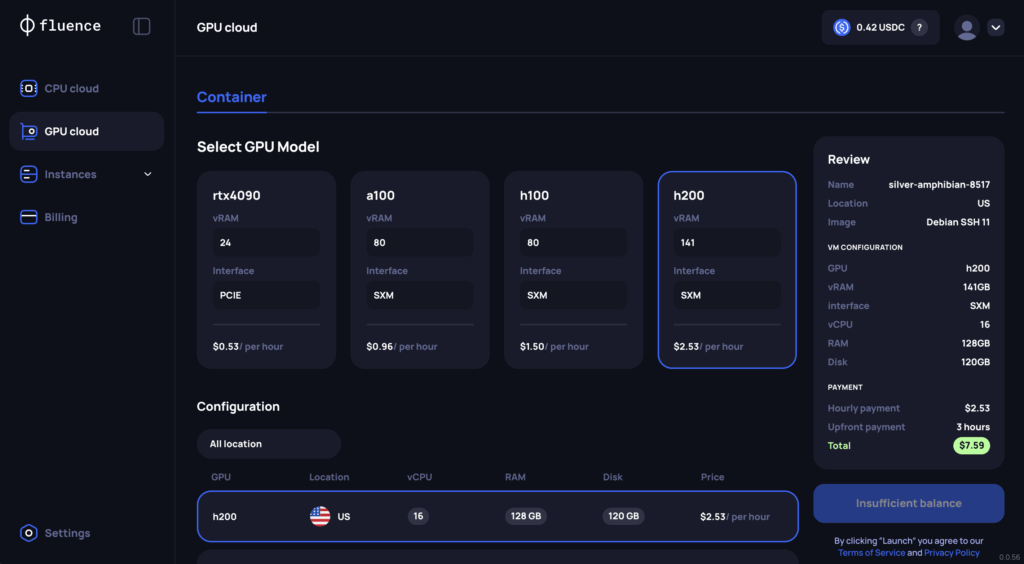

The economics are getting absurd. According to Fluence Network’s analysis, “a dual RTX 5090 configuration even outperforms a single NVIDIA H100 in sustained LLM inference” while costing a fraction of the price. The RTX 5090’s specifications tell the story: 21,760 CUDA cores, 32 GB of GDDR7 memory, and 1,792 GB/s bandwidth at a $1,999 MSRP versus the H100’s enterprise pricing that starts in the five figures.

This isn’t just theoretical. The spec-sheet advantages carry over to real-world AI workloads. For Flux 2 image generation, testing shows that FP8 precision on the RTX 5090 delivers 40-50% faster generation than FP16 with nearly identical output quality. At 1024×1024 resolution, that means 6.2 seconds versus 11.4 seconds per image, about 46% less waiting, the kind of performance difference that changes workflows from iterative to interactive.
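
A "percent faster" figure can be read as time saved or as extra throughput, and the two differ a lot. The short sketch below works both out from the two timings quoted above; the function name and output keys are illustrative, not taken from any benchmark tool.

```python
def compare_latencies(fast_s: float, slow_s: float) -> dict:
    """Compare two per-image generation times given in seconds."""
    if fast_s <= 0 or slow_s <= 0:
        raise ValueError("latencies must be positive")
    return {
        # Share of the slower run's wall-clock time that is saved.
        "time_saved_pct": (slow_s - fast_s) / slow_s * 100,
        # Extra images produced in the same amount of time.
        "throughput_gain_pct": (slow_s / fast_s - 1) * 100,
        "images_per_minute_fast": 60 / fast_s,
        "images_per_minute_slow": 60 / slow_s,
    }


# Timings quoted in the article for 1024x1024 generation (FP8 vs FP16).
summary = compare_latencies(fast_s=6.2, slow_s=11.4)
for name, value in summary.items():
    print(f"{name}: {value:.1f}")
```

Read this way, the quoted timings mean roughly 46% less time per image, or about 84% more images in the same time, which is why the jump feels larger in practice than "40-50%" suggests.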

The Blackwell architecture’s fifth-generation Tensor Cores with native FP8 support mean these consumer cards aren’t just brute-forcing their way through inference; they’re doing it efficiently. The RTX 5090’s memory bandwidth advantage, roughly 1.8 times the RTX 4090’s 1,008 GB/s, proves especially critical for LLM inference, where memory bottlenecks often dictate performance ceilings.
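
To see why bandwidth sets the ceiling, here is a minimal back-of-envelope sketch. It assumes a dense model at batch size 1 where every generated token streams all the weights from VRAM once, and it ignores KV-cache traffic, compute time, and kernel overhead. The 16 GB weight footprint is a hypothetical value chosen only for illustration.

```python
def decode_ceiling_tokens_per_s(bandwidth_gb_s: float, weights_gb: float) -> float:
    """Rough upper bound on single-stream decode speed.

    Assumes each token requires reading all model weights once,
    so the bound is simply bandwidth divided by weight size.
    """
    if weights_gb <= 0:
        raise ValueError("weights_gb must be positive")
    return bandwidth_gb_s / weights_gb


# Bandwidth figures as quoted in the article.
BANDWIDTH_GB_S = {"RTX 4090": 1008.0, "RTX 5090": 1792.0}
WEIGHTS_GB = 16.0  # hypothetical model footprint, not from the article

ceilings = {
    gpu: decode_ceiling_tokens_per_s(bw, WEIGHTS_GB)
    for gpu, bw in BANDWIDTH_GB_S.items()
}
ratio = ceilings["RTX 5090"] / ceilings["RTX 4090"]

for gpu, tps in ceilings.items():
    print(f"{gpu}: at most ~{tps:.0f} tokens/s")
print(f"bandwidth-bound advantage: {ratio:.2f}x")
```

Real throughput will land below these ceilings, but under this model the ratio between the two cards tracks the bandwidth ratio regardless of which model size is plugged in.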

The Mad Science of Multi-GPU Consumer Rigs

What makes setups like the Reddit user’s configuration particularly interesting isn’t just the raw horsepower; it’s the scaling challenges. As one commenter noted, they’re curious about comparing “2×3090 under tensor parallelism to 1×5090 with a model under 24gb.” In theory the two 3090s have more combined bandwidth, but they “in practice don’t scale linearly.”
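
The commenter's question can be framed as a simple break-even calculation. The sketch below assumes the RTX 3090's published 936 GB/s memory bandwidth (a figure not stated in the original thread) and models tensor-parallel losses with a single hypothetical `scaling_efficiency` factor; real overheads depend on the interconnect, the model, and the inference engine.

```python
RTX_3090_BW_GB_S = 936.0   # published spec; an assumption not taken from the article
RTX_5090_BW_GB_S = 1792.0  # as quoted in the article


def effective_bandwidth(n_gpus: int, per_gpu_bw: float, scaling_efficiency: float) -> float:
    """Aggregate bandwidth after a flat, hypothetical scaling penalty."""
    if not 0.0 < scaling_efficiency <= 1.0:
        raise ValueError("scaling_efficiency must be in (0, 1]")
    return n_gpus * per_gpu_bw * scaling_efficiency


# Efficiency two 3090s would need just to match one 5090 on bandwidth alone.
break_even_efficiency = RTX_5090_BW_GB_S / (2 * RTX_3090_BW_GB_S)
print(f"break-even scaling efficiency: {break_even_efficiency:.1%}")

for eff in (1.0, 0.9, 0.8, 0.7):
    pair = effective_bandwidth(2, RTX_3090_BW_GB_S, eff)
    verdict = "ahead of" if pair > RTX_5090_BW_GB_S else "behind"
    print(f"efficiency {eff:.0%}: 2x3090 ~ {pair:.0f} GB/s, {verdict} 1x5090")
```

Under these assumptions the pair of 3090s only wins if tensor parallelism keeps about 96% of the combined bandwidth, which leaves very little room for communication overhead.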

This gets to the heart of why enthusiast builds matter: they’re real-world stress tests of distributed inference architectures that most users only encounter in theory. When you’ve got power-limited consumer cards working alongside professional-grade hardware like the RTX Pro 6000 Blackwell, you’re essentially testing the practical limits of heterogeneous GPU computing.

The cooling solutions alone deserve mention. High-end RTX 5090 models like the Asus ROG RTX 5090 Astral OC feature vapor chambers and triple axial-tech fans just to handle the 575W TDP under load, while liquid-cooled variants from MSI and Asus push the boundaries of what’s possible in consumer form factors. One has to wonder: at what point does this stop being a “desktop” and start becoming a specialized compute appliance?

The Surprising Economics of Local Inference

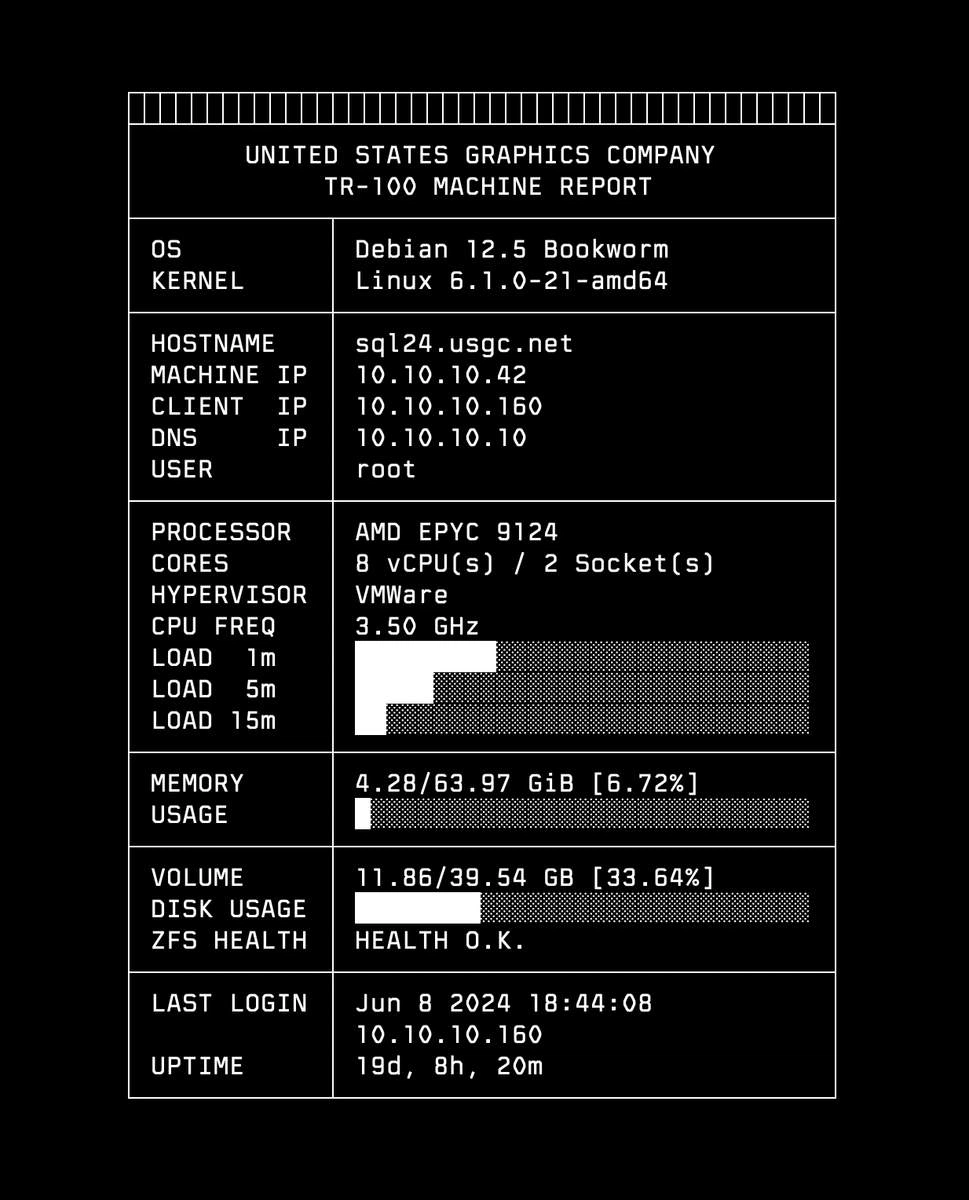

The cloud versus local debate gets complicated when consumer hardware reaches this level. According to Fluence Network’s marketplace data, renting RTX 4090 VMs starts at $0.64 per hour from providers like TensorDock, while RTX 5090 instances are becoming available at similar price points. Compare that to the upfront cost of building your own rig, and the break-even math becomes fascinating.

For developers running inference continuously, the economics tilt toward ownership surprisingly quickly. A dual RTX 5090 setup costing ~$4,000 pays for itself after about 3,125 hours of renting two comparable GPUs at $0.64 per hour each, roughly 130 days of 24/7 operation, before counting electricity and the rest of the system. For research labs, AI startups, or even serious hobbyists working on long-running training jobs, that’s not an unreasonable timeframe.
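
The 3,125-hour figure appears to assume two rented GPUs at $0.64 per hour each, since $4,000 / (2 × $0.64) = 3,125. The sketch below reproduces that arithmetic and optionally subtracts electricity; the 1.2 kW draw and $0.15 per kWh rate in the second call are hypothetical placeholders, not measurements from the Reddit build.

```python
def break_even_hours(
    hardware_cost_usd: float,
    cloud_rate_per_gpu_hour: float,
    n_gpus: int,
    power_kw: float = 0.0,
    electricity_usd_per_kwh: float = 0.0,
) -> float:
    """Hours of use after which owning beats renting the same number of GPUs."""
    cloud_hourly = cloud_rate_per_gpu_hour * n_gpus
    local_hourly = power_kw * electricity_usd_per_kwh
    hourly_saving = cloud_hourly - local_hourly
    if hourly_saving <= 0:
        raise ValueError("local running cost meets or exceeds the cloud rate")
    return hardware_cost_usd / hourly_saving


# The article's numbers: ~$4,000 of GPUs versus two instances at $0.64/hour.
hours = break_even_hours(4000, 0.64, n_gpus=2)
print(f"{hours:.0f} hours, about {hours / 24:.0f} days of 24/7 use")

# Same comparison with hypothetical electricity costs included.
hours_with_power = break_even_hours(
    4000, 0.64, n_gpus=2, power_kw=1.2, electricity_usd_per_kwh=0.15
)
print(f"{hours_with_power:.0f} hours, about {hours_with_power / 24:.0f} days with power")
```

Even a modest power bill pushes the break-even point out by several weeks, and the figure still excludes the motherboard, CPU, RAM, and power supply.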

The real advantage, however, might be in workflow rather than raw cost. Local inference eliminates data transfer latency, provides predictable performance without noisy neighbors, and offers complete control over the environment. When you’re fine-tuning models or running sensitive inference workloads, these intangible benefits can outweigh pure financial calculations.

The Blackwell Architecture’s Secret Sauce

The RTX Pro 6000 Blackwell in these enthusiast builds represents the professional counterpart to the consumer 5090, featuring a nearly fully enabled GB202 GPU with 24,064 CUDA cores and an incredible 96GB of GDDR7 memory. As ThePCEnthusiast notes, this professional card costs “more than four times the price of an RTX 5090” but offers capabilities that hint at where consumer hardware might be headed.

Blackwell’s architectural improvements extend beyond raw specs. The DEV Community analysis highlights that “Blackwell Ultra’s Tensor Cores deliver ~1.5× more FP4 throughput” versus previous generations, with “~7.5× the low-precision throughput on comparable workloads, especially transformer inference.”

This matters because transformer inference, the backbone of modern LLMs, benefits disproportionately from these architectural optimizations. The combination of higher memory bandwidth, specialized tensor cores, and improved data reuse mechanisms means consumer Blackwell cards punch far above their weight class for specific AI workloads.

Practical Implications for Developers

For developers building local AI applications, these hardware advances translate into tangible capabilities. The RTX 5090’s 32GB VRAM means you can run larger models entirely in GPU memory without model sharding or constant swapping. Combined with FP8 optimization, this enables workflows that were previously impractical on consumer hardware.

Consider the memory usage differences: Flux 2 Dev FP8 uses approximately 12.2GB VRAM for the base model versus 23.4GB for FP16. That 11GB difference is the margin that enables simultaneously loading ControlNets, LoRAs, and other specialized models without constant memory management gymnastics.
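
A rough VRAM budget makes that margin concrete. In the sketch below, the base-model footprints come from the figures above, while the sizes for the ControlNet, the LoRAs, and the working memory are hypothetical placeholders; actual values vary by model and resolution.

```python
VRAM_GB = 32.0  # RTX 5090
BASE_MODEL_GB = {"fp8": 12.2, "fp16": 23.4}  # Flux 2 Dev figures from the article

# Hypothetical extras, chosen only to illustrate the budget.
EXTRAS_GB = {
    "controlnet": 3.0,
    "lora_a": 0.3,
    "lora_b": 0.3,
    "activations_and_vae": 6.0,
}


def headroom_gb(precision: str, extras: dict, vram_gb: float = VRAM_GB) -> float:
    """VRAM left after the base model and all extras; negative means it does not fit."""
    return vram_gb - BASE_MODEL_GB[precision] - sum(extras.values())


budget = {precision: headroom_gb(precision, EXTRAS_GB) for precision in BASE_MODEL_GB}
for precision, left in budget.items():
    status = "fits" if left >= 0 else "does not fit"
    print(f"{precision}: {left:+.1f} GB headroom ({status})")
```

With these placeholder sizes the FP8 pipeline keeps about 10 GB spare while the FP16 one overshoots by about 1 GB, which is the kind of difference that decides whether everything stays resident or has to be swapped.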

The practical result? An enthusiast with a dual RTX 5090 setup can run sophisticated multi-model pipelines that would have required cloud instances just a generation ago. They can fine-tune larger models locally, experiment with ensemble approaches, and maintain complete data privacy, all while potentially outperforming cloud alternatives for specific workloads.

The Cloud Counterargument

Before you mortgage your house for a multi-GPU setup, consider the cloud perspective. Hyperscalers are deploying Blackwell Ultra systems delivering “~5× higher throughput per megawatt” versus previous generations, creating economies of scale that individual setups can’t match. The infrastructure supporting these deployments (liquid cooling, specialized power delivery, optimized networking) isn’t something you can replicate in a home office.

There’s also the maintenance factor. Running multiple high-end GPUs at their power limits requires serious cooling solutions, stable power delivery, and tolerance for significant heat output. The cloud abstracts all these concerns away, letting you focus on the AI workload rather than the hardware supporting it.

And let’s not forget the rapid pace of advancement. The same Reddit user who’s proudly showing off their 5090 setup today might be looking enviously at next-generation hardware in twelve months, while cloud users automatically get access to the latest infrastructure without capital expenditure.

Where This Madness Is Headed

The trend toward powerful local inference rigs reflects broader shifts in AI development. As models become more capable and specialized, the ability to run them locally, with low latency, complete data control, and predictable performance, becomes increasingly valuable.

We’re seeing the emergence of what might be called “prosumer AI workstations”: systems that blend consumer accessibility with near-datacenter capabilities. These aren’t gaming PCs with fancy graphics cards; they’re specialized compute platforms that happen to use consumer-grade components.

The implications extend beyond individual enthusiasts. Small AI startups can now prototype and deploy sophisticated models without massive cloud bills. Researchers can experiment with novel architectures without fighting for shared cluster time. Even enterprises might reconsider their inference deployment strategies when local hardware offers this level of performance.

The Verdict: Brilliant or Bonkers?

So is building a multi-thousand-dollar local inference rig a stroke of genius or fiscal insanity? The answer, unsurprisingly, is “it depends.”

For workflows requiring continuous inference, rapid iteration, or data sensitivity, the upfront investment can make economic sense. The performance-per-dollar of current-generation consumer hardware, particularly when optimized with techniques like FP8 quantization, creates compelling value propositions.

But for most developers, the cloud’s flexibility and scalability remain unbeatable. The ability to spin up massive compute for short periods, access specialized hardware without long-term commitment, and avoid hardware maintenance overhead is worth the premium for many use cases.

What’s undeniable is that we’re living through a golden age of AI hardware accessibility. The fact that someone can reasonably ask a Reddit stranger to benchmark specific model configurations on their personal hardware, and get meaningful results, speaks volumes about how far we’ve come. Whether this represents the future of AI development or just an interesting historical anomaly remains to be seen, but for now, the mad scientists building these rigs are pushing the boundaries of what’s possible with consumer technology.

One thing’s certain: the next time someone complains about their “pleb 2x 5070ti setup”, remember that somewhere out there, an enthusiast is casually running models on hardware that would make most small datacenters jealous.