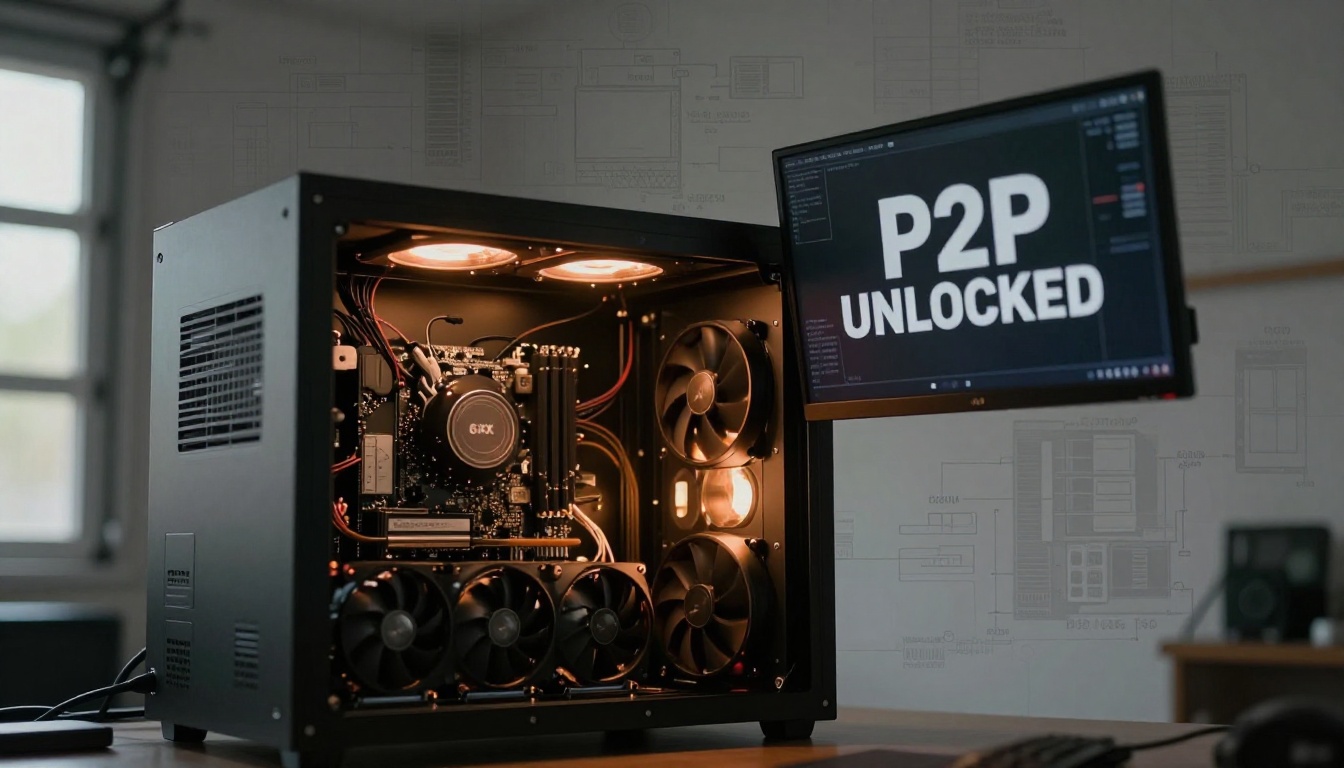

Someone just built an AI supercomputer in their garage that makes NVIDIA’s $50,000 enterprise boxes look like overpriced space heaters. The specs? Six RTX 3090s crammed onto a single motherboard, 144GB of VRAM, and modified Tinygrad drivers that unlock peer-to-peer bandwidth NVIDIA swore wasn’t possible on consumer hardware. The total cost? About what you’d pay for a single A100 on eBay.

This isn’t another “hey look at my RGB gaming rig” post. This is a technical declaration of independence from the AI hardware cartel.

The Technical Rebellion: Specs That Matter

The build centers around an ASRock ROMED-2T motherboard paired with an AMD Epyc 7502 CPU, server-grade hardware that doesn’t ask for permission to run GPUs however it damn well pleases. Six Gigabyte 3090 Gaming OC cards sit at PCIe 4.0 x16 speeds, each sipping a conservative 270W power limit. That’s 1,620W total, which sounds insane until you realize it’s still less than what a single DGX Spark pulls while delivering half its advertised performance.

Memory comes from eight DDR4 sticks running in octochannel mode at 2400MHz, nothing flashy, but enough to keep the Epyc fed. The real star is the 144GB of VRAM pool, created by stitching together six 24GB framebuffer cards. For context, that’s enough to load a 70B parameter model at FP16 precision without breaking a sweat, or train a 10B parameter diffusion model from scratch, the builder’s stated goal.

P2P Hacking: Where the Magic Happens

Here’s where things get spicy. The builder modified Tinygrad’s NVIDIA drivers to enable peer-to-peer (P2P) communication between GPUs, something NVIDIA officially reserves for its data center cards. The result? 24.5 GB/s intra-GPU bandwidth, enough to make model sharding across cards actually viable instead of a PCIe bus bottleneck nightmare.

This isn’t just flipping a BIOS switch. It requires bypassing NVIDIA’s artificial segmentation, which has been a cornerstone of their product strategy since the Titan series. The modification proves that the hardware is perfectly capable of P2P, the limitation is purely software-driven market segmentation.

The implications are massive. With reliable P2P, you can:

– Split large models across GPUs without the CPU becoming a traffic cop

– Implement efficient pipeline parallelism for training

– Run inference on models that exceed single-GPU VRAM without the latency hit of CPU offloading

One commenter on the original thread noted they’re running an 8×3090 setup with x16 to x8x8 splitters on PCIe 3.0, proving this scales beyond pristine server hardware into the janky realm of “whatever works.”

Tinygrad: The Anti-Establishment Framework

While PyTorch and JAX dominate the AI landscape, Tinygrad represents something fundamentally different: a minimalist, open-source framework that doesn’t treat consumer hardware as second-class citizens. The builder’s choice to modify Tinygrad drivers rather than wait for NVIDIA’s blessing speaks volumes about the state of AI software.

Recent research from Google and Sandia National Labs validates this approach. In a paper testing Tinygrad across multiple hardware platforms, including NVIDIA GPUs and Apple Silicon, they found consistent behavior across implementations. The framework isn’t a toy, it’s a legitimate tool for scientific computing that happens to be hackable.

The real power move here is philosophical. By using Tinygrad, the builder sidesteps:

– CUDA licensing restrictions

– Framework bloat that assumes you’re running on H100s

– The entire enterprise support ecosystem that adds zero value for self-sufficient researchers

The 3090 Value Proposition: Corporate Amnesia

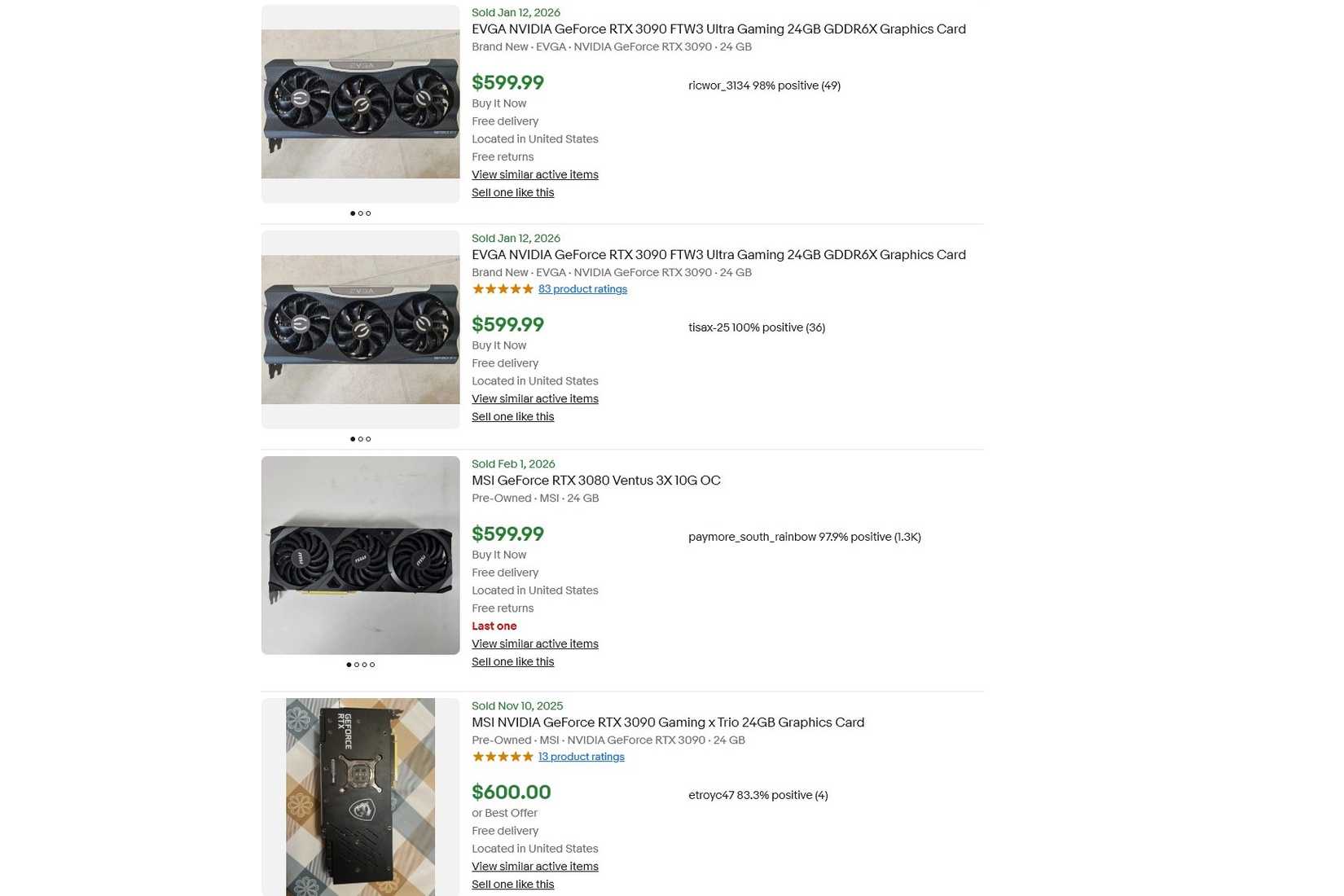

NVIDIA would rather you forget the RTX 3090 exists. In 2026, they’re pushing RTX 5090s starting at $3,500 while artificially limiting the 5080 to 16GB of VRAM. The 3090’s 24GB framebuffer is “unheard of in today’s graphics cards, except on the overpriced RTX 5090”, as one hardware analyst put it.

The math is brutal for NVIDIA’s product managers. A used 3090 costs $600-900 on eBay. Two of them give you 48GB of VRAM for less than the price of a single used RTX 5090. Six of them, like this build, provide 144GB for roughly the cost of one A100, while consuming less power and fitting in a standard server chassis.

VRAM per dollar is the only metric that matters for AI work, and the 3090 crushes everything in its price bracket. The Novi AI hardware guide confirms this: when evaluating GPUs for AI, traditional gaming benchmarks become irrelevant. The 3090 delivers 24GB at a fraction of the cost-per-gigabyte of any modern card.

Performance Reality Check: Numbers That Matter

The 24.5 GB/s P2P bandwidth is respectable but not earth-shattering. For comparison, NVLink on A100s delivers 600 GB/s. But here’s the kicker: you don’t need NVLink speeds for many workloads. Training a 10B parameter diffusion model from scratch, the builder’s target, benefits more from having 144GB of pooled memory than from blazing-fast inter-GPU communication.

The PCIe 4.0 x16 links provide 64 GB/s theoretical bandwidth each. In practice, with overhead and the P2P hack, 24.5 GB/s sustained is enough to keep all six GPUs busy during distributed training runs. The builder is currently wrestling with getting sharding to work reliably for GPT-2 training on OpenWebText, which is exactly the kind of real-world problem that exposes whether your interconnect is actually usable or just benchmark-bait.

Power consumption at 270W per GPU is deliberate. The 3090 can boost to 350W+, but running at 77% of max power reduces thermal stress, extends card lifespan (critical for used hardware), and only sacrifices ~10% performance. For a 24/7 training rig, that’s smart engineering, not corner-cutting.

The Commercial Hardware Comparison: DGX Spark’s Shadow

This DIY build exists in direct opposition to NVIDIA’s DGX Spark, which promised 1 PFLOPS of AI performance in a compact form factor but delivers roughly half that in real workloads. The Spark’s dirty secret isn’t just inflated specs, it’s that you’re paying a 10x premium for software lock-in and a gold paint job.

The 6×3090 build doesn’t have fancy MIG partitioning or enterprise support contracts. But it does give you:

– 144GB VRAM vs Spark’s 64GB

– Freedom to run any framework, any modification

– No telemetry phoning home to NVIDIA

– Upgrade path as better consumer cards emerge

A commenter in the Tinygrad community running an 8×3090 setup with dual CPUs noted that even using two processors on the same motherboard makes things slower. The DIY approach forces you to understand these bottlenecks rather than hide them behind marketing.

The Controversy: Why This Threatens the AI Status Quo

The establishment response to builds like this is predictable: “It’s not supported”, “You’ll have driver issues”, “Enterprise reliability matters.” But that’s missing the point. The entire AI boom was built on researchers cobbling together whatever hardware they could find. The only difference now is that NVIDIA has tasted enterprise money and wants to keep it that way.

This build represents a return to first principles:

1. Hardware should do what it’s physically capable of, not what marketing departments allow

2. Open frameworks beat enterprise software when you need to modify drivers

3. VRAM density trumps architectural improvements for many real workloads

4. Grassroots innovation drives the field more than corporate R&D

The Tinygrad modification proves that NVIDIA’s segmentation is artificial. The 3090’s longevity proves that their product strategy is anti-consumer. And the builder’s success proves that you don’t need a $50,000 box to do serious AI research.

Challenges and Limitations: No Free Lunch

Let’s be clear: this isn’t plug-and-play. The builder is “trying to figure out how to get sharding to work reliably”, which is AI researcher code for “the damn thing crashes every third epoch.” Used GPUs have no warranty, and six of them means six times the failure probability. Power delivery becomes a serious engineering problem, one commenter with a similar build runs four 1200W PSUs to handle spikes.

Thermal management is another beast. Six 30s pumping out 270W each creates a 1620W heat source in a single chassis. That’s space heater territory, requiring serious airflow or liquid cooling. The ROMED-2T motherboard is EATX, so forget about stuffing this in a standard case.

And then there’s the software ecosystem. Tinygrad is lean and hackable, but it doesn’t have PyTorch’s library support or JAX’s XLA compilation magic. You’re trading convenience for control, which is the right call for some but career suicide for others.

The Bigger Picture: AI Democracy vs. AI Feudalism

The most subversive aspect of this build isn’t the hardware, it’s the philosophy. While NVIDIA courts AI feudal lords with DGX systems and CUDA lock-in, builds like this keep the door open for AI democracy. Every researcher who can train a 10B model on $6,000 of used hardware is one less customer for NVIDIA’s enterprise division.

The trend toward edge AI and personal hardware clusters reinforces this. As NeuralCoreTech’s analysis shows, 2026 is seeing a shift from cloud-centric training to edge-centric inference and personalized model execution. The hardware stack, NPUs, edge GPUs, FPGAs, is becoming more heterogeneous and accessible.

This 6×3090 build sits at the intersection of two revolutions: the hardware democratization of AI and the software liberation from corporate frameworks. It’s not perfect, but it’s possible. And possibility is what drives innovation, not polished enterprise solutions.

What This Means for Your AI Strategy

If you’re a researcher, startup, or just an AI enthusiast with a grudge against NVIDIA’s pricing, here’s the actionable takeaway: stop optimizing for the hardware you’re told to use and start optimizing for the hardware you can actually afford.

The RTX 3090 isn’t just a used GPU, it’s a statement. The Tinygrad framework isn’t just alternative software, it’s a weapon against lock-in. And P2P enabling isn’t just a hack, it’s proof that artificial limitations exist to be broken.

The AI community has always thrived on clever workarounds and resourceful engineering. This build is just the latest chapter in that story. The question isn’t whether you should replicate it. The question is: what will you build now that you know these limits are imaginary?

The cracks in NVIDIA’s AI strategy aren’t just showing, they’re being actively exploited by researchers who remember that the best tools are the ones you can actually modify. While the DGX Spark struggles to deliver its promised performance, garage builds like this are pushing the boundaries of what’s possible with open frameworks and consumer hardware.

Welcome to the DIY AI revolution. Bring your own thermal paste.