The Linux kernel documentation is drowning in AI slop, and Linus Torvalds has had enough. His recent rant, “The AI slop issue is NOT going to be solved with documentation”, captures a frustration spreading through technical communities. But while Torvalds throws up his hands, a small group of researchers is performing what amounts to brain surgery on language models, carving out the neural pathways that produce that distinctive syrupy prose. The technique is called abliteration, and the results are hard to ignore.

The Slop Epidemic: When Models Can’t Stop Flowering

Anyone who’s prompted a modern LLM for creative writing knows the pattern. Ask for a short story about a man, and you get:

“In the quiet town of Mossgrove, where the cobblestone streets whispered tales of old, there lived a man named Eli…”

Phrases like “whispered tales of old”, “seemed to hold their breath”, and “her eyes held a sadness that echoed” aren’t just purple prose. They’re statistical patterns baked into the model’s weights, reinforced by countless fanfiction sites and amateur writing blogs in the training data. This “slop” (flowery, cliched language) isn’t just aesthetically annoying: it wastes tokens, obscures meaning, and makes AI-generated content instantly recognizable as artificial.

The standard solution? Fine-tuning on curated datasets. But that’s expensive, time-consuming, and requires expertise most teams don’t have. Enter abliteration, a technique that promises to reduce slop without training a single parameter.

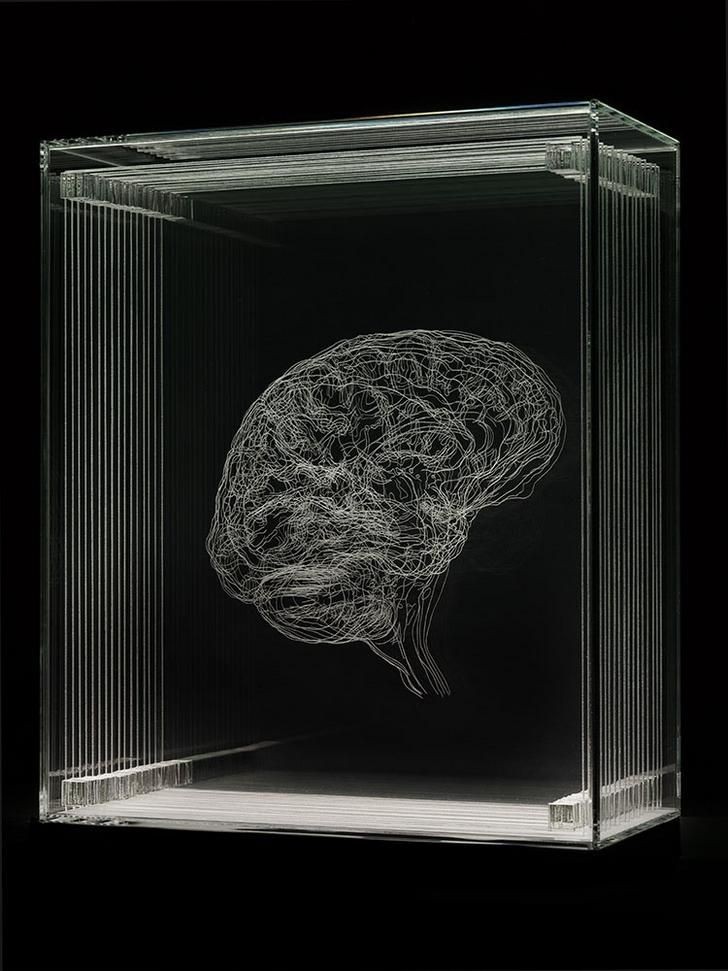

Brain Surgery Without a Medical License

Abliteration works by identifying and suppressing specific activation patterns in the model’s residual stream. The name is a portmanteau of “ablation” (surgical removal) and “obliteration”. The method was originally developed to remove refusal behavior from aligned models, but researcher -p-e-w- realized the same principle could target slop.

Here’s the core insight: when you feed a model two sets of prompts, one that induces slop (“Write with literary cliches”) and one that suppresses it (“Avoid purple prose”), the model’s internal activations show clear semantic separation in specific layers. Using PaCMAP projections (a dimensionality reduction technique in the same family as t-SNE and UMAP, designed to preserve both local and global structure), you can visualize where in the network these concepts diverge.
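To make “separation in specific layers” concrete, here is a minimal sketch that scores each layer by how far apart the two prompt groups sit. It is not Heretic’s code: the `layer_separation` helper, the scoring formula, and the synthetic activations are all assumptions for illustration, and a real run would use residual vectors captured from the model.

```python
import numpy as np

def layer_separation(good, bad):
    """Score how far apart two sets of residual vectors are at each layer.

    good, bad: arrays of shape (n_prompts, n_layers, hidden_dim).
    Returns one score per layer: distance between the group means divided
    by the average within-group spread (larger means cleaner separation).
    """
    gap = np.linalg.norm(good.mean(axis=0) - bad.mean(axis=0), axis=-1)
    spread = 0.5 * (good.std(axis=0).mean(axis=-1) + bad.std(axis=0).mean(axis=-1))
    return gap / spread

# Synthetic stand-in for real activations (hypothetical data):
# 4 layers, 16-dim residuals, with an offset injected only at layer 2.
rng = np.random.default_rng(0)
good = rng.normal(size=(50, 4, 16))
bad = rng.normal(size=(50, 4, 16))
bad[:, 2, :] += 3.0

scores = layer_separation(good, bad)
best_layer = int(np.argmax(scores))
print(best_layer, np.round(scores, 2))
```

A 2D projection such as PaCMAP shows the same thing visually; a scalar score like this is just a quick way to rank layers before plotting.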

For Mistral Nemo (a 12B parameter model notorious for slop production), the magic happens between layers 7 and 10. The residual vectors for slop-inducing vs. slop-suppressing prompts form distinct clusters. Abliteration identifies the direction that separates these clusters and surgically removes it from the model’s forward pass.
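The “direction that separates these clusters” is commonly taken to be the difference between the two groups’ mean activations, and “removing it” is an orthogonal projection. The sketch below shows that idea on synthetic vectors; the function names and data are hypothetical, and Heretic’s actual implementation may differ in detail.

```python
import numpy as np

def slop_direction(bad_acts, good_acts):
    """Unit vector pointing from the slop-suppressing mean to the slop-inducing mean."""
    diff = bad_acts.mean(axis=0) - good_acts.mean(axis=0)
    return diff / np.linalg.norm(diff)

def ablate(acts, direction):
    """Remove each vector's component along `direction` (orthogonal projection)."""
    coeffs = acts @ direction                 # shape (n,)
    return acts - np.outer(coeffs, direction)

# Synthetic residual vectors (hypothetical): the "slop" group is shifted
# along one known axis so we can check that the direction is recovered.
rng = np.random.default_rng(1)
hidden = 32
true_dir = np.zeros(hidden)
true_dir[0] = 1.0
good_acts = rng.normal(size=(200, hidden))
bad_acts = rng.normal(size=(200, hidden)) + 4.0 * true_dir

r = slop_direction(bad_acts, good_acts)
cleaned = ablate(bad_acts, r)
print("alignment with true axis:", float(r @ true_dir))
print("max residual component:", float(np.abs(cleaned @ r).max()))
```

After ablation, every vector has zero component along `r`, while everything orthogonal to `r` is untouched. That is the sense in which the edit is narrow.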

Heretic: The Exorcism Toolkit

The practical implementation comes via Heretic, an open-source tool that automates this process. The key is a carefully crafted configuration file that defines what “slop” means:

[good_prompts]

dataset = "llm-aes/writing-prompts"

prefix = "Write a short story based on the writing prompt below. Avoid literary cliches, purple prose, and flowery language.\n\nWriting prompt:"

residual_plot_label = "Slop-suppressing prompts"

[bad_prompts]

prefix = "Write a short story based on the writing prompt below. Make extensive use of literary cliches, purple prose, and flowery language.\n\nWriting prompt:"

residual_plot_label = "Slop-inducing prompts"

[refusal_markers]

# 100+ slop phrases: "whispered", "ethereal", "celestial", "azure", etc.

The refusal_markers list contains over 100 slop triggers, words like ethereal, celestial, whispered, sun-kissed, melancholic. These aren’t banned words. Heretic checks generated responses for them, so the list acts as the detector that decides whether a response counts as “literary excess”, just as the original markers flag refusals.
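A marker list like this lends itself to simple scoring: count how many responses contain at least one marker. The sketch below assumes case-insensitive substring matching, which may not match Heretic’s exact rule, and the helper names and sample responses are made up for illustration.

```python
def contains_slop(response, markers):
    """True if any marker occurs in the response (case-insensitive substring match)."""
    text = response.lower()
    return any(marker.lower() in text for marker in markers)

def slop_count(responses, markers):
    """Number of responses that contain at least one marker."""
    return sum(contains_slop(r, markers) for r in responses)

# Four of the markers named in the config comment; the real list is much longer.
markers = ["whispered", "ethereal", "celestial", "azure"]

# Hypothetical model outputs.
responses = [
    "The cobblestone streets whispered tales of old.",
    "Every morning, Henry opened his shop at 7:00 AM sharp.",
    "An ETHEREAL glow filled the azure sky.",
]

count = slop_count(responses, markers)
print(f"{count}/{len(responses)} responses contained slop")
```

Substring matching is crude (it would also flag “whispered” used literally), which is worth keeping in mind when reading slop counts as a quality metric.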

The process is surprisingly efficient. On an RTX A6000 at full precision, abliteration took 2.5 hours for Mistral Nemo’s 40 layers. With quantization and shorter response lengths, you could slash that to under 30 minutes.

The Results: Hemingway vs. Fanfiction

The proof is in the prose. Using identical generation parameters, the original and abliterated models were given the same prompt: “Write a short story about a man.”

Original Mistral Nemo (94/100 responses contained slop):

- Title: The Clockwork Heart

- Style: “cobblestone streets whispered tales of old”, “seemed to hold their breath”, “her eyes held a sadness that echoed”

- Verdict: Syrupy neo-romantic fanfiction

Abliterated Model (63/100 responses contained slop):

- Title: The Clockmaker

- Style: “Every morning, Henry opened his shop at 7:00 AM sharp… He didn’t have many customers these days, but he didn’t mind.”

- Verdict: Austere, Hemingway-esque minimalism with emotional undercurrent intact

The KL divergence of 0.0855 between the original and abliterated models suggests the modification is surgical: it alters stylistic tendencies while leaving the model’s output distribution, and presumably its capabilities, largely intact. As one developer noted, this could be packaged as a LoRA adapter, letting users tune slop strength with a knob.
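For readers unfamiliar with the metric, KL divergence here compares the next-token probability distributions of the two models on the same prompts; a value near zero means the models predict almost the same tokens. The sketch below computes a mean KL from logits. The direction of the divergence (original relative to modified), the averaging, and the random logits are assumptions for illustration, not a description of how the 0.0855 figure was produced.

```python
import numpy as np

def log_softmax(logits):
    """Numerically stable log-softmax over the last axis."""
    z = logits - logits.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))

def mean_kl(logits_p, logits_q):
    """Mean KL(P || Q) over prompts, from next-token logits of shape (n, vocab)."""
    log_p = log_softmax(logits_p)
    log_q = log_softmax(logits_q)
    return float((np.exp(log_p) * (log_p - log_q)).sum(axis=-1).mean())

# Hypothetical logits: 8 prompts, vocabulary of 100 tokens.
rng = np.random.default_rng(2)
original = rng.normal(size=(8, 100))
noise = rng.normal(size=(8, 100))
slightly_modified = original + 0.1 * noise
heavily_modified = original + 2.0 * noise

kl_same = mean_kl(original, original)
kl_small = mean_kl(original, slightly_modified)
kl_large = mean_kl(original, heavily_modified)
print(kl_same, kl_small, kl_large)
```

A small perturbation yields a small divergence and a large one a large divergence, which is why a low value is read as evidence that behavior outside the targeted style is mostly preserved.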

The Deep Magic Controversy

The technique raises fascinating questions about how LLMs represent concepts. The working assumption behind abliteration is that residual space encodes semantics, not syntax. You’re not just banning words; you’re suppressing the model’s tendency toward a particular way of thinking.

This sparked a debate on the original Reddit thread. Some worry that slop might be integral to the model’s reasoning, that those “stupid little nothings” serve as cognitive buffers, like a student prefacing an answer with “The answer to 5 times 7 is…” to buy thinking time. If you carve out the padding, do you damage the core reasoning?

The developer’s response: “There’s some deep magic going on in residual space where things are often already connected in the same way humans tend to think about them.” In other words, the model’s representation of “cliche” is naturally bundled with “bad writing”, so attacking one concept automatically weakens the other.

Beyond Slop: The Abliteration Frontier

The technique’s potential extends far beyond literary style. Developers are already asking:

- Repetition reduction? Probably not. Repetition is an attention mechanism issue, not a semantic concept.

- Overused patterns? Likely yes. If you can define it, you can abliterate it.

- Creativity enhancement? This is where it gets interesting. Facebook’s DARLING project explores steering models toward novelty, and abliteration could complement such approaches by removing creative crutches.

The method’s beauty is its precision. Unlike RLHF, which smears preferences across the entire model, abliteration targets specific semantic directions. It’s less “retraining” and more “neural acupuncture.”
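One way to see why the edit is so targeted: applied to a weight matrix, removing a single direction is a rank-one change, and scaling that change gives an adjustable strength. The sketch below is a generic linear-algebra illustration under assumed shapes; `ablate_weights` and its `strength` parameter are hypothetical names, not Heretic’s API.

```python
import numpy as np

def ablate_weights(W, direction, strength=1.0):
    """Scale down the output component of W along `direction`.

    W: (hidden, d_in) matrix that writes into the residual stream.
    strength=1.0 removes the direction entirely; 0.0 leaves W unchanged.
    Computes (I - strength * r r^T) W for the unit vector r.
    """
    r = direction / np.linalg.norm(direction)
    return W - strength * np.outer(r, r @ W)

# Hypothetical weights and direction.
rng = np.random.default_rng(3)
W = rng.normal(size=(16, 8))
d = rng.normal(size=16)
r = d / np.linalg.norm(d)

full = ablate_weights(W, d)                 # direction fully removed
half = ablate_weights(W, d, strength=0.5)   # direction attenuated by half

print("rank of the change:", np.linalg.matrix_rank(W - full))
print("remaining component (full):", float(np.abs(r @ full).max()))
```

Because the difference between the edited and original matrix has rank one, it can in principle be stored as a low-rank update and applied at any strength, which is the intuition behind shipping it as an adapter.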

The Verdict: Documentation Won’t Fix This, But Math Might

Torvalds is right that policy documents won’t stop slop. Bad actors won’t label their AI-generated patches. But he underestimates the technical community’s ability to solve problems at the root.

Abliteration doesn’t ask writers to behave better; it rewrites the model’s instincts. For technical documentation, where clarity trumps eloquence, this is transformative. Imagine kernel docs that explain system calls without “whispering through the corridors of time.”

The technique is still young. The slop-reduced model still produced slop in 63 of 100 test responses, down from 94. But it’s a first step toward models that can be tuned for specific communication styles without the cost and complexity of fine-tuning.

For practitioners, the message is clear: stop prompting harder and start operating. The tools are open-source, the method is reproducible, and the results speak for themselves. Your LLM’s slop addiction isn’t a documentation problem; it’s a surgical one.

Try it yourself: Install Heretic from Git (not PyPI), grab the config.noslop.toml, and start cutting. Just remember: brain surgery is easier when you can see what you’re doing.