Qwen is eating the open-source LLM market while nobody was looking. With 20% of OpenRouter traffic and benchmark scores that make Claude sweat, Alibaba’s open-source model family isn’t just another academic project; it has become the default choice for developers who actually need to ship things. But here’s the uncomfortable question: can a model family funded by Alibaba Cloud’s budget really keep pace with OpenAI’s $100B war chest and Anthropic’s Amazon-backed billions?

The data tells a story that defies the usual narrative about open-source models being perpetually behind. On OpenRouter, the aggregator that shows what models developers actually use rather than what companies claim they’re using, Qwen’s market share isn’t a rounding error; it’s a statement. Meanwhile, in head-to-head benchmarks on mathematical reasoning and coding tasks, Qwen3 variants are posting numbers that force a recalibration of what we thought possible from non-Western models. Yet the sustainability concerns are real, and they go deeper than just funding.

The 20% That Matters: Decoding OpenRouter Metrics

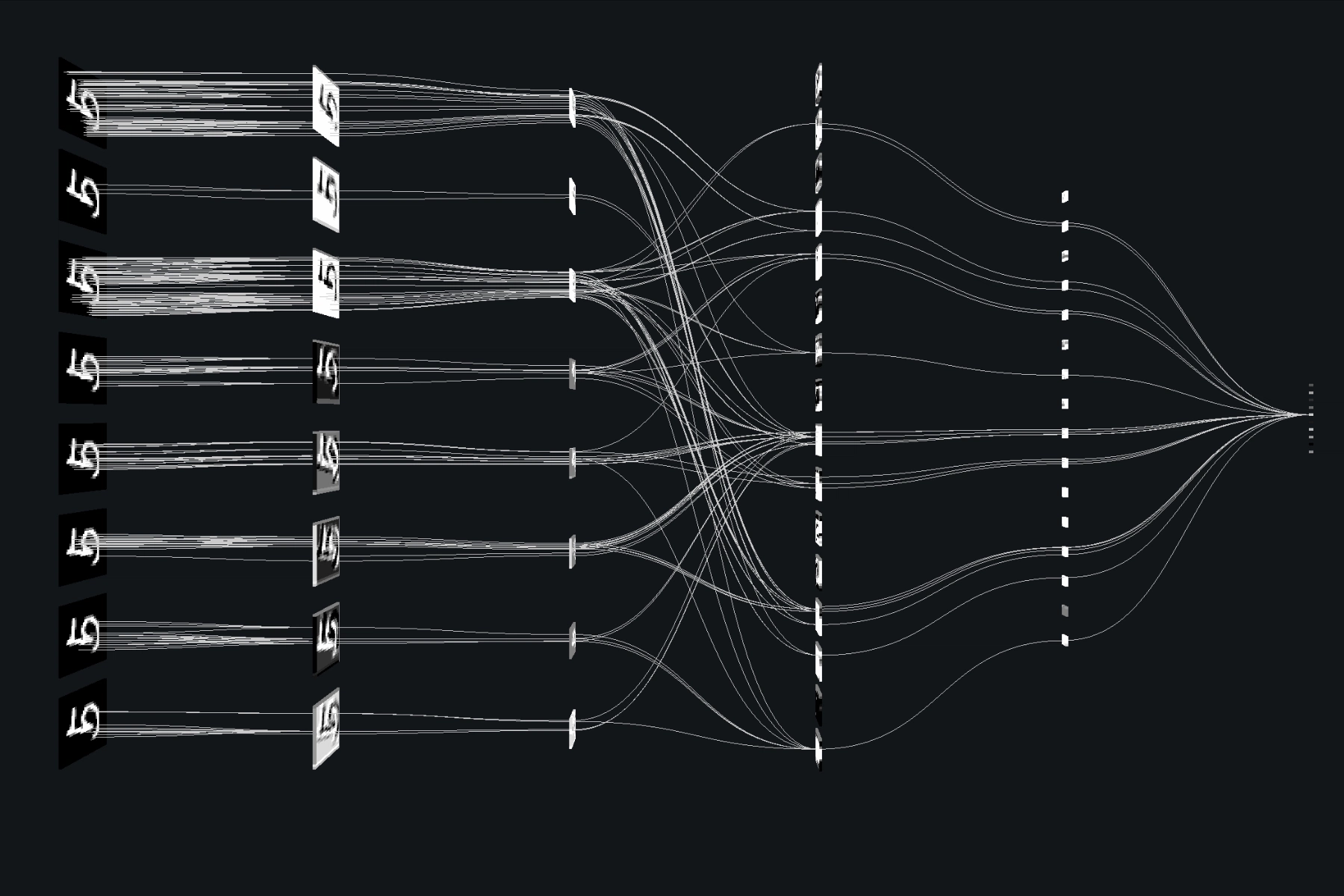

OpenRouter’s ranking system cuts through marketing fluff by measuring actual token consumption across its platform. When Qwen captures 20% of that traffic, roughly one in every five tokens processed on the platform goes to Alibaba’s model family. To understand why, look at the category breakdowns from the rankings:

In programming tasks, specialized models dominate, but Qwen maintains a consistent presence where general-purpose models typically flame out. The real story is in multilingual applications: Qwen’s Chinese-English bilingual training gives it a structural advantage that monolingual models can’t easily replicate. One developer on Reddit put it bluntly: “Qwen handles Asian and European languages better than most open peers.”

But raw traffic share doesn’t tell the whole story. The CSDN analysis of OpenRouter data reveals a critical pattern: while Claude Sonnet commands 14.0% of total usage and Google’s Gemini variants collectively hold 18.7%, Qwen’s 20% represents sustained growth across multiple model sizes, a distribution strategy that turns out to be its secret weapon.

This spread across model sizes reveals something crucial: Qwen isn’t winning on flagship models alone. It’s winning by being everywhere developers need it to be.

The Benchmark Reality Check: When Numbers Lie and Tell the Truth

Academic benchmarks are the original sin of LLM marketing, but the arXiv cost-benefit analysis provides sobering context. Its Table I shows Qwen3-235B posting 79.0% on GPQA and 98.4% on MATH-500, numbers that put it squarely in Claude-4 Opus territory. But as one developer noted after extended use, “Benchmarks can be deceiving.”

The paper’s performance table tells a more nuanced story:

| Model | GPQA | MATH-500 | LiveCodeBench | MMLU-Pro | API Cost (In/Out, USD/M tokens) |
|---|---|---|---|---|---|
| Qwen3-235B | 79.0% | 98.4% | 78.8% | 84.3% | n/a |
| Claude-4 Opus | 70.1% | 94.1% | 54.2% | 86.0% | $15.00 / $75.00 |
| GPT-5 | 85.4% | 99.4% | 66.8% | 87.1% | $1.25 / $10.00 |

Qwen3-235B beats Claude-4 Opus on GPQA by nearly 9 points and crushes it on LiveCodeBench by nearly 25 points. The MATH-500 score of 98.4% is within spitting distance of GPT-5’s 99.4%. For a model whose weights you can download and run on your own hardware, that’s not just competitive; it’s disruptive.
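The point gaps quoted above can be checked directly against the table. The snippet below is a minimal sketch that hard-codes the scores as reproduced in the table and computes percentage-point differences; the dictionary layout and the `point_deltas` helper are illustrative, not something from the paper.

```python
# Scores (%) as reproduced in the benchmark table; the layout is illustrative.
SCORES: dict[str, dict[str, float]] = {
    "Qwen3-235B": {"GPQA": 79.0, "MATH-500": 98.4, "LiveCodeBench": 78.8, "MMLU-Pro": 84.3},
    "Claude-4 Opus": {"GPQA": 70.1, "MATH-500": 94.1, "LiveCodeBench": 54.2, "MMLU-Pro": 86.0},
    "GPT-5": {"GPQA": 85.4, "MATH-500": 99.4, "LiveCodeBench": 66.8, "MMLU-Pro": 87.1},
}


def point_deltas(model: str, baseline: str) -> dict[str, float]:
    """Percentage-point difference (model minus baseline) per benchmark, rounded to 0.1."""
    return {
        bench: round(SCORES[model][bench] - SCORES[baseline][bench], 1)
        for bench in SCORES[model]
    }


if __name__ == "__main__":
    for rival in ("Claude-4 Opus", "GPT-5"):
        print(rival, point_deltas("Qwen3-235B", rival))
```

Against Claude-4 Opus this yields +8.9 points on GPQA and +24.6 on LiveCodeBench, but also a 1.7-point deficit on MMLU-Pro; against GPT-5, Qwen3-235B leads only on LiveCodeBench (+12.0). That is the “more nuanced story” the table tells.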

The Consumer Hardware Revolution

Here’s where Qwen’s strategy gets clever. While competitors chase trillion-parameter models that require data-center scale, Qwen optimized for what developers actually have: consumer GPUs.

The arXiv paper’s hardware breakdown shows Qwen3-30B runs efficiently on a single RTX 5090 ($2,000) at 180 tokens/sec, delivering performance that beats models costing 10x more to deploy. Compare the deployment specs:

| Model | VRAM (FP8) | Hardware Deployment | Hardware Cost | Token Capacity/Month |
|---|---|---|---|---|
| Qwen3-235B | 235 GB | 4× A100-80GB | $60,000 | 253.4M |
| Qwen3-30B | 30 GB | 1× RTX 5090 | $2,000 | 114.0M |
| Llama-3.3-70B | 70 GB | 1× A100-80GB | $15,000 | 120.4M |
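The VRAM column follows a simple rule of thumb: at FP8 each parameter takes one byte, so a model needs roughly as many gigabytes as it has billions of parameters. The sketch below encodes that weights-only estimate. It deliberately ignores KV cache, activations, and runtime overhead, so real deployments need headroom beyond these figures; the function name and the 4-bit comparison are illustrative assumptions, not taken from the paper.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes).

    Ignores KV cache, activations, and framework overhead, so treat the
    result as a lower bound on required VRAM.
    """
    bytes_per_weight = bits_per_weight / 8
    # billions of parameters * bytes per parameter = gigabytes
    return params_billion * bytes_per_weight


if __name__ == "__main__":
    for name, params in [("Qwen3-235B", 235), ("Qwen3-30B", 30), ("Llama-3.3-70B", 70)]:
        fp8 = weight_memory_gb(params, 8)
        q4 = weight_memory_gb(params, 4)
        print(f"{name}: ~{fp8:.0f} GB at FP8, ~{q4:.0f} GB at 4-bit")
```

At about 30 GB of FP8 weights, Qwen3-30B leaves little room for KV cache on a 32 GB RTX 5090; halving the bit width roughly halves the weight footprint, which is why quantized variants matter for single-GPU setups.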

For SMEs processing under 10M tokens/month, Qwen3-30B breaks even with Claude-4 Opus in 0.3 months, paying for itself in roughly nine days. Even the large 235B model reaches break-even with Claude in 4.3 months, a fraction of the hardware’s useful life.

The Reddit community’s sentiment perfectly captures the practical impact: one developer noted Qwen models “can be run on consumer grade hardware (1 graphics card, under 16gb vram) and even when quants are needed it’s usually higher than Q4 leading to less intelligence falloff.”

Real-World Performance: The OCR Case Study

Benchmarks are one thing; production performance is another. The Analytics Vidhya comparison of OCR models provides a brutal reality check. When tested on IRS Form 5500-EZ, a document mixing printed text, handwritten fields, and complex layout, Qwen-3 VL showed both brilliance and fatal flaws.

Character-level accuracy: Qwen-3 VL achieved the highest recognition quality, correctly parsing “ACME Corp Software” where DeepSeek OCR misread it as “Aone Corp Software.” The model’s layout understanding preserved table structures and even captured checkbox marks correctly.

Latency failure: Despite its superior accuracy, Qwen-3 VL proved unsuitable for production in this test. The system “started giving out infinite dots while trying to finalize the extraction”, a runaway output that ended in a timeout and makes it unusable for high-volume pipelines. As the analysis concluded: “High accuracy is meaningless without low-latency delivery.”

Speed comparison:

– Mistral OCR: 3-4 seconds, excellent layout understanding

– DeepSeek OCR: 4-6 seconds, moderate quality

– Qwen-3 VL: Indeterminate (timeout), excellent quality but failed to complete
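The “infinite dots” failure is the kind of degenerate output a pipeline can catch before it turns into a timeout. The sketch below is one hypothetical guard, not something from the Analytics Vidhya comparison: it wraps any iterable of streamed text chunks and aborts when a single character repeats too many times in a row or the total output grows past a cap. The thresholds (`max_run`, `max_chars`) and the `RunawayOutputError` name are illustrative assumptions to tune per workload.

```python
from typing import Iterable, Iterator


class RunawayOutputError(RuntimeError):
    """Raised when a streamed model response looks degenerate."""


def guard_stream(
    chunks: Iterable[str], max_run: int = 200, max_chars: int = 20_000
) -> Iterator[str]:
    """Pass chunks through, aborting on long single-character runs or oversized output."""
    run_char, run_len, total = "", 0, 0
    for chunk in chunks:
        for ch in chunk:
            # Track the current run of identical characters across chunk boundaries.
            if ch == run_char:
                run_len += 1
            else:
                run_char, run_len = ch, 1
            if run_len > max_run:
                raise RunawayOutputError(f"{run_len} consecutive {ch!r} characters")
        total += len(chunk)
        if total > max_chars:
            raise RunawayOutputError(f"output exceeded {max_chars} characters")
        yield chunk


if __name__ == "__main__":
    print("".join(guard_stream(["Name: ", "ACME Corp Software"])))
    try:
        "".join(guard_stream(["Total: ", "." * 1_000]))
    except RunawayOutputError as err:
        print("aborted:", err)
```

Failing fast on the first suspicious run lets the caller retry, route the document elsewhere, or flag it for review instead of waiting out a timeout.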

This exemplifies the gap between benchmark performance and deployment reality that the arXiv paper warns about. Qwen’s technical capabilities are proven, but operational robustness remains a question mark.

The Sustainability Equation: Can Open Source Compete at Scale?

The Reddit discussion that sparked this analysis cuts to the core concern: “This model doesn’t appear to be sustainable however. This will require masssive inflow of resources and talent to keep up with giants like Anthropic and OpenAI or Qwen will fast become a thing of the past very fast.”

The counterargument is straightforward: Qwen has the resources. As one commenter pointed out, “They have the funding, they’re the freaking Alibaba Cloud and DAMO Academy.” The backing isn’t hypothetical: it comes from one of the world’s largest cloud providers.

But funding alone doesn’t guarantee relevance. The arXiv paper’s break-even analysis reveals the economic pressure:

| Model Comparison | Break-Even Time (months) | Performance Difference |
|---|---|---|
| Qwen3-235B vs Claude-4 Opus | 4.3 | +9.03% (favoring Qwen) |
| Qwen3-235B vs GPT-5 | 34.0 | +0.45% (favoring Qwen) |
| Qwen3-30B vs Claude-4 Opus | 0.3 | +3.38% (favoring Qwen) |

The numbers show Qwen’s economic advantage is strongest against Claude, precisely the competitor it most needs to outmaneuver. Against GPT-5, the break-even stretches to nearly 3 years, reflecting OpenAI’s aggressive pricing.
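The break-even logic itself is simple arithmetic: divide the up-front hardware cost by the monthly amount saved versus the API. The sketch below applies that simplified formula to hypothetical inputs (a workload of 100M input plus 100M output tokens per month and $500/month of local operating cost, both assumptions). It does not reproduce the paper’s own volume, utilization, or cost assumptions, so its outputs will not match the table; what it shows is the mechanism behind the Claude/GPT-5 gap, where cheaper API pricing shrinks monthly savings and stretches the payback period.

```python
import math


def monthly_api_cost(input_mtok: float, output_mtok: float,
                     price_in: float, price_out: float) -> float:
    """API spend per month in USD; volumes in millions of tokens, prices in USD per million."""
    return input_mtok * price_in + output_mtok * price_out


def break_even_months(hardware_cost: float, api_monthly: float, local_monthly: float) -> float:
    """Months until hardware cost is recovered; inf if self-hosting never saves money."""
    savings = api_monthly - local_monthly
    if savings <= 0:
        return math.inf
    return hardware_cost / savings


if __name__ == "__main__":
    HARDWARE = 60_000  # 4x A100-80GB figure from the deployment table
    LOCAL_OPEX = 500   # hypothetical power/maintenance cost per month
    # Hypothetical workload: 100M input + 100M output tokens per month.
    for name, p_in, p_out in [("Claude-4 Opus", 15.00, 75.00), ("GPT-5", 1.25, 10.00)]:
        api = monthly_api_cost(100, 100, p_in, p_out)
        months = break_even_months(HARDWARE, api, LOCAL_OPEX)
        print(f"{name}: ${api:,.0f}/month API, break-even in {months:.1f} months")
```

With these made-up inputs, the hardware pays for itself in about 7 months against Claude-4 Opus but 96 months against GPT-5; the ratio, not the absolute values, is the point.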

The Licensing Advantage Nobody Talks About

While everyone obsesses over parameter counts, Qwen3’s Apache 2.0 license is quietly reshaping deployment strategies. Unlike Meta’s LLaMA, whose community license attaches conditions to commercial use, Qwen lets enterprises self-host without legal anxiety.

The Genius Firms review highlighted exactly this: “Apache 2.0 licensing means commercial use is allowed. That’s why researchers and startups are already hosting it on-prem without worrying about vendor lock-in.”

This creates a flywheel effect: more deployments → more community improvements → better performance → more deployments. The OpenRouter data suggests this is already happening, with usage growing steadily across 2024-2025.

Deployment Decision Framework: When Qwen Makes Sense

Based on the arXiv paper’s cost-benefit analysis, here’s when Qwen becomes the rational choice:

For SMEs (\<10M tokens/month): Qwen3-30B breaks even in under a month on consumer hardware. The ROI is immediate and overwhelming.

For Medium Enterprises (10-50M tokens/month): Medium models like Qwen3-30B offer “balanced economics” with break-even periods of 3.8-34 months, depending on the commercial alternative being replaced.

For Large Enterprises (>50M tokens/month): The 235B model becomes viable at scale, though break-even extends to 3.5-69.3 months. The key is utilizing existing GPU clusters to reduce incremental CapEx.
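The volume thresholds in this framework reduce to a simple lookup. The sketch below encodes only the token-volume cut-offs (under 10M, 10-50M, over 50M tokens per month). It ignores data-sovereignty requirements, existing hardware, and which commercial API is being replaced, all of which drive the break-even ranges, so treat its output as a starting point rather than a recommendation. The function name and return strings are illustrative.

```python
def suggested_qwen_tier(monthly_tokens_millions: float) -> str:
    """Map monthly token volume (in millions) to the tiers described in the framework.

    Only volume is considered; the quoted break-even ranges depend on the
    commercial API being replaced.
    """
    if monthly_tokens_millions < 0:
        raise ValueError("token volume cannot be negative")
    if monthly_tokens_millions < 10:
        return "SME: Qwen3-30B on consumer hardware (break-even under a month)"
    if monthly_tokens_millions <= 50:
        return "Medium enterprise: Qwen3-30B-class model (break-even 3.8-34 months)"
    return "Large enterprise: Qwen3-235B on existing GPU clusters (break-even 3.5-69.3 months)"


if __name__ == "__main__":
    for volume in (5, 25, 120):
        print(volume, "->", suggested_qwen_tier(volume))
```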

The critical insight from the paper: deployment economics are “highly context-dependent and challenge common assumptions about local feasibility.” Qwen’s advantage isn’t universal; it’s specific to organizations that value data sovereignty and have predictable, high-volume workloads.

The Community Verdict

Developer sentiment on Reddit reveals a nuanced reality. The model family wins on practical metrics: size efficiency, multilingual capability, and cost-effectiveness. But skepticism persists about long-term innovation velocity.

One developer’s month-long trial concluded: “Qwen has become my default open model family for experimentation, writing, and lightweight deployments. It’s flexible, affordable, and legally clear, traits most enterprises crave.”

The caveat? “For code debugging, it still trails GPT-4-Turbo, for deep creative writing, it occasionally over-summarizes.” The model excels as a “workhorse” for generic tasks but isn’t yet pushing boundaries in specialized domains.

The Bottom Line: A Sustainable Disruption

Qwen’s 20% market share isn’t a fluke; it’s the result of a strategy that prioritizes developer needs over marketing headlines. By optimizing for consumer hardware, maintaining Apache 2.0 licensing, and delivering benchmark-competitive performance, Alibaba has created a model family that solves real deployment problems.

The sustainability question remains only partially answered. Yes, Alibaba Cloud provides massive resources. Yes, the technical foundation is solid. But maintaining innovation velocity requires sustained investment.

The data suggests this is a sustainable disruption, not a flash in the pan. The break-even economics work. The performance is real. And most importantly, developers are voting with their API calls.

For organizations evaluating LLM strategies, Qwen isn’t just an open-source curiosity anymore; it’s a strategic option that demands serious consideration. The question isn’t whether it’s good enough to use. The question is whether the incumbents can adapt fast enough to compete.

Ready to evaluate Qwen for your use case? Start with the 30B variant on consumer hardware. If your workload exceeds 10M tokens/month, run the break-even math from the arXiv paper. And if you’re processing more than 50M tokens/month, the 235B model might already be cheaper than your current API bill.