The traditional API gateway pattern just got disrupted. With Gemini 3’s release, Google didn’t just ship another AI model – they shipped an architectural blueprint. The era where APIs merely exposed backend services is over. Welcome to the age where APIs are the intelligence layer.

The AI Gateway Pattern Emerges

Gemini 3’s rollout strategy reveals a fundamental shift in how cloud providers think about service exposure. When Google announced that Gemini 3 is “available today across a suite of Google products so you can use it in your daily life to learn, build and plan anything”, they weren’t just describing feature parity – they were describing a new architectural pattern.

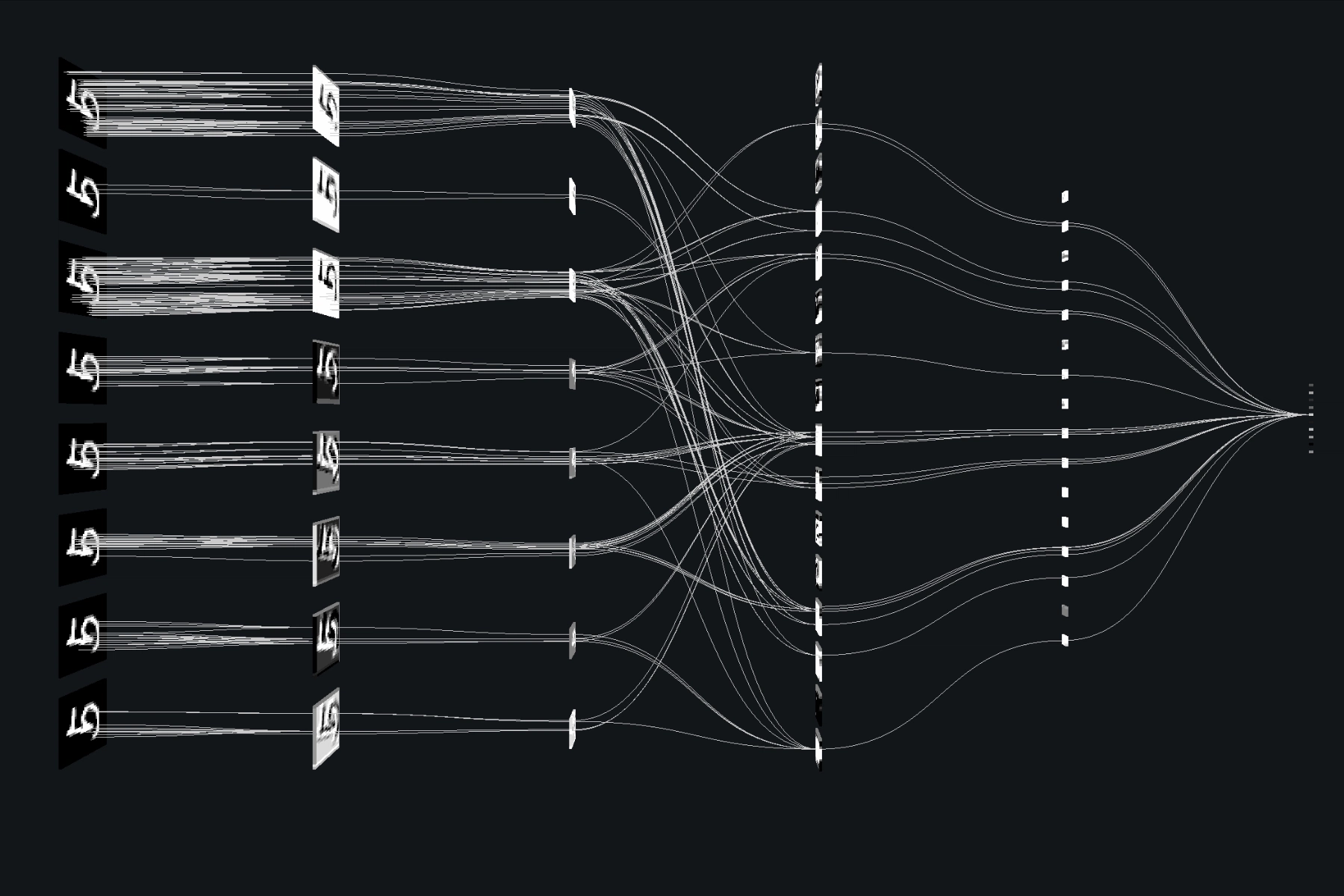

The AI gateway isn’t just another API endpoint. It’s a service abstraction layer that fundamentally changes how we think about application boundaries. Traditional microservices expose discrete functionality: authentication service, payment service, notification service. The AI gateway exposes capability – reasoning, coding, planning, multimodal understanding – that orchestrates across multiple traditional services.
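To make the boundary shift concrete, here is a minimal Python sketch of a gateway that registers and exposes capabilities rather than services. Everything in it is hypothetical: the `CapabilityGateway` and `Capability` classes, the `checkout` capability, and the three stub functions standing in for authentication, payment, and notification services. The orchestration inside `checkout` is hard-coded for clarity; in an AI gateway, a model would plan those calls instead.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


# Hypothetical stand-ins for three separate microservices.
def auth_service_verify(user_id: str) -> bool:
    return user_id.startswith("user-")


def payment_service_charge(user_id: str, cents: int) -> str:
    return f"charge:{user_id}:{cents}"


def notification_service_send(user_id: str, message: str) -> str:
    return f"notified:{user_id}:{message}"


@dataclass
class Capability:
    name: str
    description: str  # what a developer or agent reads when discovering it
    handler: Callable[..., dict]


@dataclass
class CapabilityGateway:
    capabilities: Dict[str, Capability] = field(default_factory=dict)

    def register(self, cap: Capability) -> None:
        self.capabilities[cap.name] = cap

    def describe(self) -> Dict[str, str]:
        # Callers discover capabilities by name and purpose, never by service.
        return {name: cap.description for name, cap in self.capabilities.items()}

    def invoke(self, name: str, **kwargs) -> dict:
        if name not in self.capabilities:
            raise KeyError(f"unknown capability: {name}")
        return self.capabilities[name].handler(**kwargs)


def checkout(user_id: str, cents: int) -> dict:
    # One capability, three services. The orchestration is hard-coded here;
    # in an AI gateway a model would plan these calls instead.
    if not auth_service_verify(user_id):
        return {"ok": False, "error": "unauthenticated"}
    receipt = payment_service_charge(user_id, cents)
    notice = notification_service_send(user_id, "payment received")
    return {"ok": True, "receipt": receipt, "notification": notice}


gateway = CapabilityGateway()
gateway.register(Capability(
    "checkout", "Verify the user, take payment, and confirm by notification.", checkout
))
result = gateway.invoke("checkout", user_id="user-42", cents=1999)
```

A caller only ever sees `checkout` and its description; the services underneath can be split, merged, or replaced without breaking that contract.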

Consider this: Gemini 3 Pro now “orchestrate[s] complex workflows across different services that hold your team’s context”, as noted in the Google Developers Blog. This isn’t an incremental improvement; it’s an architectural revolution.

What Traditional Microservices Get Wrong

The microservice architecture pattern emerged to solve scaling and organizational problems, but it created new ones. Service boundaries become brittle, integration complexity explodes, and the overhead of managing interservice communication often outweighs the benefits. The AI gateway pattern attacks these problems head-on.

Take the Gemini CLI demonstration of debugging performance issues in a live Cloud Run service. The AI doesn’t just call APIs; it understands context, plans multi-step investigations, and executes across service boundaries on its own. In a traditional microservices setup, you’d need specialized services for distributed tracing, log aggregation, and performance monitoring, plus complex orchestration logic to tie them together. The AI gateway collapses this into a single interface.

The numbers speak for themselves: Gemini 3 Pro scores 54.2% on Terminal-Bench 2.0, which tests a model’s ability to use tools to operate a computer via the terminal. More tellingly, on Vending-Bench 2, a long-horizon planning benchmark, it maintains “consistent tool usage and decision-making for a full simulated year of operation.” This isn’t just better AI; it’s a different way of building systems.

The API Pricing Revolution

Google’s pricing model for Gemini 3 Pro reveals their architectural bet. At $2 per million input tokens and $12 per million output tokens (for prompts up to 200,000 tokens), they’re not selling compute cycles; they’re selling units of intelligence. This fundamentally changes how we calculate the cost of features.

In traditional microservices, you pay for CPU time, memory, and network egress. With AI gateways, you pay for capability consumed. The planning question shifts from “how many instances do we need?” to “what intelligence do we want to expose?”

The Gemini 3 API pricing structure reflects this shift. It’s usage-based intelligence consumption, not resource-based infrastructure provisioning. This changes everything from cost accounting to capacity planning.
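To see what budgeting by intelligence consumed looks like, the sketch below estimates a feature’s monthly bill from token volume alone, using the per-million-token rates quoted above. The workload figures are made up for illustration, and the sketch assumes those rates apply flatly to every request. At 10,000 requests a day with roughly 3,000 input and 800 output tokens each, it works out to about $0.0156 per request and $4,680 over 30 days.

```python
# Rates quoted above for Gemini 3 Pro, in USD per million tokens.
# Assumption: they apply flatly to every request in this estimate.
INPUT_USD_PER_MILLION = 2.00
OUTPUT_USD_PER_MILLION = 12.00


def token_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Cost of a given number of input and output tokens at the flat rates above."""
    return (input_tokens * INPUT_USD_PER_MILLION
            + output_tokens * OUTPUT_USD_PER_MILLION) / 1_000_000


def monthly_feature_cost_usd(requests_per_day: int, avg_input_tokens: int,
                             avg_output_tokens: int, days: int = 30) -> float:
    """Budget a feature by expected token volume instead of instance count."""
    total_requests = requests_per_day * days
    return token_cost_usd(total_requests * avg_input_tokens,
                          total_requests * avg_output_tokens)


# Hypothetical workload: 10,000 requests/day, ~3,000 tokens in, ~800 tokens out.
per_request = token_cost_usd(3_000, 800)
per_month = monthly_feature_cost_usd(10_000, 3_000, 800)
print(f"${per_request:.4f} per request, ${per_month:,.2f} per month")
```

The inputs are request counts and token averages; nothing in the estimate refers to instances, CPU, or memory.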

Agentic Systems Demand New Boundaries

Google Antigravity, the new agentic development platform, demonstrates where this is heading. When agents have “direct access to the editor, terminal and browser” and can “autonomously plan and execute complex, end-to-end software tasks simultaneously on your behalf while validating their own code”, we’re no longer talking about service-oriented architecture.

This is capability-oriented architecture. The boundaries aren’t between services; they’re between capabilities. An agent doesn’t care whether authentication happens in AuthService v2.3 or in a UserManagement microservice; it cares about the capability to verify identity and permissions.
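One way to express this boundary in code is to let the agent depend on an interface for the capability and hide each backing service behind an adapter. In this Python sketch, `IdentityCapability`, both adapters, and `agent_deploy_step` are hypothetical names; `typing.Protocol` gives structural typing, so the adapters satisfy the interface without inheriting from it.

```python
from typing import Dict, Protocol, Set


class IdentityCapability(Protocol):
    """What the agent depends on: may this caller perform this action?"""

    def can_perform(self, token: str, action: str) -> bool: ...


class AuthServiceAdapter:
    """Hypothetical adapter over an 'AuthService v2.3' that stores per-token grants."""

    def __init__(self, grants: Dict[str, Set[str]]):
        self._grants = grants

    def can_perform(self, token: str, action: str) -> bool:
        return action in self._grants.get(token, set())


class UserManagementAdapter:
    """Hypothetical adapter over a 'UserManagement' service that models roles instead."""

    def __init__(self, roles: Dict[str, str], role_actions: Dict[str, Set[str]]):
        self._roles = roles
        self._role_actions = role_actions

    def can_perform(self, token: str, action: str) -> bool:
        role = self._roles.get(token)
        return role is not None and action in self._role_actions.get(role, set())


def agent_deploy_step(identity: IdentityCapability, token: str) -> str:
    # The agent asks for the capability; it never learns which service answered.
    return "deploying" if identity.can_perform(token, "deploy") else "refusing"


legacy = AuthServiceAdapter({"tok-1": {"deploy", "read"}})
modern = UserManagementAdapter({"tok-1": "release-manager"}, {"release-manager": {"deploy"}})
```

Swapping `legacy` for `modern` changes nothing on the agent’s side, even though the two services model permissions differently.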

Consider the implementation patterns for the Gemini API’s File Search tool, where developers create “one store per organization” rather than one per service. The AI gateway abstracts away the service topology, focusing instead on organizational boundaries and data access patterns.
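A small sketch of the “one store per organization” idea: a registry that resolves every caller to a single store keyed by organization, creating it on first use. `OrgStoreRegistry` and `fake_create_store` are hypothetical; the callback stands in for a real store-creation call, which this sketch deliberately does not name.

```python
from typing import Callable, Dict, List


class OrgStoreRegistry:
    """Resolve every request to one shared store per organization.

    `create_store` is a hypothetical callback standing in for whatever
    store-creation call your file search backend exposes.
    """

    def __init__(self, create_store: Callable[[str], str]):
        self._create_store = create_store
        self._stores: Dict[str, str] = {}

    def store_for(self, org_id: str) -> str:
        # Keyed by organization, not by calling service: billing and support
        # code in the same org end up searching the same store.
        if org_id not in self._stores:
            self._stores[org_id] = self._create_store(f"org-{org_id}")
        return self._stores[org_id]


created: List[str] = []


def fake_create_store(display_name: str) -> str:
    # Records the call and returns a made-up resource name.
    created.append(display_name)
    return f"stores/{display_name}"


registry = OrgStoreRegistry(fake_create_store)
billing_store = registry.store_for("acme")   # called from a billing service
support_store = registry.store_for("acme")   # called from a support service
other_store = registry.store_for("globex")
```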

The Death of Service Decomposition

For years, we’ve obsessed over service decomposition – breaking applications into smaller, focused services. But Gemini 3’s approach suggests we’ve been optimizing the wrong thing.

When you can describe a “retro 3D spaceship game with richer visualizations and improved interactivity” and get working code from a single prompt, the value isn’t in how well-decomposed your services are. It’s in how well-integrated your capabilities are.

The new unit of decomposition isn’t the service – it’s the capability. And capabilities map directly to what users actually want to do, not to technical implementation details.

Practical Implications for Architects

This shift demands new architectural thinking:

Design for Capability, Not Service

Instead of asking “what services do we need?”, ask “what capabilities should our AI gateway expose?” The Gemini File Search API integration handbook demonstrates this mindset – focus on what users can do, not how services are structured.

Intelligence as a First-Class Citizen

AI capabilities should be primary API concerns, not afterthoughts. The traditional “add AI later” approach becomes technical debt when AI gateways become your primary interface.

Plan for Agentic Consumption

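Your APIs will increasingly be consumed by AI agents, not just human developers. This requires different documentation, error handling, and capability descriptions: precise, machine-readable statements of what each operation does and when to use it, and errors that tell the caller whether to retry and what to try instead.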
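As a rough illustration of agent-oriented documentation and error handling, the sketch below pairs a capability description (name, when-to-use guidance, JSON-Schema-style parameters) with structured errors that say whether a retry makes sense. The `refund_order` capability and the error fields are hypothetical; the description’s shape resembles the function declarations used for LLM tool calling, but the exact fields a given platform expects may differ.

```python
import json
from typing import Set

# A capability described for agents: a name, a plain-language purpose that
# says when to use it, and a JSON-Schema-style parameter block. Field names
# are illustrative, not any specific platform's required format.
REFUND_ORDER = {
    "name": "refund_order",
    "description": (
        "Refund a customer order in full. Only call after confirming the "
        "order exists; calling twice for the same order is an error."
    ),
    "parameters": {
        "type": "object",
        "properties": {
            "order_id": {"type": "string", "description": "Order ID, e.g. 'ord_123'."},
            "reason": {"type": "string", "enum": ["damaged", "late", "other"]},
        },
        "required": ["order_id", "reason"],
    },
}

ALLOWED_REASONS = REFUND_ORDER["parameters"]["properties"]["reason"]["enum"]


def agent_error(code: str, message: str, retryable: bool, hint: str) -> str:
    """An error an agent can act on: a stable code, whether to retry, what to do next."""
    return json.dumps({"error": {"code": code, "message": message,
                                 "retryable": retryable, "hint": hint}})


def refund_order(order_id: str, reason: str, refunded: Set[str]) -> str:
    if reason not in ALLOWED_REASONS:
        return agent_error("INVALID_ARGUMENT", f"unknown reason {reason!r}", False,
                           "Use one of: " + ", ".join(ALLOWED_REASONS) + ".")
    if order_id in refunded:
        return agent_error("ALREADY_REFUNDED", f"{order_id} was already refunded", False,
                           "Do not retry; tell the user the refund already exists.")
    refunded.add(order_id)
    return json.dumps({"ok": True, "order_id": order_id})


refunded_orders: Set[str] = set()
first = json.loads(refund_order("ord_1", "late", refunded_orders))
second = json.loads(refund_order("ord_1", "late", refunded_orders))
```

The `hint` field carries the kind of guidance a human would find in prose docs, placed where an agent will actually read it: in the response.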

The Orchestration Layer Eats Everything

What’s emerging is an orchestration-first architecture. Instead of composing microservices through explicit API calls, you’re composing capabilities through natural language instructions to an AI gateway.

This explains why Gemini 3 emphasizes “vibe coding” and single-prompt application generation. The value moves from service implementation to capability orchestration.

The Coming Integration Crisis

This shift creates immediate tension in existing architectures. Legacy microservices weren’t designed for AI gateway consumption. Their fine-grained boundaries often create impedance mismatches when exposed through capability-oriented interfaces.

The solution isn’t to rewrite everything, but to think differently about API design. Your AI gateway should expose business capabilities, not technical services. This might mean aggregating multiple microservices behind capability-focused endpoints.
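A minimal sketch of that aggregation in Python: two fine-grained service clients kept as they are, and one capability-level function that an AI gateway could expose in their place. `AuthClient`, `ProfileClient`, `SignInResult`, and `authenticate_and_get_profile` are all hypothetical, with in-memory dictionaries standing in for the real services.

```python
from dataclasses import dataclass
from typing import Dict, Optional


class AuthClient:
    """Hypothetical client for a fine-grained authentication service."""

    def __init__(self, passwords: Dict[str, str]):
        self._passwords = passwords

    def login(self, username: str, password: str) -> Optional[str]:
        # Returns a session token, or None when the credentials are wrong.
        if self._passwords.get(username) == password:
            return f"session-{username}"
        return None


class ProfileClient:
    """Hypothetical client for a separate user-profile service."""

    def __init__(self, profiles: Dict[str, dict]):
        self._profiles = profiles

    def get_profile(self, session_token: str) -> dict:
        return self._profiles[session_token.removeprefix("session-")]


@dataclass
class SignInResult:
    ok: bool
    profile: Optional[dict] = None
    error: Optional[str] = None


def authenticate_and_get_profile(auth: AuthClient, profiles: ProfileClient,
                                 username: str, password: str) -> SignInResult:
    """One business capability ("sign the user in") composed from two services."""
    token = auth.login(username, password)
    if token is None:
        return SignInResult(ok=False, error="invalid credentials")
    return SignInResult(ok=True, profile=profiles.get_profile(token))


auth = AuthClient({"ada": "s3cret"})
profiles = ProfileClient({"ada": {"name": "Ada", "plan": "pro"}})
signed_in = authenticate_and_get_profile(auth, profiles, "ada", "s3cret")
rejected = authenticate_and_get_profile(auth, profiles, "ada", "wrong")
```

The legacy services stay untouched; only the capability-facing layer is new, which keeps the migration incremental rather than a rewrite.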

What This Means for Developers

For developers, this changes everything:

Natural Language Becomes the API

Instead of learning REST endpoints and GraphQL schemas, developers describe intent. The AI gateway handles the translation to underlying service calls.

Service Boundaries Become Fluid

The distinction between “authentication service” and “user profile service” matters less than the capability to “authenticate user and retrieve profile.”

Debugging Shifts Focus

Instead of tracing requests through service meshes, you’ll debug capability delivery and reasoning chains.
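What debugging a reasoning chain might look like in practice: record each step a capability takes (its plan, each tool call, the final answer) and look for the first step that went wrong, rather than following a request hop by hop. The `CapabilityTrace` class and the tool names in the example trace (`list_revisions`, `query_latency`) are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Step:
    kind: str      # e.g. "plan", "tool_call", "answer"
    detail: str
    ok: bool = True


@dataclass
class CapabilityTrace:
    capability: str
    steps: List[Step] = field(default_factory=list)

    def record(self, kind: str, detail: str, ok: bool = True) -> None:
        self.steps.append(Step(kind, detail, ok))

    def first_failure(self) -> Optional[int]:
        """Index of the first failing step, or None if every step succeeded."""
        for i, step in enumerate(self.steps):
            if not step.ok:
                return i
        return None

    def render(self) -> str:
        # One line per step: index, status, step kind, detail.
        return "\n".join(
            f"{i:02d} {'OK ' if s.ok else 'ERR'} {s.kind:<9} {s.detail}"
            for i, s in enumerate(self.steps)
        )


# Hypothetical trace of a "diagnose latency" capability.
trace = CapabilityTrace("diagnose_latency")
trace.record("plan", "check recent revisions, then p99 latency, then logs")
trace.record("tool_call", "list_revisions(service='checkout')")
trace.record("tool_call", "query_latency(window='1h')", ok=False)
trace.record("answer", "latency regression caused by the latest revision")
print(trace.render())
```

Here `first_failure()` points at step 2: the answer was produced after a failed tool call, which is the kind of defect a capability-level trace makes visible.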

The Future Is Gateway-Centric

Gemini 3 isn’t an isolated phenomenon. It’s part of a broader trend where AI capabilities are becoming the primary interface to cloud services. We’re moving from service-oriented architecture to capability-oriented architecture, with AI gateways as the orchestrators.

The implications are profound. Service discovery becomes capability discovery. API versioning becomes capability evolution. Load balancing becomes intelligence routing.
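Intelligence routing could be as simple as sending each request to the cheapest model tier that can handle it. The sketch below does that with a deliberately crude complexity score; the tier names, the ceilings, and the `estimate_complexity` heuristic are all hypothetical and would need tuning against real traffic.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class ModelTier:
    name: str             # hypothetical tier label, not a real model ID
    max_complexity: int   # highest complexity score this tier should take


# Assumption: a lower complexity ceiling means a cheaper, faster tier.
TIERS = (ModelTier("fast-tier", 3), ModelTier("reasoning-tier", 10))


def estimate_complexity(prompt: str, tools_needed: int) -> int:
    # Crude, hypothetical heuristic: multi-tool plans and long prompts score higher.
    return tools_needed * 2 + (1 if len(prompt) > 500 else 0)


def route(prompt: str, tools_needed: int, tiers: Sequence[ModelTier] = TIERS) -> str:
    """Send each request to the cheapest tier whose ceiling covers it."""
    score = estimate_complexity(prompt, tools_needed)
    for tier in sorted(tiers, key=lambda t: t.max_complexity):
        if score <= tier.max_complexity:
            return tier.name
    # Nothing covers it: fall back to the most capable tier.
    return max(tiers, key=lambda t: t.max_complexity).name
```

Where a classic load balancer spreads identical requests across identical instances, this routes different requests to different levels of capability.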

The microservices pattern served us well, but its time is passing. The future belongs to capability gateways powered by AI – and Gemini 3 shows us exactly what that future looks like. The question isn’t whether you’ll adopt this pattern, but when your competitors will force you to.