When Cloudflare’s global infrastructure stumbles, the internet stumbles with it. On November 18, 2025, a seemingly innocuous database permission change snowballed into what CEO Matthew Prince called “Cloudflare’s worst outage since 2019” – a multi-hour cascade that brought major portions of the network to their knees.

The incident timeline reveals a classic distributed systems failure cascade. At 11:20 UTC, Cloudflare’s Statuspage reported the Global Network experiencing issues. For the next three hours, services blinked in and out: WARP access disabled in London, dashboard failures, bot management systems faltering. The root cause wasn’t a malicious actor or external attack, but a perfect storm of architectural oversights that started with a simple database query.

The Butterfly Effect: From Database Permissions to Global Blackout

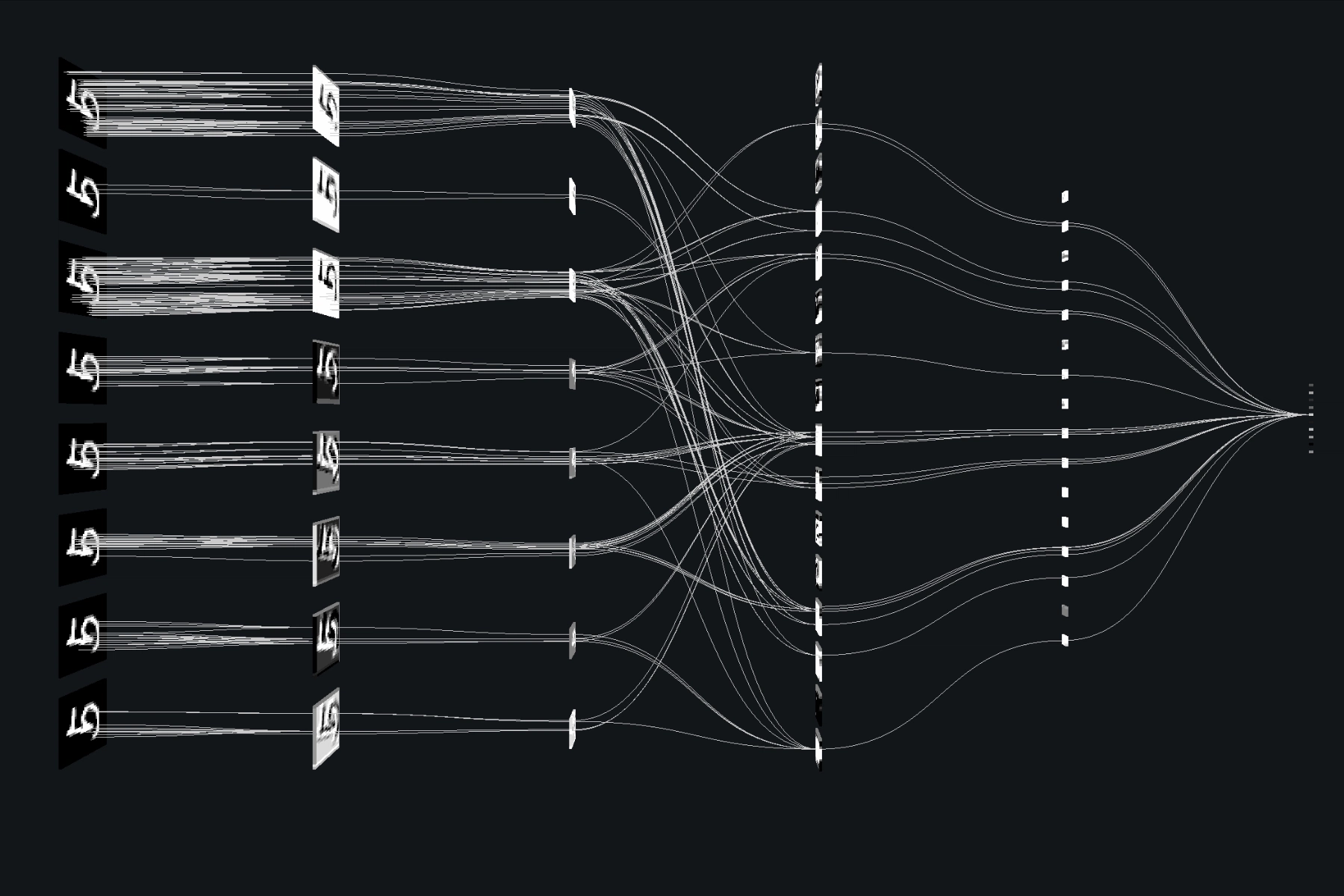

The outage traces back to a routine security update intended to rotate database credentials. As part of this security work, a Cloudflare engineer modified permissions on their ClickHouse analytics database. This innocent change exposed additional database tables to the ML bot management feature file generation system.

Suddenly, what should have been a narrowly scoped query turned into a data explosion: the system began reading from additional, irrelevant tables, and the generated feature file grew to roughly double its expected size.

The critical failure occurred in their Rust-based FL2 proxy service, which featured this now-infamous line:

    let feature_values = features.append_with_names(&mut write_features).unwrap();

That .unwrap() – Rust’s equivalent of assuming perfection – became the single point of failure affecting 20% of internet traffic. When the oversized configuration file arrived, the system attempted to parse more features than its hardcoded limit allowed, causing an immediate panic. Instead of graceful degradation or error recovery, thousands of global proxy instances crashed simultaneously.
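For contrast, here is a minimal sketch of the same check with the error surfaced to the caller instead of unwrapped. It is not Cloudflare's code: `MAX_FEATURES`, `FeatureSet`, `FeatureError`, `parse_features`, and `reload_features` are hypothetical names, and the limit value is an assumption chosen for illustration. The point is only that an oversized file can be rejected while the previously loaded feature set keeps serving traffic.

```rust
use std::fmt;

/// Hypothetical cap on preallocated feature slots (the value is an assumption).
const MAX_FEATURES: usize = 200;

#[derive(Debug, PartialEq)]
enum FeatureError {
    TooManyFeatures { got: usize, limit: usize },
}

impl fmt::Display for FeatureError {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        match self {
            FeatureError::TooManyFeatures { got, limit } => {
                write!(f, "feature file has {} entries, limit is {}", got, limit)
            }
        }
    }
}

#[derive(Debug, Clone, PartialEq)]
struct FeatureSet {
    names: Vec<String>,
}

/// Returns an error for an oversized file instead of panicking.
fn parse_features(names: &[&str]) -> Result<FeatureSet, FeatureError> {
    if names.len() > MAX_FEATURES {
        return Err(FeatureError::TooManyFeatures {
            got: names.len(),
            limit: MAX_FEATURES,
        });
    }
    Ok(FeatureSet {
        names: names.iter().map(|s| s.to_string()).collect(),
    })
}

/// Keeps the current feature set when the incoming one is rejected,
/// and hands the error back so the caller can log or alert on it.
fn reload_features(
    current: FeatureSet,
    incoming: &[&str],
) -> (FeatureSet, Option<FeatureError>) {
    match parse_features(incoming) {
        Ok(next) => (next, None),
        Err(e) => (current, Some(e)),
    }
}
```

Whether a proxy should fail open or fail closed on a bad bot-scoring file is a product decision; the sketch only shows that the decision can be made explicitly rather than by a panic.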

The System Architecture Blind Spot

The bot management system’s design requirements created inherent risk. As one FAANG incident response veteran noted on Hacker News, “Their bot management system is designed to push configuration out to the entire network rapidly” rather than rolling out changes gradually. This rapid propagation capability, while essential for quickly responding to attacks, created risk disproportionate to their operational visibility and rollback capabilities.

What made this particularly painful was the time-to-identification breakdown. Initial assumptions pointed toward a coordinated cyberattack, consuming two precious hours while services remained crippled. The team disabled WARP access in London and scrambled to understand why error rates remained elevated globally. Only after eliminating external theories did they trace the problem back to the configuration file propagation system.

The distributed nature of the failure amplified recovery complexity. Cloudflare’s status updates reveal the painstaking restoration process:

“We continue to see errors drop as we work through services globally and clearing remaining errors and latency.”

The recovery wasn’t instantaneous. Even after identifying the problematic configuration, engineers needed to reboot processes across their global fleet to clear corrupted state and restore normal operations.

The Swiss Cheese Model of Failure

This wasn’t one failure but a sequence of defensive gaps:

- The Query Change: Database permission updates exposed unintended tables

- The Configuration Pipeline: Lack of validation on generated feature files

- The Code Assumption: Hardcoded limits with .unwrap() instead of graceful error handling

- The Operational Response: Delayed recognition of configuration-based failure

- The Recovery Gap: No rapid rollback mechanism for poison configurations

Each gap alone might have been survivable, but together they created the conditions described by the “Swiss cheese model” of failure: an incident gets through only when the holes in multiple layers of defense line up.
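The configuration pipeline layer is the easiest one to illustrate. The sketch below shows the kind of pre-publication check that could reject a feature file before it leaves the generator. Everything in it is an assumption made for illustration: the `validate_feature_file` function, the `ValidationError` variants, and the 50% growth threshold are hypothetical and do not come from Cloudflare's pipeline.

```rust
use std::collections::HashSet;

#[derive(Debug, PartialEq)]
enum ValidationError {
    DuplicateFeature(String),
    TooManyFeatures { got: usize, limit: usize },
    SuspiciousGrowth { previous: usize, got: usize },
}

/// Checks a generated feature list before it is published.
/// `previous_len` is the size of the last published file; `limit` is the
/// maximum the consumers are known to accept.
fn validate_feature_file(
    names: &[String],
    previous_len: usize,
    limit: usize,
) -> Result<(), ValidationError> {
    // Duplicate rows are a strong hint that the query read from extra tables.
    let mut seen = HashSet::new();
    for name in names {
        if !seen.insert(name.as_str()) {
            return Err(ValidationError::DuplicateFeature(name.clone()));
        }
    }

    // Never publish more entries than the consumers can hold.
    if names.len() > limit {
        return Err(ValidationError::TooManyFeatures {
            got: names.len(),
            limit,
        });
    }

    // Reject growth of more than 50% over the last published file
    // (the threshold is an assumption; tune it to normal churn).
    if previous_len > 0 && names.len() * 2 > previous_len * 3 {
        return Err(ValidationError::SuspiciousGrowth {
            previous: previous_len,
            got: names.len(),
        });
    }

    Ok(())
}
```

A check like this runs once at the producer, which is cheaper than relying on every consumer around the world to handle a bad file correctly.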

BGP Beyond the Web

While BGP configurations weren’t directly implicated in this particular failure, the outage’s global impact demonstrates how tightly coupled modern internet infrastructure has become. When Cloudflare stumbles, the effects ripple across DNS resolution, routing decisions, and global traffic flow – regardless of the specific technical cause.

The most telling aspect? For nearly two hours, engineers suspected a sophisticated DDoS attack from the Aisuru botnet rather than their own configuration system. This reveals how external threats have become the default hypothesis when services fail at scale.

Recovery Lessons for Distributed Systems

Cloudflare’s response highlights several critical learnings for organizations operating at internet scale:

Rapid global configuration deployment demands rapid rollback capability – if you can propagate changes in minutes, you need equally fast recovery mechanisms. The three-hour resolution window for what should have been a near-instant configuration revert represents a significant operational gap.
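One common way to make rollback as fast as rollout is to keep the previously active configuration next to the new one, so reverting is a local swap rather than a fresh deployment. The sketch below assumes that approach; `ConfigStore` and its methods are hypothetical names, and it treats the previously active version as "known good" without verifying that.

```rust
/// Holds the active configuration plus the version it replaced.
struct ConfigStore<T> {
    active: T,
    last_known_good: Option<T>,
}

impl<T> ConfigStore<T> {
    fn new(initial: T) -> Self {
        Self {
            active: initial,
            last_known_good: None,
        }
    }

    fn active(&self) -> &T {
        &self.active
    }

    /// Promotes `candidate` and retains the previous version for rollback.
    fn apply(&mut self, candidate: T) {
        let previous = std::mem::replace(&mut self.active, candidate);
        self.last_known_good = Some(previous);
    }

    /// Restores the retained version. Returns false if there is nothing
    /// to roll back to.
    fn rollback(&mut self) -> bool {
        match self.last_known_good.take() {
            Some(previous) => {
                self.active = previous;
                true
            }
            None => false,
        }
    }
}
```

Because the retained copy lives in the same process, the revert does not depend on the distribution system that delivered the bad file in the first place.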

Monitoring and observability must correlate configuration changes with system behavior – had alerts been wired between configuration updates and system stability metrics, the connection might have been identified much sooner.
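As a rough illustration of that kind of correlation, the sketch below flags any configuration change that is followed, within a time window, by an error-rate sample above a threshold. The types `ConfigChange` and `ErrorSample`, the function `suspect_changes`, and the window and threshold parameters are all hypothetical; a real system would pull these from its deployment log and metrics store.

```rust
#[derive(Debug, Clone, PartialEq)]
struct ConfigChange {
    id: String,
    at_secs: u64,
}

#[derive(Debug, Clone, PartialEq)]
struct ErrorSample {
    at_secs: u64,
    error_rate: f64,
}

/// Returns the changes that were followed within `window_secs` by at least
/// one sample whose error rate exceeds `threshold`.
fn suspect_changes<'a>(
    changes: &'a [ConfigChange],
    samples: &[ErrorSample],
    window_secs: u64,
    threshold: f64,
) -> Vec<&'a ConfigChange> {
    changes
        .iter()
        .filter(|change| {
            samples.iter().any(|sample| {
                sample.at_secs >= change.at_secs
                    && sample.at_secs - change.at_secs <= window_secs
                    && sample.error_rate > threshold
            })
        })
        .collect()
}
```

This is correlation, not proof of cause, but putting the most recent configuration pushes next to the error graph gives responders an internal hypothesis to test before they go looking for an attacker.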

Error handling in critical paths requires more than assumptions – the .unwrap() that caused this cascade represents a broader pattern of optimistic programming that fails badly at scale.

The incident wasn’t just about technical debt – it was about operational assumptions. When your infrastructure serves 20% of internet traffic, every decision carries global consequences. Cloudflare’s commitment to rapid post-mortem publication demonstrates maturity, but the architectural questions linger: How do you balance rapid response capabilities with rollback safety? When does aggressive optimization create unacceptable risk?

As one commenter noted, “You can write the safest code in the world, but if you’re shipping config changes globally every few minutes without a robust rollback plan or telemetry that pinpoints when things go sideways, you’re flying blind.”

The unwrap heard around the world serves as a stark reminder that in distributed systems, there are no small changes – only those whose consequences haven’t yet been discovered.