If you’ve ever wondered why your database can find one record among billions in milliseconds while your fancy new data structure struggles, welcome to the brutal reality of disk I/O optimization. B-Trees aren’t just surviving, they’re thriving in production databases everywhere from your small PostgreSQL instance to global-scale MySQL deployments.

The Disk I/O Problem That Binary Trees Can’t Solve

Let’s start with what doesn’t work: binary search trees on disk. In memory, binary trees are elegant, O(log n) search complexity sounds great until you factor in disk access times.

The numbers don’t lie:

- Memory access: ~0.0001 milliseconds (pointer dereference)

- SSD access: ~0.1 milliseconds

- HDD access: ~10 milliseconds

For a binary tree with 1 million records (height ≈ 20), you’d need 20 disk seeks. On HDDs, that’s 200ms, 100,000x slower than the same operation in memory. But the real killer comes at scale: for 1 billion records, that’s 300ms on HDDs, turning what should be instant lookups into noticeable delays.

# Binary tree disaster on disk

def estimate_binary_tree_performance(num_records: int, storage_type: str):

height = math.ceil(math.log2(num_records))

seek_time = 10 if storage_type == "HDD" else 0.1

return height * seek_time

# 1 million records: 20 seeks × 10ms = 200ms (HDD)

# 1 billion records: 30 seeks × 10ms = 300ms (HDD)The fundamental problem? Binary trees have fanout of only 2. We need hundreds of children per node to minimize those expensive disk seeks.

How B-Trees Conquered Disk Storage

Enter the B-Tree, a self-balancing tree optimized for disk access where each node fits perfectly in one disk block (typically 4KB to 16KB). The brilliance is simple: since you have to read the entire block anyway, pack as many keys as possible into it.

B-Tree Structure That Actually Works:

- Root node: Single entry point

- Internal nodes: Guide searches with separator keys

- Leaf nodes: Contain actual data or pointers

- High fanout: 100-1000 children per node

Let’s compare the performance improvement:

def estimate_btree_height(num_records: int, fanout: int):

return math.ceil(math.log(num_records, fanout))

# B-Tree with fanout=100

print(f"1 million records: {estimate_btree_height(1_000_000, 100)} levels")

print(f"1 billion records: {estimate_btree_height(1_000_000_000, 100)} levels")The result? 1 million records take only 3-4 levels, and 1 billion records need just 5 levels. That’s 3-5 disk seeks instead of 20-30, a 6x improvement even before considering the sequential reads within each block.

Real-World Database Implementation Secrets

Every major database you use leverages B-Trees (or their B+Tree variant) under the hood:

MySQL InnoDB uses 16KB pages with fanout around 100-200. The primary key becomes a clustered index where leaf nodes contain actual row data, while secondary indexes store pointers back to the primary key.

PostgreSQL defaults to B-Trees with 8KB pages, while SQLite uses B-Trees for both tables and indexes with its “r-tree” implementation (despite the confusing name).

-- This creates a B-Tree index in MySQL/PostgreSQL

CREATE INDEX idx_users_email ON users(email);

-- And this query leverages it efficiently

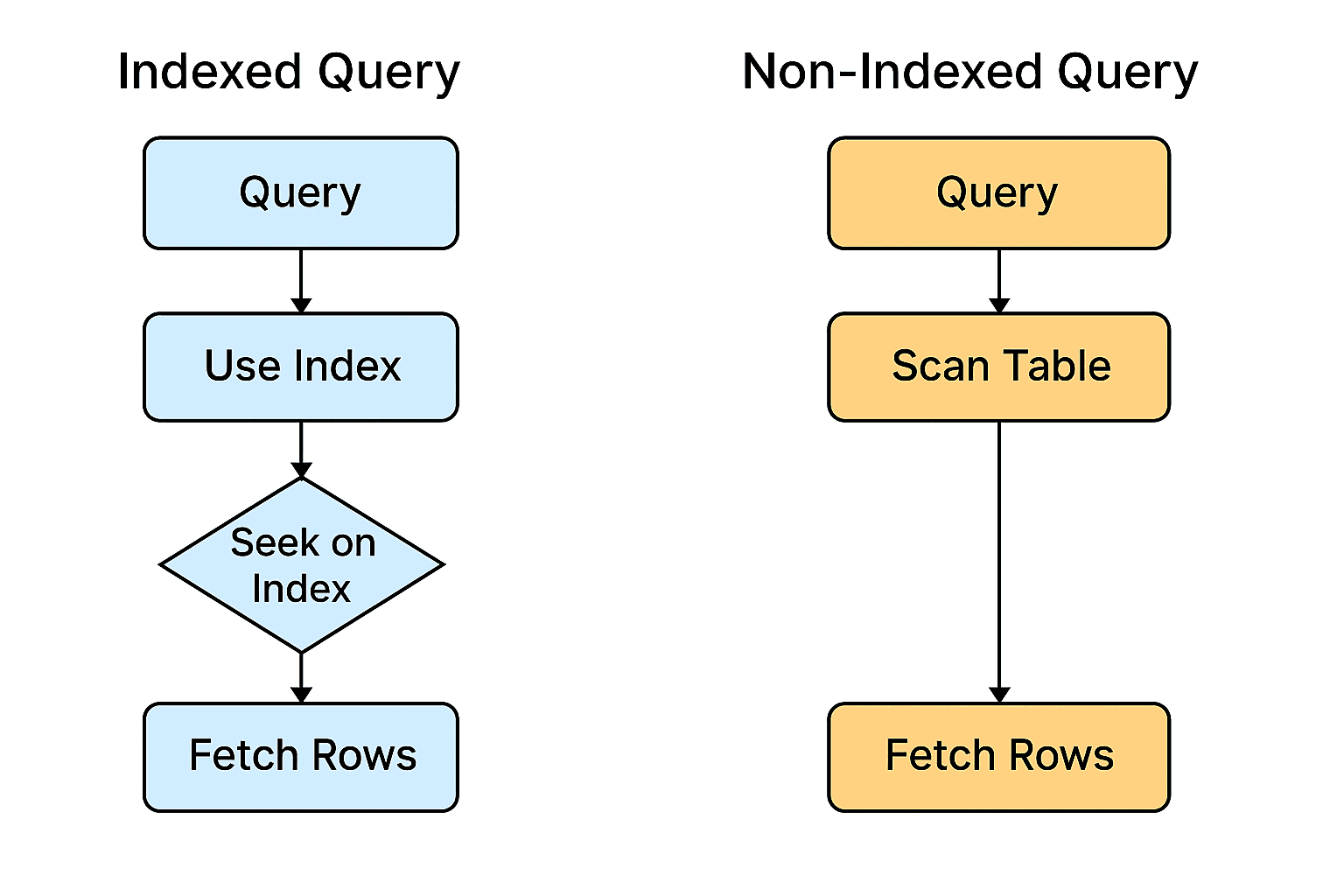

SELECT * FROM users WHERE email = 'alice@example.com';

-- Only 3-4 disk reads instead of scanning millions of rowsThe performance difference is staggering: milliseconds versus seconds for large tables.

The Modern Challenger: LSM-Trees

Enter Log-Structured Merge Trees, the “modern” alternative championed by systems like RocksDB, Cassandra, and LevelDB. LSM-trees optimize for write-heavy workloads by batching writes in memory and flushing them sequentially to disk.

LSM-trees prioritize write throughput by avoiding in-place updates. They buffer writes in an in-memory memtable and periodically flush sorted runs (SSTables) to disk. This approach eliminates random writes but complicates reads, you might need to check multiple SSTables to find the latest value.

Why B-Trees Keep Winning the War

Despite LSM-tree hype, B-Trees maintain their dominance for several key reasons:

1. Predictable Read Performance

B-Trees provide consistent O(log n) read times regardless of write patterns. LSM-trees can suffer from read amplification where queries might need to check multiple SSTable files.

# B-Tree: predictable 3-4 seeks regardless of write history

# LSM-Tree: could be 1 seek (memtable) or 10+ seeks (multiple SSTables)2. Superior Range Query Support

B-Trees naturally support range queries efficiently because leaf nodes are linked sequentially. Finding all records between two values is trivial.

-- B-Tree excels at range queries

SELECT * FROM orders

WHERE order_date BETWEEN '2024-01-01' AND '2024-12-31'

ORDER BY order_date;

-- Leaf nodes are already sorted and linkedLSM-trees require merging results from multiple SSTables, making range queries more expensive.

3. Mature Concurrency Control

Decades of production use have refined B-Tree concurrency mechanisms. Databases implement sophisticated locking and MVCC models that work seamlessly with B-Trees. The transactional guarantees that applications rely on, ACID properties, are fundamentally built around B-Tree semantics.

4. The SSD Revolution Didn’t Kill Them

You might think SSDs would eliminate B-Tree advantages by making random I/O cheaper. But B-Trees actually benefit more from SSDs than LSM-trees do. While both benefit from faster storage, B-Trees eliminate the worst-case scenarios of HDD seeks entirely on SSDs.

When LSM-Trees Actually Win

LSM-trees aren’t useless, they dominate specific use cases:

- Write-heavy workloads: Applications logging high-velocity data streams

- Append-oriented data: Time-series, event streaming, and logging systems

- SSD-based storage: Where sequential writes still outperform random writes

But for the bread-and-butter of application databases, mixed read/write workloads with complex queries, B-Trees remain king.

The Write Amplification Myth

One common criticism of B-Trees is write amplification, where one logical write triggers multiple physical writes due to node splits. But modern implementations have largely mitigated this:

Write-optimized B-Trees use techniques like copy-on-write, append-only variants, and sophisticated caching to reduce the overhead. Plus, the “amplification” cost often exaggerates real-world impact, most applications aren’t write-bound to begin with.

# Real-world write amplification is often 2-3x, not catastrophic

# And read performance remains consistently excellentThe Future Isn’t Binary

The real story isn’t B-Trees versus LSM-trees, it’s about choosing the right tool. Modern databases increasingly offer both:

- MySQL with InnoDB (B-Tree) and MyRocks (LSM-tree)

- PostgreSQL with its extensible storage engine architecture

- Specialized databases optimized for specific workloads

The “revolutionary” LSM-tree mostly revolutionized paperwork for database administrators managing write-heavy specialized systems.

Bottom Line: Why B-Trees Endure

After 50+ years, B-Trees remain dominant because they solve the fundamental constraints of persistent storage:

- They match disk block sizes – maximizing data per I/O operation

- They provide predictable performance – critical for user-facing applications

- They support complex query patterns – range queries, sorting, and aggregations

- They integrate seamlessly with transactions – the foundation of reliable applications

Next time your database serves a query in milliseconds instead of minutes, thank the unkillable B-Tree, the 1970s data structure that refuses to retire, quietly powering everything from your local SQLite database to global-scale e-commerce platforms.

The pattern holds true: sometimes the boring, battle-tested solution outperforms the shiny new alternative, especially when it’s been optimized for half a century against real production workloads.