The illusion of privacy in AI chat just shattered. Microsoft researchers have revealed “Whisper Leak” – a side-channel attack that can infer your conversation topics from encrypted AI chat traffic with over 90% accuracy. Forget reading your messages, attackers can now know what you’re discussing just by watching the timing and size of data packets.

The Illusion of Encrypted Privacy

Here’s the uncomfortable truth: when you chat with ChatGPT, Claude, or any of the major AI assistants, your HTTPS-encrypted conversations aren’t as private as you think. While the content remains scrambled, the metadata tells a revealing story.

The attack exploits how AI models generate responses token-by-token in streaming mode. Each topic – from mental health discussions to financial advice – produces unique traffic patterns in packet sizes and inter-arrival times. As Microsoft researchers explain in their technical paper, these patterns create “digital fingerprints” that machine learning classifiers can recognize with alarming precision.

How Whisper Leak Actually Works

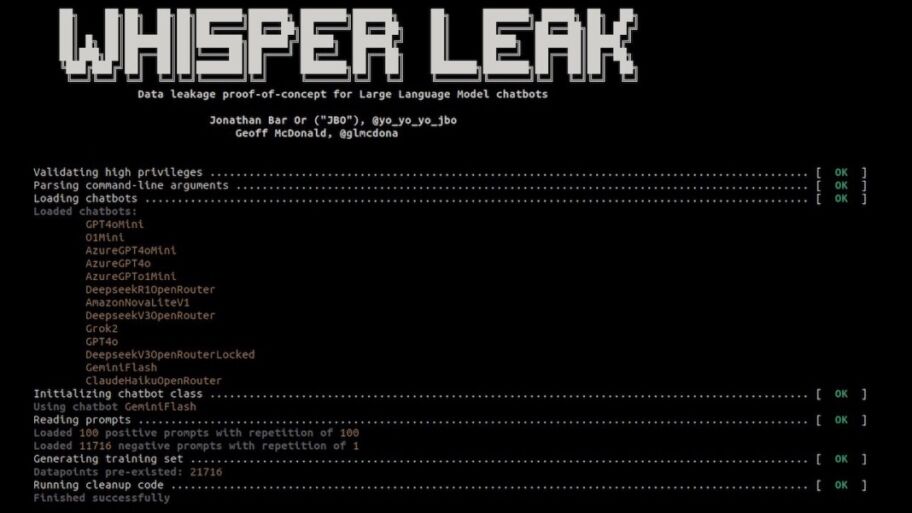

The attack methodology is elegantly simple yet devastatingly effective. Researchers trained classifiers on three machine learning architectures – LightGBM, LSTM-based models, and BERT – to distinguish sensitive topics from general traffic.

For their proof-of-concept, they used “legality of money laundering” as the target topic, generating 100 semantically similar variants like “Are there international laws against money laundering?” These were mixed with 11,716 unrelated questions from the Quora dataset to simulate realistic background noise.

The results were staggering. Using just packet sizes and timing data collected via tcpdump, their models achieved near-perfect classification across multiple AI platforms.

The Chilling Real-World Effectiveness

What makes Whisper Leak particularly alarming is its performance in realistic surveillance scenarios. In simulations with a 10,000-to-1 ratio of background noise to target conversations, the attack identified sensitive topics with 100% precision for 17 of the 28 tested models while still detecting 5-20% of all target conversations.

The performance metrics speak for themselves. Models from Mistral, xAI, OpenAI, and others showed attack success rates (AUPRC) above 98% across multiple classifiers:

| Provider-Model | BERT | LSTM | LightGBM | Best |

|---|---|---|---|---|

| mistral-large | 98.8% | 99.9% | 95.8% | 100.0% |

| microsoft-deepseek-r1 | 98.6% | 99.9% | 94.8% | 99.9% |

| xai-grok-3-mini-beta | 99.1% | 99.9% | 97.2% | 99.9% |

| openai-gpt-4o-mini | 97.5% | 98.2% | 98.6% | 98.6% |

As the researchers noted, this means “a cyberattacker could operate with high confidence, knowing they’re not wasting resources on false positives.”

The Scale of the Problem

This isn’t a theoretical vulnerability affecting a few obscure models. Microsoft tested 28 commercially available LLMs from all major providers. The attack worked across OpenAI’s GPT models, Anthropic’s Claude, Google’s Gemini, Microsoft’s own offerings, and numerous others.

The practical implications are massive. Imagine a government agency monitoring internet traffic to identify citizens discussing protest organization, journalists researching sensitive topics, or healthcare providers consulting AI about patient conditions. Even with perfect encryption, these conversations become transparent to anyone with network access.

This Is Just the Beginning

The attack effectiveness isn’t static – it improves with time and data collection. As the Microsoft team discovered, “attack effectiveness improves as cyberattackers collect more training data.”

This means that nation-state actors or persistent adversaries could develop even more accurate classifiers over time. The researchers warned that “combined with more sophisticated attack models and the richer patterns available in multi-turn conversations or multiple conversations from the same user, this means a cyberattacker with patience and resources could achieve higher success rates than our initial results suggest.”

The Patchwork Response

Microsoft began responsible disclosure in June 2025, and the industry response has been mixed. OpenAI, Microsoft, Mistral, and xAI have deployed mitigations, while other providers have been slower to respond or have declined to implement fixes entirely.

OpenAI and Microsoft implemented an “obfuscation” field that adds random text sequences of variable length to each response. This masks token lengths and substantially reduces attack effectiveness. Microsoft confirmed their Azure implementation “successfully reduces attack effectiveness to levels we consider no longer a practical risk.”

Mistral took a similar approach, introducing a new “p” parameter that achieves comparable protection:

However, these mitigations come with trade-offs. Random padding adds computational overhead, token batching can degrade the real-time chat experience, and packet injection can triple bandwidth consumption. This highlights the fundamental tension between privacy and performance in streaming AI systems.

What You Can Do Today

While this is primarily an issue for AI providers to address, users concerned about privacy have options:

- Avoid sensitive topics on untrusted networks – Public Wi-Fi becomes significantly riskier

- Use VPN services – Adds an extra layer of traffic obfuscation

- Choose providers with mitigations – OpenAI, Microsoft, and Mistral have implemented protections

- Use non-streaming models – Batch processing eliminates the timing side-channel

- Stay informed – Monitor security updates from your AI provider

A Fundamental Rethinking of AI Privacy

The Whisper Leak vulnerability exposes a deeper truth about AI security: encryption alone cannot guarantee privacy in the age of metadata analysis. As Security Affairs notes, this flaw “poses serious risks to the privacy of user and enterprise communications with chatbots.”

This isn’t just another bug to patch – it represents a paradigm shift in how we think about AI communication security. The streaming nature of modern LLM interactions, designed for user experience, creates inherent privacy trade-offs that encryption alone cannot solve.

As The Hacker News points out, this comes amid growing concerns about AI chatbot security, with recent research showing these systems “are vulnerable to manipulation through extended back-and-forth conversations.”

The era of assuming encrypted equals private is over. As AI becomes embedded in healthcare, legal, and personal conversations, we need a fundamental rethinking of what privacy means in AI-assisted communication. The patterns matter as much as the content, and the metadata can be just as revealing as the message itself.