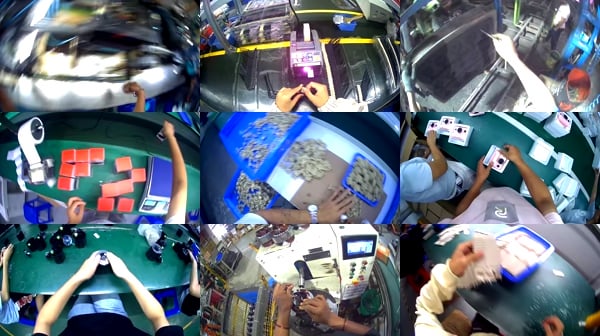

Forget simulated environments and lab-created tasks. The most ambitious real-world robotics dataset ever created just dropped, and it didn’t come from a university research lab; it came straight from the factory floor. Egocentric-10K represents a radical departure from everything that came before it: 10,000 hours of first-person footage captured from 2,138 actual factory workers across real industrial environments, totaling 1.08 billion frames of pure industrial reality.

This isn’t just another dataset release; it’s a statement about where embodied AI is heading. When robotics companies insist their main limitation is data, and then someone drops 16.4 terabytes of real-world industrial footage into the open-source community, you pay attention.

The Factory Floor Goes Digital: What Exactly Is Egocentric-10K?

The numbers alone should make any computer vision researcher do a double-take:

| Attribute | Value |
|---|---|
| Total Hours | 10,000 |
| Total Frames | 1.08 billion |
| Video Clips | 192,900 |
| Median Clip Length | 180.0 seconds |
| Workers | 2,138 |
| Mean Hours per Worker | 4.68 |
| Storage Size | 16.4 TB |
| Resolution | 1080p (1920×1080) |
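These headline figures can be cross-checked with a little arithmetic. The sketch below is only a sanity check, not part of the dataset tooling: it assumes a constant 30 fps (the value shown in the dataset's sample metadata record) and treats 16.4 TB as decimal terabytes, which is a guess about how the storage size was reported.

```python
# Sanity-check the published dataset statistics against each other.
TOTAL_HOURS = 10_000
WORKERS = 2_138
CLIPS = 192_900
FPS = 30                  # assumption: constant frame rate, as in the sample metadata
STORAGE_BYTES = 16.4e12   # assumption: "TB" means decimal terabytes

total_seconds = TOTAL_HOURS * 3600
total_frames = total_seconds * FPS
mean_hours_per_worker = TOTAL_HOURS / WORKERS
mean_clip_seconds = total_seconds / CLIPS
avg_bitrate_mbps = STORAGE_BYTES * 8 / total_seconds / 1e6

print(f"total frames:          {total_frames:,}")
print(f"mean hours per worker: {mean_hours_per_worker:.2f}")
print(f"mean clip length:      {mean_clip_seconds:.1f} s")
print(f"average bitrate:       {avg_bitrate_mbps:.2f} Mbit/s")
```

The frame count and the mean hours per worker reproduce the table's 1.08 billion and 4.68. The mean clip length comes out a little above the 180-second median, which is what you would expect if a minority of clips run much longer than the typical one.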

But the real story isn’t in the scale; it’s in the methodology. Each worker wore a monocular head-mounted camera with a 128° horizontal field of view, capturing exactly what human operators see as they perform actual manufacturing tasks. The dataset spans 85 different factories, creating what’s essentially a visual encyclopedia of industrial work from the worker’s perspective.

Unlike previous egocentric datasets focused on daily activities or kitchen tasks, this one is presented as the first massive-scale collection captured exclusively in real industrial environments. According to the dataset documentation, it is stored in WebDataset format and organized by factory and worker, with each TAR file containing paired video and metadata files. A metadata record looks like this:

```json
{
  "factory_id": "factory_002",
  "worker_id": "worker_002",
  "video_index": 0,
  "duration_sec": 1200.0,
  "width": 1920,
  "height": 1080,
  "fps": 30.0,
  "size_bytes": 599697350,
  "codec": "h265"
}
```

This level of organization means researchers can train models on specific factory environments or worker patterns, a granularity that’s unprecedented in industrial AI.
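To make the "paired video and metadata files" idea concrete, here is a minimal sketch of how a WebDataset-style shard can be read with nothing but the standard library. It relies on the general WebDataset convention that files sharing a basename form one sample. The file names and the tiny in-memory shard are invented for illustration and are not taken from the actual dataset.

```python
import io
import json
import tarfile
from collections import defaultdict


def make_demo_shard() -> bytes:
    """Build a tiny in-memory TAR holding one (video, metadata) pair.

    Names and payloads are hypothetical stand-ins, not real dataset files.
    """
    meta = {"factory_id": "factory_002", "worker_id": "worker_002",
            "video_index": 0, "duration_sec": 1200.0, "fps": 30.0}
    files = {
        "factory002_worker002_00000.mp4": b"\x00fake-video-bytes",
        "factory002_worker002_00000.json": json.dumps(meta).encode(),
    }
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w") as tar:
        for name, payload in files.items():
            info = tarfile.TarInfo(name)
            info.size = len(payload)
            tar.addfile(info, io.BytesIO(payload))
    return buf.getvalue()


def read_pairs(shard: bytes) -> dict:
    """Group TAR members into samples: same key, different extensions."""
    samples = defaultdict(dict)
    with tarfile.open(fileobj=io.BytesIO(shard), mode="r") as tar:
        for member in tar:
            if not member.isfile():
                continue
            # Key is everything before the first dot, extension is the rest.
            key, _, ext = member.name.partition(".")
            samples[key][ext] = tar.extractfile(member).read()
    return dict(samples)


samples = read_pairs(make_demo_shard())
for key, parts in samples.items():
    meta = json.loads(parts["json"])
    print(key, meta["factory_id"], meta["worker_id"], len(parts["mp4"]), "video bytes")
```

Because every sample carries its own `factory_id` and `worker_id`, selecting a single factory or worker is a filter over metadata rather than a separate download.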

The Humanoid Robotics Gold Rush Meets Real Data

The timing here is anything but coincidental. As one Reddit commenter noted, “The humanoid robotics companies believe that data is the current limitation. They are buying and amassing large amounts of data to try and get their robots to solve factory and everyday tasks.”

This isn’t theoretical. Companies like AgiBot are already demonstrating AI-powered robots learning manufacturing tasks through human training on actual production lines. But until now, they’ve been working with comparatively tiny datasets.

What makes Egocentric-10K so valuable is what robotics researchers call “hand visibility and active manipulation density.” Previous in-the-wild egocentric datasets often suffered from limited hand-object interaction footage. Factory work, by its nature, involves constant manual manipulation, which is precisely the kind of data needed to teach robots physical tasks.

The Ethical Powder Keg: Worker Surveillance Versus Robot Training

Here’s where things get ethically… interesting.

When Build AI collected this data, they were presumably focused on creating training data for robotics. But the same technology that teaches robots to perform tasks can also be used to monitor human performance in excruciating detail. As critics on Reddit pointed out: “Please don’t make these people’s lives harder. It’s bad enough when they don’t have something micromanaging them or scrutinizing everything they do.”

Another commenter echoed the concern: “Way before there’s some kind of robot that will do the job so the worker can supposedly vacation on the beach, this AI crap is going to be used to micromanage people.”

They’re not wrong. Computer vision systems trained on this data could theoretically identify “inefficient” movements, track task completion times, and optimize workflows in ways that benefit management but potentially degrade working conditions. The line between “training helpful robots” and “creating the ultimate surveillance tool” is uncomfortably thin.

Yet there’s a compelling counter-argument: These technologies could also eliminate dangerous factory work. As one comment noted, “One of the most significant uses of robots in factories is for dangerous operations that can pose high risks to workers. Some of these operations can lead to disabling injuries, causing many workers to leave the labor market entirely. In reality, numerous workers have lost limbs due to such hazards.”

Democratization or Desperation?

The open-source nature of this release raises another question: Why give away what’s clearly valuable industrial intelligence?

Two theories emerge from the discussion. Either this is a genuine attempt at democratizing robotics development, allowing smaller players to compete with well-funded corporate labs, or, as one Reddit commenter speculated, it’s “flailing because results haven’t been good enough yet for widespread adoption.”

The truth might be somewhere in between. Robotics has historically suffered from data scarcity, particularly real-world data. By creating an open benchmark, Build AI might be attempting to accelerate the entire field while simultaneously establishing themselves as leaders in industrial AI data collection.

What Comes Next: The Factory of the Future, Today

Loading the dataset reveals its immediate utility:

```python
from datasets import load_dataset, Features, Value

# Schema for one WebDataset sample: raw MP4 bytes plus its JSON metadata.
features = Features({
    'mp4': Value('binary'),
    'json': {
        'factory_id': Value('string'),
        'worker_id': Value('string'),
        'video_index': Value('int64'),
        'duration_sec': Value('float64'),
        'width': Value('int64'),
        'height': Value('int64'),
        'fps': Value('float64'),
        'size_bytes': Value('int64'),
        'codec': Value('string')
    },
    '__key__': Value('string'),
    '__url__': Value('string')
})

# Stream samples on demand instead of downloading all 16.4 TB up front.
dataset = load_dataset(
    "builddotai/Egocentric-10K",
    streaming=True,
    features=features
)
```
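Once samples are streaming, a natural first step is to see how much footage each worker contributes before committing to any video decoding. The helper below is a sketch under a few assumptions: each sample is a dict shaped like the schema above, `hours_per_worker` is a hypothetical name rather than part of any library, and the stand-in samples replace the real stream so the example runs offline.

```python
from collections import defaultdict
from itertools import islice
from typing import Dict, Iterable, Tuple


def hours_per_worker(samples: Iterable[dict],
                     limit: int = 1000) -> Dict[Tuple[str, str], float]:
    """Sum clip durations (in hours) per (factory_id, worker_id).

    Reads at most `limit` samples so a streamed dataset is not consumed in full.
    """
    totals = defaultdict(float)
    for sample in islice(samples, limit):
        meta = sample["json"]
        totals[(meta["factory_id"], meta["worker_id"])] += meta["duration_sec"] / 3600.0
    return dict(totals)


# Stand-in samples with made-up durations; the video payloads are left empty.
demo_samples = [
    {"mp4": b"", "json": {"factory_id": "factory_002", "worker_id": "worker_002", "duration_sec": 1200.0}},
    {"mp4": b"", "json": {"factory_id": "factory_002", "worker_id": "worker_002", "duration_sec": 600.0}},
    {"mp4": b"", "json": {"factory_id": "factory_003", "worker_id": "worker_001", "duration_sec": 180.0}},
]

totals = hours_per_worker(demo_samples)
for (factory, worker), hours in sorted(totals.items()):
    print(f"{factory}/{worker}: {hours:.2f} h")

# With the real stream this would look roughly like the line below.
# The "train" split name is an assumption; check the dataset card.
#   totals = hours_per_worker(dataset["train"], limit=500)
```

Working from the metadata first keeps the expensive part, decoding H.265 video, for the clips you actually decide to use.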

This isn’t just academic research fodder. We’re looking at training data that could power the next generation of industrial automation: systems that don’t just perform predefined motions but understand context, adapt to variations, and learn from human demonstration.

The implications extend beyond factory walls. This dataset provides a blueprint for collecting similar data in other domains: healthcare, construction, agriculture. Once you have the methodology for capturing and organizing real-world task performance at scale, the applications multiply rapidly.

The Uncomfortable Truth About Open Source Industrial Data

There’s an important philosophical debate buried in this release. As one commenter pointed out: “If robots are going to take jobs, do you want the only thing able to do that to be the 3 megacorps that farmed the data?”

The open-source approach levels the playing field but also accelerates automation across the board. It’s the classic technology double-edged sword: democratization means more people can build competing systems, but it also means those systems will likely displace human workers faster.

Meanwhile, the commercial players aren’t standing still. Companies are already exploring “heavier and more expensive versions involving tele-operated robot datasets, full body tracking suits + POV, and more,” according to industry observers. Egocentric-10K represents the “light” version: affordable and scalable, but still incredibly valuable.

The Real Revolution Isn’t the Data, It’s the Methodology

What makes Egocentric-10K genuinely revolutionary isn’t just the terabytes of footage. It’s the proof that we can systematically capture real-world industrial work at scale. The approach (monocular head-mounted cameras worn by more than 2,000 workers in 85 factories) creates a template for collecting similar datasets across virtually any physical work domain.

The dataset’s Apache 2.0 license means both academic researchers and commercial entities can build on this foundation. We’re likely to see specialized models trained on this data within months, followed by real-world deployments not long after.

The factory workers who contributed their perspectives probably didn’t realize they were simultaneously training their replacements and creating the most comprehensive study of industrial work ever compiled. Their 10,000 hours of labor have become someone else’s training data, a perfect metaphor for the AI revolution sweeping through physical work.

One thing is certain: The era of robotics research being constrained by synthetic data is over. The real world, with all its complexity, variation, and nuance, is now the training ground. And for better or worse, your job might be next in line for digitization.