A data engineer with Python, Spark, and Airflow experience takes an ETL Developer II role at a Fortune 500 company. Two weeks in, they’re clicking through a visual interface and writing basic SQL. No PySpark. No infrastructure as code. Just drag, drop, and despair. The post hits r/dataengineering and sparks a 91-point comment that cuts to the bone: "Data engineering is a sexy label on an ETL developer."

This isn’t just another Reddit argument. It’s a referendum on what we value in data teams, what counts as "real" engineering, and whether the proliferation of no-code tools is democratizing data work or diluting it into irrelevance.

The Pythonification of Data Engineering and the Counter-Revolution

The story starts in the 2010s. Everyone wanted to be a data scientist. Udemy courses taught Scikit-learn, which meant learning Python. When those newly minted scientists hit real jobs, they discovered data doesn’t arrive pre-cleaned. So they reached for the only tool they knew, Python, and built pipelines with Pandas, Airflow, and Spark. The industry needed a name for this work. "ETL Developer" sounded too legacy, too Informatica, too corporate. "Data Engineer" had better branding.

But here’s the uncomfortable truth: most data work doesn’t require distributed computing. One commenter describes replacing 30-minute Python scripts with a three-second SQL statement and a view. Another was rejected from a data engineering role for admitting they prefer SQL over Python, even after building a complete end-to-end pipeline with Airbyte, GCS, dbt, BigQuery, and Power BI. The hiring manager wanted Python ideology, not problem-solving ability.
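To make the script-versus-view contrast concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The `orders` table, its columns, and the `customer_totals` view are hypothetical stand-ins, not the commenter's actual workload, and a five-row table says nothing about the 30-minute versus three-second timings. It only shows the shape of the change: the loop pulls every row into Python, while the view leaves the aggregation to the database.

```python
import sqlite3

# Hypothetical orders table; names and values are illustrative only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (customer_id INTEGER, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [(1, 10.0), (1, 15.0), (2, 7.5), (3, 20.0), (3, 5.0)],
)


def totals_in_python(conn):
    """Script-style approach: fetch every row and aggregate in a Python loop."""
    totals = {}
    for customer_id, amount in conn.execute(
        "SELECT customer_id, amount FROM orders"
    ):
        totals[customer_id] = totals.get(customer_id, 0.0) + amount
    return totals


# View-style approach: the database engine performs the aggregation.
conn.execute(
    """
    CREATE VIEW customer_totals AS
    SELECT customer_id, SUM(amount) AS total
    FROM orders
    GROUP BY customer_id
    """
)


def totals_from_view(conn):
    """Read the pre-defined view; each row is a (customer_id, total) pair."""
    return dict(conn.execute("SELECT customer_id, total FROM customer_totals"))


python_totals = totals_in_python(conn)
view_totals = totals_from_view(conn)
```

Both functions return the same totals. The difference at scale is where the work happens: the loop moves every row across the database boundary, while the view returns one row per customer.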

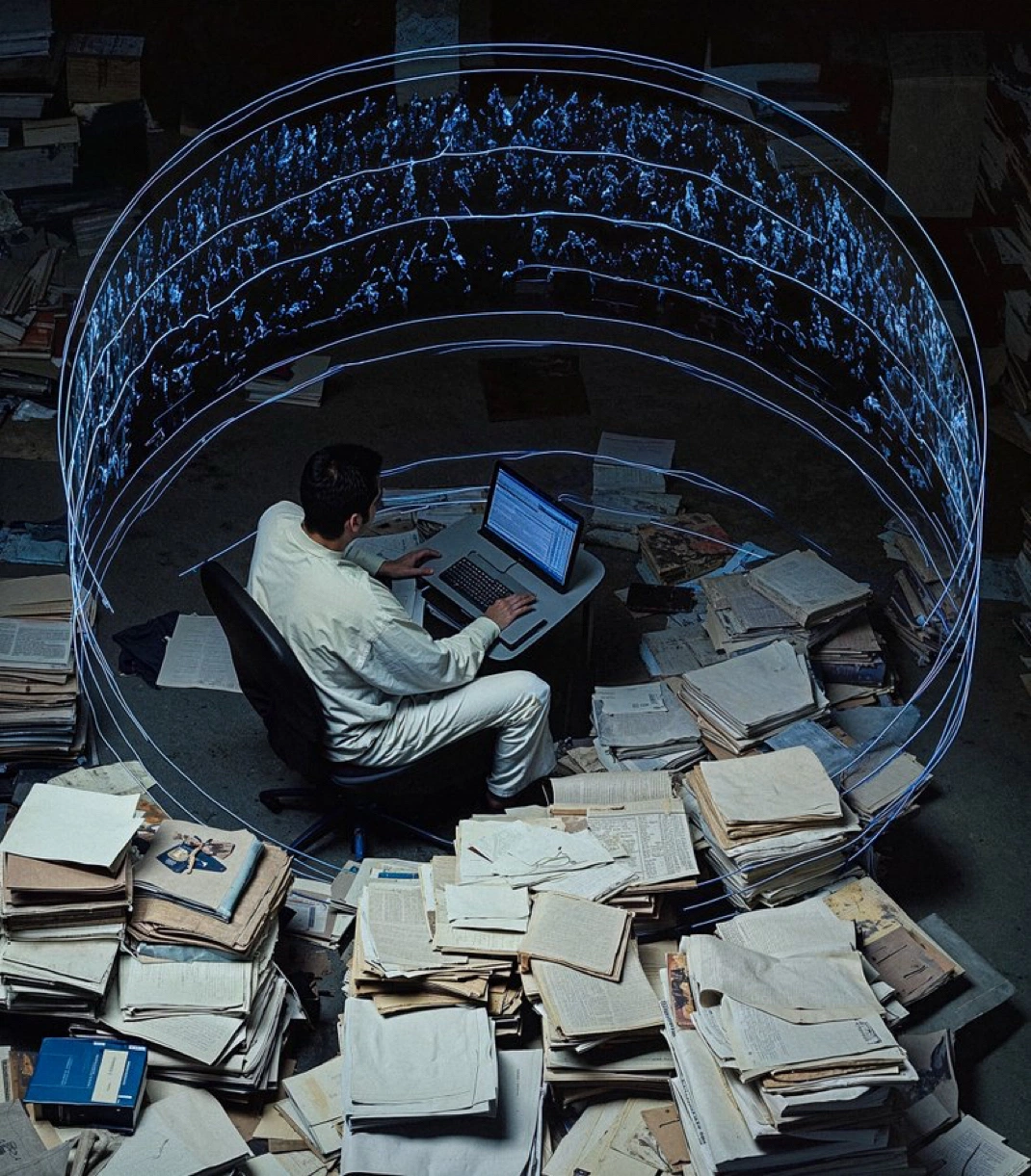

This creates a credentialism trap. Engineers who invested years learning Spark feel threatened when GUI tools accomplish the same outcome. The anxiety is understandable: when your entire skill set can be replaced by a $24/month no-code platform like Coupler.io, it’s natural to question your value.

No-Code Tools: The Great Equalizer or Skill Killer?

The ETL tool market reflects this tension. Modern platforms fall into four categories:

| Setup Complexity | Tools | Price Range | Target User |
|---|---|---|---|
| No-code | Coupler.io, Hevo Data, Skyvia | $24-$399/month | Business analysts, ops teams |
| Low-code | Integrate.io, Fivetran, Matillion | $1,800-$1,999/month | Data analysts, junior engineers |
| Technical | Airflow, Airbyte, AWS Glue | $0.44/DPU-hour + ops cost | Data engineers, DevOps |
| Enterprise | Informatica, Talend, Oracle ODI | Custom ($100K+/year) | Large IT departments |

The no-code segment is growing fastest. Skyvia offers 200+ connectors with visual pipelines starting at $79/month. One tier up, Integrate.io provides 220+ transformations in a drag-and-drop interface for a flat fee of $1,999/month. These tools explicitly target "people who need to move data but don’t have ‘software engineer’ on their business card."

The appeal is undeniable. A marketing team can connect Facebook Ads, Google Ads, and LinkedIn Ads to BigQuery without writing a single line of code. The pipeline runs on schedule. Errors trigger alerts. Costs are predictable. For the business, this is pure value.

For the engineer who spent three weeks building a custom Python solution? It feels like erasure.

The Business Reality Check: What Actually Matters

Here’s where ideology meets economics. Companies don’t pay engineers to write code; they pay them to deliver data. If a GUI tool moves customer data from Salesforce to Snowflake with 99.9% uptime and automatic schema drift handling, that’s the correct technical decision. The cost of the hand-built alternative isn’t just the engineer’s salary. It’s also the opportunity cost of delayed analytics, the risk of custom code maintenance, and the bus factor of a single person understanding the pipeline.

One senior practitioner puts it bluntly: managing 1,000 Python scripts is harder than managing 1,000 GUI workflows. Visual tools make dependencies explicit. Debugging doesn’t require tracing through someone’s clever abstraction. Onboarding a new hire takes hours, not weeks.

The real skill isn’t the tool. It’s understanding trade-offs: cloud compute costs, performance, failover, supportability. An engineer who knows when to use Python, when to use SQL, and when to click "Add Connector" is more valuable than one who reaches for Spark out of habit.
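One way to reason about that trade-off is to put license fees, compute, and maintenance time into the same monthly figure. The sketch below is a back-of-the-envelope model, not a pricing tool. The `PipelineOption` class, the hour estimates, and the hourly rates are all hypothetical; only the $1,999/month flat fee comes from the table above.

```python
from dataclasses import dataclass


@dataclass
class PipelineOption:
    """A candidate way to run a pipeline. All figures are assumptions."""
    name: str
    monthly_license: float    # vendor fee, USD per month
    monthly_compute: float    # cloud compute, USD per month
    maintenance_hours: float  # engineer hours spent per month

    def monthly_cost(self, hourly_rate: float) -> float:
        """Total monthly cost, pricing maintenance at the given hourly rate."""
        return (
            self.monthly_license
            + self.monthly_compute
            + self.maintenance_hours * hourly_rate
        )


def cheapest(options, hourly_rate):
    """Return the option with the lowest total monthly cost."""
    return min(options, key=lambda option: option.monthly_cost(hourly_rate))


# Hypothetical inputs: a flat-fee managed tool vs. a hand-built Python pipeline.
managed = PipelineOption("managed connector", 1999.0, 0.0, 4.0)
custom = PipelineOption("custom Python", 0.0, 300.0, 30.0)
options = [managed, custom]

winner_at_75 = cheapest(options, hourly_rate=75.0)
winner_at_50 = cheapest(options, hourly_rate=50.0)
```

With these made-up numbers the managed tool wins at $75/hour and the custom pipeline wins at $50/hour. The answer depends on the inputs, not on which option feels more like engineering. A fuller model would also price in failover and opportunity cost, which this sketch leaves out.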

Career Trajectory: Stagnation or Strategic Diversification?

The original poster’s real concern isn’t tool quality; it’s career velocity. With a resume showing multiple short stints (internships, a layoff, a 1.5-year nonprofit gig), they worry that leaving after two weeks will brand them a job-hopper. The contract is for one year. Should they stay?

The data suggests they’re asking the wrong question. The average tenure for data engineers is already short, because market pressure and skill shortages create mobility. More importantly, skills don’t stagnate from tool choice; they stagnate from problem choice. If the role involves designing data models, understanding business logic, optimizing costs, and ensuring reliability, the tool is irrelevant. If it’s mindlessly clicking through the same workflow daily, even Python won’t save them from boredom.

The strategic move is to document the business impact: "Migrated 50+ pipelines from legacy SSIS to cloud-native ELT, reducing runtime by 70% and costs by $12K/month." That’s a story. "Used Python instead of GUI" is just tooling vanity.

The Specialization Spectrum: A New Mental Model

The false dichotomy, ETL Developer vs Data Engineer, obscures a more useful framework. Think of data roles on two axes: technical breadth and business complexity.

- High breadth, high complexity: Staff Data Engineer at Netflix. Builds custom frameworks, manages petabyte-scale streaming, influences company strategy.

- High breadth, low complexity: Platform Engineer at a startup. Maintains Airflow, dbt, and Snowflake, but pipelines are straightforward.

- Low breadth, high complexity: ETL Developer at a healthcare company. Uses Informatica, but must understand HIPAA, clinical data models, and regulatory reporting.

- Low breadth, low complexity: Analyst at a marketing agency. Uses Coupler.io to sync ad data. No code, simple requirements.

All four roles are valid. All four pay differently. The mistake is assuming that the high-breadth, high-complexity quadrant is the only home of "real" engineers.

What the Tool Explosion Teaches Us

The proliferation of platforms with 1,500+ connectors, like Portable at $1,800/month, and of open-source alternatives like Airbyte signals market maturation. Data integration is becoming commoditized. The value has shifted upstream: data quality, governance, domain modeling, and business alignment.

This is specialization, not dilution. Just as web development split into frontend, backend, DevOps, and SRE, data engineering is fragmenting. The "full stack" data engineer who does everything is giving way to:

- Analytics Engineers who own the dbt layer

- ML Engineers who operationalize models

- Data Platform Engineers who maintain infrastructure

- ETL Specialists who master specific tools for specific domains

Each requires deep expertise. Each is defensible. The key is choosing a path with moats, whether that’s deep Python knowledge OR deep healthcare data modeling OR deep Salesforce integration expertise.

The Verdict: Stop Confusing Tools with Craft

The Reddit poster should stay, not for the full year, but for six months. In that time, they should:

1. Learn the business: Why does the company choose this tool? What constraints drive the decision?

2. Optimize the obvious: Find the 30-minute Python job that can be three-second SQL. Document the savings.

3. Build a sidecar: Use Python for the one thing the GUI tool can’t do. Show the value of hybrid approaches.

4. Track the metrics: Cost per pipeline, time-to-fix, onboarding hours. These matter more than LOC.
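The metrics in step 4 can be tracked with very little machinery. Below is a minimal sketch that assumes you can pull the monthly spend, pipeline count, incident fix times, and onboarding hours from wherever they are recorded. The function names and every sample number are hypothetical.

```python
from statistics import mean


def pipeline_metrics(monthly_cost, pipeline_count, fix_hours, onboarding_hours):
    """Summarize the three metrics from step 4 for one reporting period."""
    if pipeline_count <= 0:
        raise ValueError("pipeline_count must be positive")
    return {
        "cost_per_pipeline": monthly_cost / pipeline_count,
        "mean_time_to_fix_hours": mean(fix_hours),
        "mean_onboarding_hours": mean(onboarding_hours),
    }


def percent_change(before, after):
    """Relative change from before to after, in percent (negative = reduction)."""
    return (after - before) / before * 100


# Hypothetical numbers for two reporting periods.
before = pipeline_metrics(6000.0, 50, fix_hours=[4, 6, 2], onboarding_hours=[40])
after = pipeline_metrics(4500.0, 50, fix_hours=[1, 2, 3], onboarding_hours=[8])

# One percent-change figure per metric, ready to quote in a review or a resume.
changes = {key: percent_change(before[key], after[key]) for key in before}
```

A dictionary of percent changes like `changes` is the raw material for an impact statement. It holds up in an interview far better than a line count does.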

Then leave with a story about strategic tool selection, not "GUI tools suck."

The controversy isn’t about ETL Developer vs Data Engineer. It’s about identity attachment to tools rather than outcomes. The best data professionals, the ones who command $300K+ salaries, don’t care whether they’re writing Scala, SQL, or clicking in a UI. They care about solving the right problem at the right cost with the right reliability.

The rest is just branding. And in 2025, the market is calling that bluff.

Your Move: If you’re in a "boring" ETL role, what’s one business metric you could improve in the next 30 days, regardless of tool? Share your answer; it’s more valuable than any framework debate.