If you’ve ever searched for hotels in Dubai and wondered how Booking.com instantly sorts 10,000 properties by price while claiming “real-time availability”, you’re asking the wrong question. The right question is: how many of those prices are stale, estimated, or outright fabricated? The answer is most of them, and that’s not a bug, it’s the architecture.

The Impossible Problem

Consider the challenge: you have 3 million hotels stored in PostgreSQL with static metadata (name, stars, coordinates), but pricing lives in external supplier APIs that are slow, rate-limited, and expensive to call. A user searches for “Dubai, check-in tomorrow, 2 adults” and expects instant results sorted by price.

The math is brutal. If you call all suppliers for all 10,000 Dubai hotels, you’re looking at minutes of latency and hundreds of dollars in API costs per query. The look-to-book conversion rate in travel is around 3%, meaning roughly 97% of those expensive calls are wasted on users who never click “book.” This is the core tension: accuracy versus economics, and economics always wins.
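To make the arithmetic concrete, here is a back-of-the-envelope sketch. Every input in it (three suppliers, one cent per call, a rate limit of 100 requests per second per supplier) is an assumption chosen for illustration, not a figure from any real supplier contract.

```typescript
// Back-of-the-envelope fan-out cost. All inputs are illustrative assumptions.
interface FanOutAssumptions {
  hotels: number;                    // properties matching the search
  suppliers: number;                 // suppliers queried per property
  costPerCallUsd: number;            // assumed price of one availability call
  callsPerSecondPerSupplier: number; // assumed supplier rate limit
}

interface FanOutEstimate {
  totalCalls: number;
  costUsd: number;
  minLatencySeconds: number;
}

function estimateFanOut(a: FanOutAssumptions): FanOutEstimate {
  const totalCalls = a.hotels * a.suppliers;
  // Suppliers are queried in parallel, so the floor on latency is set by
  // one supplier's rate limit: hotels / callsPerSecond.
  const minLatencySeconds = a.hotels / a.callsPerSecondPerSupplier;
  return {
    totalCalls,
    costUsd: totalCalls * a.costPerCallUsd,
    minLatencySeconds,
  };
}

const dubaiEstimate = estimateFanOut({
  hotels: 10000,
  suppliers: 3,
  costPerCallUsd: 0.01,
  callsPerSecondPerSupplier: 100,
});
```

Under these assumed inputs a single search triggers 30,000 calls, costs about $300, and cannot finish in under 100 seconds, which is the order of magnitude the paragraph above describes.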

How Big Platforms Actually Do It (Hint: They Cheat)

The Reddit discussion on this topic reveals an uncomfortable industry secret: customers are NOT always seeing the best, most accurate globally true answer. Large providers like Expedia and Booking.com maintain their own inventory systems where channel managers push prices daily, or even in real-time via change feeds. But this is the exception, not the rule.

Here’s the actual playbook:

1. The Daily Dump and Search Engine Pattern

Major platforms receive daily inventory dumps from suppliers. They don’t call APIs on-demand, they build their own catalog. As one commenter notes, companies get a daily dump of inventory+price to build their catalog, typically only storing common occupancy scenarios (1/2/4 pax). This data feeds a search engine like Elasticsearch, not a relational database.

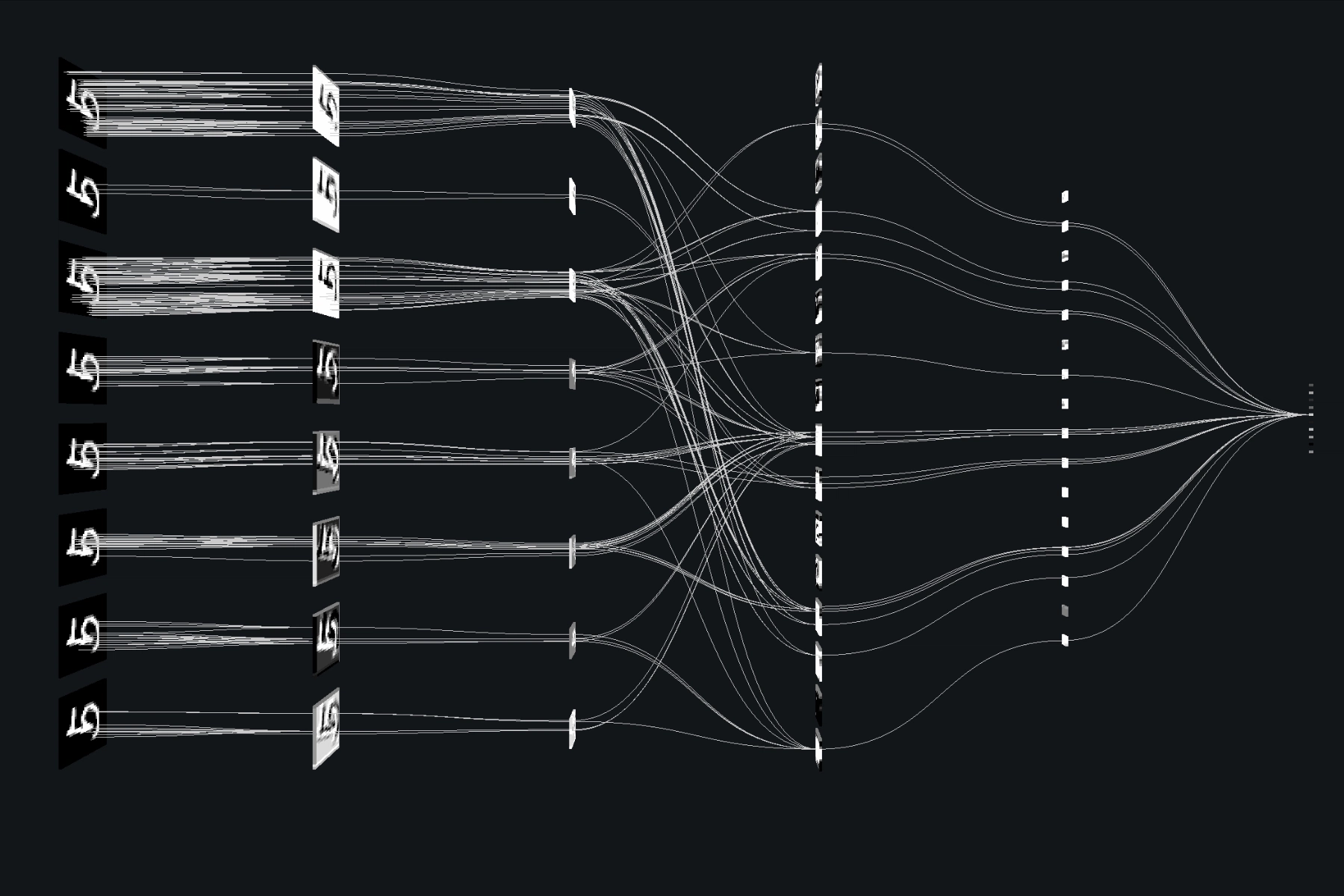

The architecture looks like this:

- PostgreSQL as source of truth for static metadata

- Elasticsearch for search, filtering, and sorting

- Background jobs that update Elasticsearch from supplier feeds

- On-demand pricing refreshes only when users drill into details

2. The Cache-First, Apologize-Later Strategy

For smaller aggregators or dynamic inventory, the pattern is aggressive caching with short TTLs. One approach: cache prices in Redis with 30-60 minute TTLs, use cached prices for search results, and only call suppliers on the hotel detail page.

But caching alone isn’t enough. Smart platforms pre-warm caches for popular routes and date ranges. If you search for Paris hotels next month, there’s a background job that’s already fetched prices for the top 500 properties. When you search, you’re hitting warm cache, not cold APIs.
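A minimal sketch of how a pre-warming job might choose its targets, assuming you keep a log of recent searches. The `SearchLogEntry` shape and the `pickWarmTargets` name are hypothetical; the actual price fetching is left out.

```typescript
// One row per user search, as recorded by a (hypothetical) search log.
interface SearchLogEntry {
  destination: string;
  checkIn: string; // ISO date, e.g. "2025-07-01"
  nights: number;
}

interface WarmTarget extends SearchLogEntry {
  searches: number; // how often this exact combination was searched
}

// Pick the most frequently searched (destination, check-in, nights)
// combinations so a background job can fetch their prices ahead of demand.
function pickWarmTargets(log: SearchLogEntry[], limit: number): WarmTarget[] {
  const counts = new Map<string, WarmTarget>();
  for (const entry of log) {
    const key = `${entry.destination}|${entry.checkIn}|${entry.nights}`;
    const existing = counts.get(key);
    if (existing) {
      existing.searches += 1;
    } else {
      counts.set(key, { ...entry, searches: 1 });
    }
  }
  // Most-searched first; only the top `limit` combinations get warmed.
  return Array.from(counts.values())
    .sort((a, b) => b.searches - a.searches)
    .slice(0, limit);
}
```

The job would then fetch prices for the top properties of each returned target and write them to the cache before users ask.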

3. The Subset Pricing Trick

Here’s where it gets spicy: you can’t sort by price if you don’t price everything. The solution? Don’t price everything. Platforms price a limited subset, say the top 500-1,000 hotels by relevance, and sort only within that set. If you’re looking for the absolute cheapest hotel in Dubai, you might not find it. But you’ll find a cheap enough hotel that you won’t notice the difference.

This is product design making hard distributed systems decisions. The UI shows “sorted by price” but the fine print (if you look) says “prices shown are estimates” or “based on recent data.”
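The trick can be sketched in a few lines. The `Candidate` shape and `sortByPriceWithinSubset` are illustrative names, and the relevance score is assumed to come from the search engine.

```typescript
interface Candidate {
  hotelId: string;
  relevance: number;               // higher means more relevant
  cachedPriceCents: number | null; // null when no price is cached
}

// Sort by price, but only within the top `subsetSize` hotels by relevance.
// Hotels outside the subset, or without a cached price, never appear.
function sortByPriceWithinSubset(all: Candidate[], subsetSize: number): Candidate[] {
  const subset = all
    .slice() // copy so the caller's array is not reordered
    .sort((a, b) => b.relevance - a.relevance)
    .slice(0, subsetSize);
  const priced = subset.filter(h => h.cachedPriceCents !== null);
  return priced.sort(
    (a, b) => (a.cachedPriceCents as number) - (b.cachedPriceCents as number)
  );
}
```

Note what this implies: a very cheap hotel with low relevance is dropped before prices are even compared, which is exactly why the cheapest property may never show up.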

4. The Event-Driven Escape Hatch

Polling and caching are wasteful. The sophisticated suppliers provide change feeds that platforms subscribe to. Instead of repeatedly asking “what’s the price?”, they listen to events that notify of price changes. This gives near real-time data without the polling overhead. But this requires supplier cooperation, and not all suppliers are that sophisticated.
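A minimal sketch of the consuming side of such a feed. The event shape is an assumption (real supplier feeds differ), and an in-memory `Map` stands in for Redis. The one design point worth showing is ordering: events can arrive late or twice, so the handler only accepts an event that is newer than what is already cached.

```typescript
// Assumed shape of a supplier price-change event.
interface PriceChangeEvent {
  hotelId: string;
  priceCents: number;
  currency: string;
  emittedAt: number; // Unix seconds, set by the supplier
}

interface CachedPrice {
  priceCents: number;
  currency: string;
  updatedAt: number;
}

// In-memory stand-in for Redis, keyed by hotelId.
const priceCache = new Map<string, CachedPrice>();

// Apply an event only if it is newer than the cached entry, so that late
// or duplicated deliveries cannot overwrite a fresher price.
function applyPriceChange(event: PriceChangeEvent): boolean {
  const current = priceCache.get(event.hotelId);
  if (current && current.updatedAt >= event.emittedAt) {
    return false; // stale or duplicate event, ignored
  }
  priceCache.set(event.hotelId, {
    priceCents: event.priceCents,
    currency: event.currency,
    updatedAt: event.emittedAt,
  });
  return true;
}
```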

The Normalization Nightmare

The research from MDPI on decentralized architectures highlights a critical point: normalization pipelines eliminate format-level and rounding-level nondeterminism. This isn’t academic. It’s the difference between showing the same room at $99.99 from one supplier and $100.00 from another, and showing one consistent price that users can trust.

When you’re aggregating from TBO, Hotelbeds, and a dozen other suppliers, you’re dealing with:

- Different currency rounding conventions

- Inconsistent amenity naming (“wifi” vs “Wi-Fi” vs “Free Wireless”)

- Partial data (some suppliers include tax, others don’t)

- Schema mismatches (star ratings out of 5 vs out of 7)

The solution is a normalization pipeline that enforces consistency at ingestion time. This isn’t just data cleaning, it’s a distributed systems problem because you need to maintain semantic consistency while allowing for legitimate variation. For example, two suppliers might have different prices for the same room because one includes breakfast. Your normalization pipeline needs to detect and flag these semantic differences, not collapse them into false equivalence.
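Here is one way the ingestion step could look, as a sketch under stated assumptions: the `RawOffer` fields are hypothetical, the tax rate is assumed to be known per offer, and star ratings are rescaled linearly to a 5-point scale. The breakfast case is handled by preserving the board type and refusing to compare offers that differ on it.

```typescript
type Board = "room_only" | "breakfast";

interface RawOffer {
  supplier: string;
  priceCents: number;
  taxIncluded: boolean;
  taxRate: number;     // e.g. 0.10 for 10%; assumed known per offer
  stars: number;
  starScale: 5 | 7;
  amenities: string[];
  board: Board;
}

interface NormalizedOffer {
  supplier: string;
  totalCents: number;  // always tax-inclusive, rounded to whole cents
  stars: number;       // always on a 5-point scale, nearest half star
  amenities: string[]; // canonical names, deduplicated
  board: Board;        // preserved, never collapsed
}

const AMENITY_ALIASES: Record<string, string> = {
  "wifi": "wifi",
  "wi-fi": "wifi",
  "free wireless": "wifi",
};

function normalizeOffer(raw: RawOffer): NormalizedOffer {
  const totalCents = raw.taxIncluded
    ? raw.priceCents
    : Math.round(raw.priceCents * (1 + raw.taxRate));
  const canonical = raw.amenities.map(a => {
    const key = a.trim().toLowerCase();
    return AMENITY_ALIASES[key] ?? key;
  });
  return {
    supplier: raw.supplier,
    totalCents,
    stars: Math.round((raw.stars / raw.starScale) * 5 * 2) / 2,
    amenities: Array.from(new Set(canonical)),
    board: raw.board,
  };
}

// Two offers are comparable on price only if they include the same things.
function isComparable(a: NormalizedOffer, b: NormalizedOffer): boolean {
  return a.board === b.board;
}
```

A search layer would call `isComparable` before deduplicating or ranking two offers for the same room, and show both when they differ.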

The Caching Strategy That Actually Works

Based on the Reddit discussion and industry patterns, here’s a concrete caching architecture:

    // Redis cache structure
    {
      "price:hotel:{hotelId}:dates:{checkIn}:{checkOut}:occupancy:{adults}:{children}": {
        "price": 19999, // in cents to avoid floating point
        "currency": "USD",
        "fetchedAt": 1640995200,
        "ttl": 1800, // 30 minutes
        "supplier": "hotelbeds",
        "confidence": "high" // "high" = fresh, "medium" = 1-6 hours old, "low" = >6 hours
      }
    }

The key insight: cache confidence metadata. When you show a price, you also show how fresh it is. This manages user expectations and builds trust. If a price is stale, you show a “check for updated price” button.
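A small sketch of the two helpers this structure implies: one that builds the key, and one that derives confidence from the entry’s age. Deriving confidence at read time (rather than trusting the stored string) avoids serving an entry that was written as “high” hours ago. The function names are illustrative, and “fresh” is assumed to mean under one hour old.

```typescript
type Confidence = "high" | "medium" | "low";

interface PriceQuery {
  hotelId: string;
  checkIn: string;  // ISO date
  checkOut: string; // ISO date
  adults: number;
  children: number;
}

// Build the cache key in the same format as the structure above.
function buildPriceKey(q: PriceQuery): string {
  return `price:hotel:${q.hotelId}:dates:${q.checkIn}:${q.checkOut}:occupancy:${q.adults}:${q.children}`;
}

// Derive confidence from age: under 1 hour is "high" (assumed meaning of
// "fresh"), 1-6 hours is "medium", anything older is "low".
function confidenceFor(fetchedAt: number, now: number): Confidence {
  const ageHours = (now - fetchedAt) / 3600;
  if (ageHours < 1) return "high";
  if (ageHours <= 6) return "medium";
  return "low";
}
```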

TTL Strategy

- High-demand routes: 15-30 minute TTL

- Standard routes: 1-2 hour TTL

- Long-tail routes: 6-12 hour TTL

- Last-minute bookings (check-in < 24h): 5-10 minute TTL due to higher volatility
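The tiers above can be encoded as a small lookup. This sketch assumes the route tier is already known for each search and uses the lower bound of each range; both choices are illustrative.

```typescript
type RouteTier = "high_demand" | "standard" | "long_tail";

// Lower bound of each range from the list above, in seconds.
const TTL_SECONDS: Record<RouteTier, number> = {
  high_demand: 15 * 60,
  standard: 60 * 60,
  long_tail: 6 * 60 * 60,
};

const LAST_MINUTE_TTL_SECONDS = 5 * 60;

// Last-minute searches (check-in within 24 hours) override the route tier,
// because their prices are the most volatile.
function ttlSecondsFor(tier: RouteTier, hoursUntilCheckIn: number): number {
  if (hoursUntilCheckIn < 24) return LAST_MINUTE_TTL_SECONDS;
  return TTL_SECONDS[tier];
}
```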

Handling Volatility

For last-minute inventory, use predictive caching. Train a simple ML model on historical price patterns to predict when prices are likely to change. When the model predicts high volatility, shorten TTL automatically. This is more efficient than blind short TTLs everywhere.
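Before reaching for a trained model, a plain statistical heuristic shows the idea. The sketch below is not an ML model: it swaps in the coefficient of variation of recently observed prices as the volatility signal, and the 0.05 reference value and 300-second floor are assumed tuning constants.

```typescript
// Coefficient of variation (standard deviation / mean) of recent prices.
function priceVolatility(pricesCents: number[]): number {
  if (pricesCents.length < 2) return 0;
  const mean = pricesCents.reduce((sum, p) => sum + p, 0) / pricesCents.length;
  if (mean === 0) return 0;
  const variance =
    pricesCents.reduce((sum, p) => sum + (p - mean) * (p - mean), 0) /
    pricesCents.length;
  return Math.sqrt(variance) / mean;
}

// Shrink the base TTL as volatility grows, never below `minTtlSeconds`.
// REFERENCE_VOLATILITY is an assumed constant: at that volatility the TTL halves.
const REFERENCE_VOLATILITY = 0.05;

function adaptiveTtlSeconds(
  baseTtlSeconds: number,
  recentPricesCents: number[],
  minTtlSeconds: number = 300
): number {
  const volatility = priceVolatility(recentPricesCents);
  const factor = 1 / (1 + volatility / REFERENCE_VOLATILITY);
  return Math.max(minTtlSeconds, Math.round(baseTtlSeconds * factor));
}
```

A stable price history leaves the TTL untouched, while a history that swings widely pulls it toward the floor. A learned model could later replace `priceVolatility` without changing the caller.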

The Trust Problem: Stale Prices and User Expectations

The biggest pitfall isn’t technical, it’s trust. Users hate seeing one price in search results and a different price on the detail page. Here’s how platforms handle it:

- Show price ranges: “$99-$149” in search results, then narrow to exact price on details

- Confidence indicators: “Price confirmed 2 minutes ago” vs “Price from 4 hours ago”

- Best price guarantees: If the price changes between search and book, honor the lower price

- Look-to-book transparency: Make users click “check price” to see real-time rates, setting expectations upfront
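The first two items are mostly string formatting. A sketch, assuming prices in USD cents and timestamps in Unix seconds; the function names are illustrative.

```typescript
// "Price confirmed 2 minutes ago" for fresh entries,
// "Price from 4 hours ago" once the entry is an hour old or more.
function freshnessLabel(fetchedAt: number, now: number): string {
  const minutes = Math.floor((now - fetchedAt) / 60);
  if (minutes < 60) {
    return `Price confirmed ${minutes} minute${minutes === 1 ? "" : "s"} ago`;
  }
  const hours = Math.floor(minutes / 60);
  return `Price from ${hours} hour${hours === 1 ? "" : "s"} ago`;
}

// Collapse several cached quotes into a range for the search results page.
// The low end rounds down and the high end rounds up, so the real price
// stays inside the displayed range.
function priceRangeLabel(pricesCents: number[]): string {
  if (pricesCents.length === 0) return "Price unavailable";
  const min = Math.floor(Math.min(...pricesCents) / 100);
  const max = Math.ceil(Math.max(...pricesCents) / 100);
  return min === max ? `$${min}` : `$${min}-$${max}`;
}
```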

One commenter notes: “The secret here is that customers are NOT always seeing the best most accurate globally true answer to their query. This is just a secret reality. Product design has to make hard decisions like this and account for it.”

The Composite Reality Score

The research on Composite Reliability Score (CRS) for LLMs is surprisingly relevant here. Just as CRS combines calibration, robustness, and uncertainty into a unified metric, you need a Composite Price Accuracy Score for your inventory:

- Calibration: How close are cached prices to actual prices?

- Robustness: How do prices hold up under volatility?

- Uncertainty: What’s the confidence interval around a price?

This framework forces you to think holistically. A platform with 95% price accuracy but poor robustness (prices change frequently) can end up less trustworthy than one with 90% accuracy but high robustness (prices are stable and predictable).
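One possible way to turn this into a number, offered as a sketch rather than a definition from the CRS research: scale each component to the range 0 to 1 (1 is best) and take a weighted average. The calibration formula (one minus mean relative error) and the equal default weights are assumptions.

```typescript
interface PriceSample {
  cachedCents: number; // price shown in search results
  actualCents: number; // price confirmed by the supplier
}

// Calibration as 1 minus the mean relative error, floored at 0.
function calibrationScore(samples: PriceSample[]): number {
  if (samples.length === 0) return 0;
  const meanError =
    samples.reduce(
      (sum, s) => sum + Math.abs(s.cachedCents - s.actualCents) / s.actualCents,
      0
    ) / samples.length;
  return Math.max(0, 1 - meanError);
}

// Each component is expected in [0, 1], where 1 is best.
interface ScoreComponents {
  calibration: number;
  robustness: number;
  uncertainty: number; // 1 means tight confidence intervals
}

function compositePriceAccuracy(
  c: ScoreComponents,
  w: ScoreComponents = { calibration: 1, robustness: 1, uncertainty: 1 }
): number {
  const totalWeight = w.calibration + w.robustness + w.uncertainty;
  return (
    (c.calibration * w.calibration +
      c.robustness * w.robustness +
      c.uncertainty * w.uncertainty) /
    totalWeight
  );
}
```

With equal weights, a platform scoring 0.95 on calibration but 0.4 on robustness lands below one scoring 0.90 on calibration and 0.9 on robustness, which matches the intuition above.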

Implementation: The Three-Layer Architecture

Layer 1: Data Acquisition

- Supplier adapters: Each supplier gets its own adapter that normalizes their API format to your internal schema

- Event listeners: For suppliers with change feeds, listen to price change events

- Batch fetchers: For suppliers without events, use scheduled jobs to fetch prices in bulk (cheaper than individual calls)
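The adapter idea can be sketched as one small interface with an implementation per supplier. Both payload shapes below are hypothetical and are not the real schemas of Hotelbeds, TBO, or any other supplier.

```typescript
// Internal schema every adapter must produce.
interface InternalPrice {
  hotelId: string;
  priceCents: number;
  currency: string;
  supplier: string;
}

interface SupplierAdapter<Raw> {
  readonly name: string;
  toInternal(raw: Raw): InternalPrice;
}

// Hypothetical supplier A: decimal string amounts, lowercase currency codes.
interface SupplierARaw {
  hotel_code: string;
  amount: string; // e.g. "199.99"
  curr: string;   // e.g. "usd"
}

// Hypothetical supplier B: numeric ids, amounts already in minor units.
interface SupplierBRaw {
  propertyId: number;
  rate: { minorUnits: number; currencyCode: string };
}

const supplierA: SupplierAdapter<SupplierARaw> = {
  name: "supplier-a",
  toInternal(raw) {
    return {
      hotelId: raw.hotel_code,
      priceCents: Math.round(parseFloat(raw.amount) * 100),
      currency: raw.curr.toUpperCase(),
      supplier: "supplier-a",
    };
  },
};

const supplierB: SupplierAdapter<SupplierBRaw> = {
  name: "supplier-b",
  toInternal(raw) {
    return {
      hotelId: String(raw.propertyId),
      priceCents: raw.rate.minorUnits,
      currency: raw.rate.currencyCode,
      supplier: "supplier-b",
    };
  },
};
```

Everything downstream (event listeners, batch fetchers, the cache) then deals only with `InternalPrice`, so adding a supplier means adding one adapter.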

Layer 2: Processing & Intelligence

- Azure Functions / AWS Lambda: Timer-triggered pipelines that run every 15 minutes for active routes

- Data Normalization: Handle heterogeneity across suppliers: different API response formats, timestamp conventions, and currency rounding

- Price Prediction Model: When cache is stale, use ML to predict current price while fresh data is fetched

Layer 3: Search & Caching

- Elasticsearch: Store hotel documents with cached prices, star ratings, amenities

- Redis: Hot cache for current pricing

- PostgreSQL: Source of truth for static metadata

- API Gateway: Route search requests to Elasticsearch, detail requests to price refresh service

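    // Example search endpoint (sketch: `elasticsearch`, `redis`, `priceRefreshQueue`,
    // `buildQuery`, and `isStale` are assumed to be defined elsewhere, and the
    // response shape assumes a client that returns `hits.hits` directly)
    const PAGE_SIZE = 20;

    async function searchHotels(criteria: SearchCriteria) {
      // 1. Query Elasticsearch with filters (stars, amenities), sorted by cached price
      const response = await elasticsearch.search({
        query: buildQuery(criteria),
        sort: [{ "cached_price": "asc" }],
        size: PAGE_SIZE,
        from: criteria.page * PAGE_SIZE
      });
      const hotels = response.hits.hits.map(hit => ({ id: hit._id, ...hit._source }));

      // 2. For each result, check Redis cache freshness
      const results = await Promise.all(hotels.map(async hotel => {
        const cacheKey = `price:hotel:${hotel.id}:...`;
        const raw = await redis.get(cacheKey);
        const cached = raw ? JSON.parse(raw) : null; // Redis returns a string or null

        if (!cached || isStale(cached)) {
          // 3. Trigger async refresh for stale prices (fire and forget)
          priceRefreshQueue.add({ hotelId: hotel.id, criteria });
        }

        return {
          ...hotel,
          price: cached?.price ?? null, // ?? keeps a legitimate price of 0
          priceConfidence: cached?.confidence ?? 'low',
          isRealTime: !!cached && !isStale(cached) // true only for a fresh cache hit
        };
      }));

      return results;
    }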

The Hard Lessons

1. Perfect Is the Enemy of Shipped

One commenter who built a metasearch platform admitted: “We went for max accuracy by hitting every API every time… The full result set was several MB for a big city, but that would just sit in memory for some minutes after the session and then get dumped.” They burned money and latency for perfection that users didn’t notice.

Lesson: Ship the smallest thing that works. You can always add real-time accuracy later; you can’t win back users you lost to slow load times.

2. Transparency Builds More Trust Than Accuracy

Admitting “this price is 2 hours old” feels like failure. But it’s more trustworthy than showing a stale price as if it’s real-time. In distributed systems where “don’t trust, verify” is gospel, methodological transparency is non-negotiable.

3. Incentive Design Is Engineering

How do you prevent suppliers from gaming your system by sending artificially low prices to get top sort position, then raising them on detail pages? You need anti-gaming measures:

- Price volatility tracking: Flag suppliers whose prices change abnormally between search and book

- Supplier reputation scoring: Downrank suppliers with high price discrepancy rates

- Random audits: Periodically fetch real-time prices for comparison and penalize dishonest suppliers

This isn’t a technical requirement, it’s an economic safeguard. Engineering without incentive design creates brittle systems.
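A sketch of the first two measures, assuming you record the price shown at search time and the price quoted at booking time for a sample of sessions. The 2% tolerance and the linear penalty are illustrative choices, and the names are hypothetical.

```typescript
interface PriceAudit {
  supplier: string;
  searchPriceCents: number;  // price shown in search results
  bookingPriceCents: number; // price quoted at booking time
}

// Share of audits per supplier where the booking price exceeded the
// search price by more than `tolerance` (relative increase).
function discrepancyRates(
  audits: PriceAudit[],
  tolerance: number = 0.02
): Map<string, number> {
  const totals = new Map<string, { audits: number; flagged: number }>();
  for (const audit of audits) {
    const entry = totals.get(audit.supplier) ?? { audits: 0, flagged: 0 };
    const increase =
      (audit.bookingPriceCents - audit.searchPriceCents) / audit.searchPriceCents;
    entry.audits += 1;
    if (increase > tolerance) entry.flagged += 1;
    totals.set(audit.supplier, entry);
  }
  const rates = new Map<string, number>();
  totals.forEach((t, supplier) => rates.set(supplier, t.flagged / t.audits));
  return rates;
}

// Ranking multiplier in [0.5, 1]: a supplier that always bait-prices
// keeps only half of its relevance score (assumed linear penalty).
function rankingMultiplier(discrepancyRate: number): number {
  const clamped = Math.min(1, Math.max(0, discrepancyRate));
  return 1 - 0.5 * clamped;
}
```

Only upward moves are flagged here, since a price that drops at booking time does not hurt the user.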

The Future: From “Good Enough” to “Actually Good”

The industry is moving toward event-driven architectures and predictive caching, but the fundamental tension remains: you can’t have perfect real-time data at scale without infinite money.

One possible next step is sharing cache-invalidation signals across platforms, in the spirit of federated learning. If multiple aggregators share price change signals (not the prices themselves, just the fact that prices changed), everyone gets better cache invalidation without sharing competitive data.

Another direction is blockchain-based inventory oracles. Suppliers would commit price updates to a shared ledger that aggregators subscribe to. This would remove the need for polling and provide cryptographic proof of when each update was published. But it requires industry-wide cooperation, which is unlikely in the cutthroat travel market.

Conclusion: Embrace the Deception

The uncomfortable truth is that “good enough” search is a feature, not a bug. Users don’t want to wait 30 seconds for perfect results, they want instant results that are close enough. The art is in making “close enough” feel trustworthy.

Your architecture should optimize for:

1. Perceived freshness over actual freshness

2. Stable sorting over accurate sorting (users hate when results reorder on refresh)

3. Transparent uncertainty over hidden inaccuracy

The platforms that win aren’t the ones with the most accurate data, they’re the ones that make users feel confident in their decisions despite the inaccuracy. That’s not just engineering, it’s product psychology.

So the next time you sort by price on a travel site, remember: you’re not seeing the cheapest hotel in Dubai. You’re seeing the cheapest hotel among the 500 hotels the platform bothered to price in the last hour. And that’s probably good enough.

References:

- Reddit: How do large hotel metasearch platforms handle sorting, filtering, and pricing caches at scale?

- MDPI: Decentralized Dynamic Heterogeneous Redundancy Architecture

- Medium: Project BotanicaX – Handling Heterogeneous API Data

- arXiv: Composite Reliability Score Framework