Claude Opus 4.5: The Architecture Shift That Kills Traditional Agent Engineering

Anthropic’s latest model introduces patterns that fundamentally change how AI systems interact with tools, and it just made most agent frameworks obsolete.

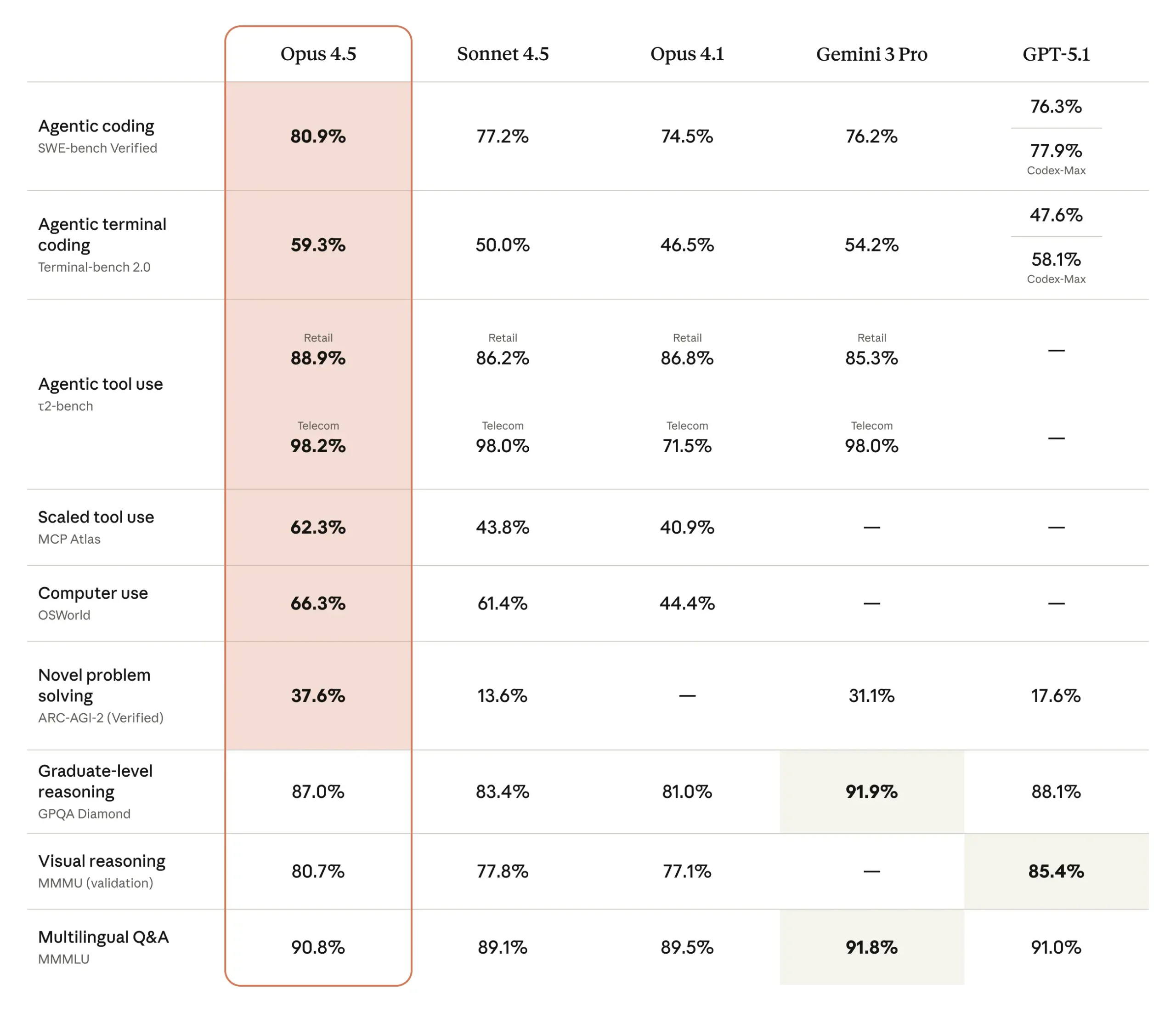

What really matters here isn’t the benchmark gains but the architectural patterns emerging from Opus 4.5’s tool integration capabilities. The model achieves state-of-the-art performance with 80.9% on SWE-bench Verified, yet the real story is how it gets there: through approaches to tool orchestration that collapse complexity and rethink context management.

Beyond Benchmarks: The Tool-Centric Architecture Revolution

Traditional AI agent architectures have long struggled with the same fundamental problems: context bloat, tool selection errors, and inefficient orchestration. These aren’t minor annoyances; they are architectural bottlenecks that have kept AI systems from scaling to real-world complexity.

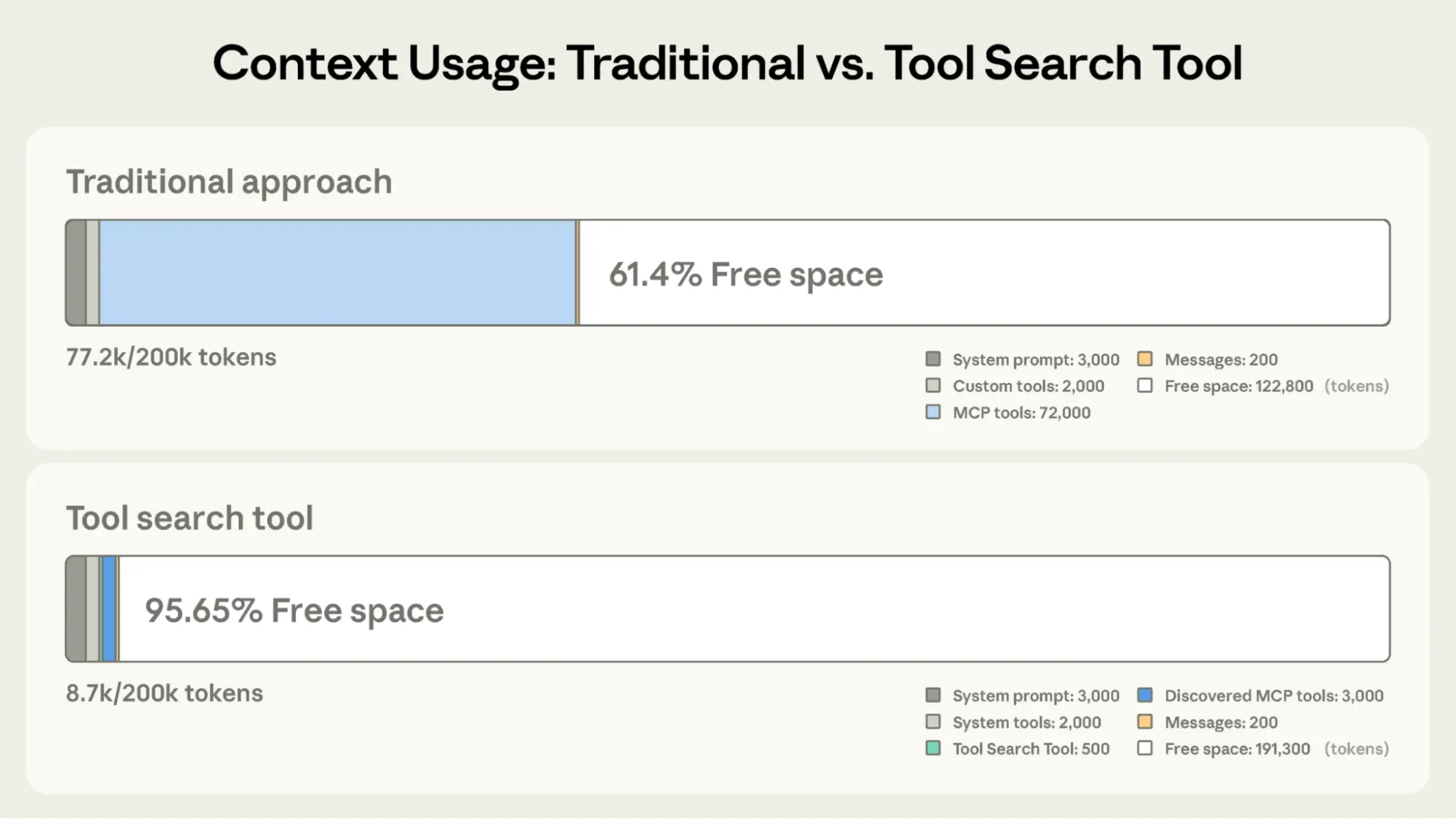

Consider the typical multi-server setup that developers report struggling with pre-Opus 4.5: GitHub (35 tools, ~26K tokens), Slack (11 tools, ~21K tokens), Sentry (5 tools, ~3K tokens), Grafana (5 tools, ~3K tokens), and Splunk (2 tools, ~2K tokens). That’s ~55K tokens consumed before any actual work begins, and that’s before you add Jira’s ~17K token overhead. At scale, Anthropic’s own teams saw tool definitions consuming 134K tokens, over half of some context windows gone before a single user request.
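To make the arithmetic explicit, here is a small sketch that tallies the per-server figures quoted above. The 200K-token context window is an assumption used only to express the overhead as a share; the text does not state a window size.

```python
# Per-server tool-definition overhead quoted in the text: (tool count, approx. tokens).
SERVER_OVERHEAD = {
    "GitHub": (35, 26_000),
    "Slack": (11, 21_000),
    "Sentry": (5, 3_000),
    "Grafana": (5, 3_000),
    "Splunk": (2, 2_000),
}

# Assumption: a 200K-token context window (not stated in the text).
CONTEXT_WINDOW = 200_000


def overhead_summary(servers: dict, window: int) -> dict:
    """Return total tool count, total token overhead, and share of the window used."""
    tools = sum(count for count, _ in servers.values())
    tokens = sum(tok for _, tok in servers.values())
    return {"tools": tools, "tokens": tokens, "share": tokens / window}


summary = overhead_summary(SERVER_OVERHEAD, CONTEXT_WINDOW)
print(f"{summary['tools']} tools, ~{summary['tokens']:,} tokens, "
      f"{summary['share']:.1%} of the window")

# Jira's tool count is not given in the text, so only its ~17K tokens are added.
with_jira = summary["tokens"] + 17_000
print(f"With Jira: ~{with_jira:,} tokens, {with_jira / CONTEXT_WINDOW:.1%} of the window")
```

Under that assumed window, the five servers alone take more than a quarter of the context before the first user message arrives.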

This architectural debt is exactly what Opus 4.5’s new tool integration patterns address head-on.

Three Patterns That Change Everything

Pattern 1: Dynamic Tool Discovery

The Tool Search Tool changes how agents access their capabilities. Instead of loading every possible tool upfront, Claude discovers tools on demand. This isn’t just a convenience feature; it’s a paradigm shift in agent architecture.

The numbers tell a compelling story:

- Traditional approach: All tool definitions are loaded upfront (~72K tokens for 50+ MCP tools), leaving less of the context window for actual work. Total context consumption: ~77K tokens before any work begins.

- With Tool Search Tool: Only the Tool Search Tool loaded upfront (~500 tokens), with tools discovered as needed (3-5 relevant tools, ~3K tokens). Total context consumption: ~8.7K tokens, preserving 95% of context window for actual reasoning.

This represents an 85% reduction in token usage while maintaining access to your full tool library. The accuracy impact is equally dramatic: in internal testing, accuracy on MCP evaluations rose from 79.5% to 88.1% with the Tool Search Tool enabled.
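As a rough check on these figures, the sketch below recomputes the saving from the two round totals quoted in the list. The 200K-token window is again an assumption, and the round totals are approximations, so the result should be read as a ballpark rather than a reproduction of the published number.

```python
# Approximate totals quoted in the text.
TRADITIONAL_TOTAL = 77_000  # all tool definitions loaded upfront
TOOL_SEARCH_TOTAL = 8_700   # search tool plus on-demand tools

# Assumption: a 200K-token context window (not stated in the text).
CONTEXT_WINDOW = 200_000


def reduction(before: int, after: int) -> float:
    """Fractional drop in tokens consumed."""
    return (before - after) / before


def free_share(used: int, window: int) -> float:
    """Fraction of the window still available after `used` tokens."""
    return 1 - used / window


saving = reduction(TRADITIONAL_TOTAL, TOOL_SEARCH_TOTAL)
free_before = free_share(TRADITIONAL_TOTAL, CONTEXT_WINDOW)
free_after = free_share(TOOL_SEARCH_TOTAL, CONTEXT_WINDOW)

print(f"token reduction: {saving:.1%}")
print(f"window left for work: {free_before:.1%} -> {free_after:.1%}")
```

With these inputs the saving lands in the same range as the quoted 85%, and the share of the window left free is consistent with the 95% figure; the exact values depend on the workload and on how many tools a given task ends up discovering.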

The implementation is straightforward:

```json
{
  "tools": [
    {"type": "tool_search_tool_regex_20251119", "name": "tool_search_tool_regex"},
    {
      "name": "github.createPullRequest",
      "description": "Create a pull request",
      "input_schema": {...},
      "defer_loading": true
    }
    // ... hundreds more deferred tools with defer_loading: true
  ]
}
```

This pattern essentially shifts tool management from a “load everything” approach to a “just-in-time” architecture, dramatically improving both performance and capability discovery.
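The idea behind regex-based discovery can be illustrated locally. The sketch below is not Anthropic's server-side implementation; it is a minimal simulation in which a hypothetical in-memory catalog (only `github.createPullRequest` comes from the article, the other entries are made up) is filtered by a pattern, so that only matching definitions would be expanded into context.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolDef:
    name: str
    description: str
    defer_loading: bool = True


# Hypothetical catalog; only github.createPullRequest appears in the article.
CATALOG = [
    ToolDef("github.createPullRequest", "Create a pull request"),
    ToolDef("github.listIssues", "List issues in a repository"),
    ToolDef("slack.postMessage", "Post a message to a channel"),
    ToolDef("sentry.getIssue", "Fetch details for an error issue"),
]


def search_tools(pattern: str, catalog: list, limit: int = 5) -> list:
    """Return up to `limit` deferred tools whose name or description matches the regex."""
    rx = re.compile(pattern, re.IGNORECASE)
    hits = [
        tool for tool in catalog
        if tool.defer_loading and (rx.search(tool.name) or rx.search(tool.description))
    ]
    return hits[:limit]


loaded = search_tools(r"pull request", CATALOG)
print([tool.name for tool in loaded])
```

The design point is that the catalog can grow to hundreds of entries while the cost paid per request stays proportional to the handful of tools that match.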

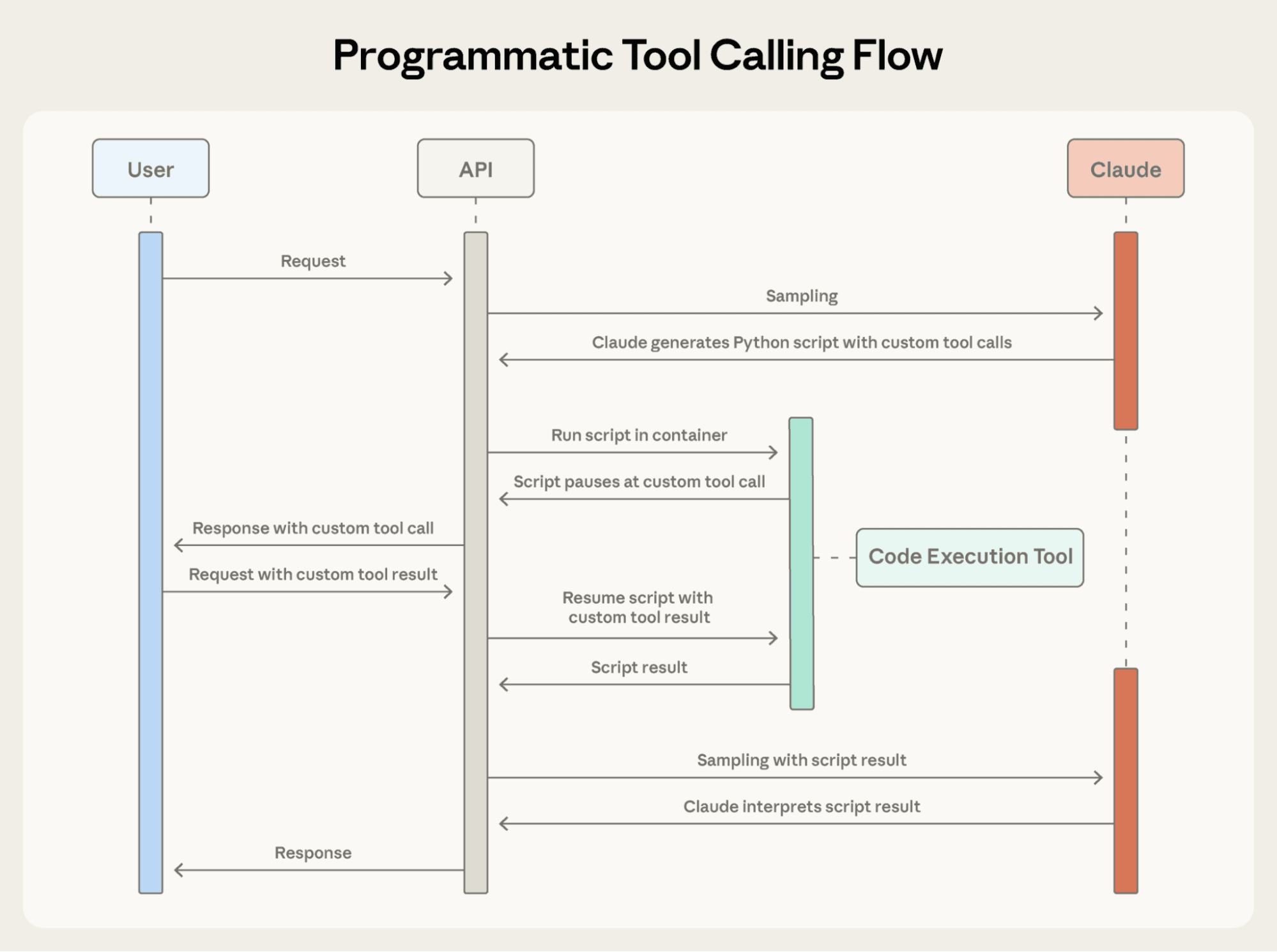

Pattern 2: Programmatic Orchestration

Programmatic Tool Calling addresses a fundamental inefficiency of traditional tool calling: each invocation requires a full model inference pass, and intermediate results clutter the context window. Consider a budget compliance workflow: the traditional approach pulls roughly 200KB of raw expense data into context, whereas Programmatic Tool Calling returns only about 1KB of processed results.

The Python orchestration code reveals the architecture:

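```python
import asyncio
import json

# Runs inside an async context where the tools (get_team_members,
# get_budget_by_level, get_expenses) are exposed as async functions.
team = await get_team_members("engineering")

# Fetch budgets for each unique level in parallel
levels = list(set(m["level"] for m in team))
budget_results = await asyncio.gather(*[
    get_budget_by_level(level) for level in levels
])

# Build a level -> budget lookup from the parallel results
budgets = dict(zip(levels, budget_results))

# Fetch every member's expenses in parallel.
# Assumption: get_expenses and its (id, quarter) arguments are illustrative names.
expenses = await asyncio.gather(*[
    get_expenses(m["id"], "Q3") for m in team
])

# Flag members whose total spend exceeds their travel limit
exceeded = []
for member, exp in zip(team, expenses):
    budget = budgets[member["level"]]
    total = sum(e["amount"] for e in exp)
    if total > budget["travel_limit"]:
        exceeded.append({
            "name": member["name"],
            "spent": total,
            "limit": budget["travel_limit"]
        })

# Only this summary is returned to the model's context
print(json.dumps(exceeded))
```

The efficiency gains are staggering: average token usage dropped from 43,588 to 27,297 tokens (a 37% reduction) on complex research tasks. Each eliminated round-trip API call saves hundreds of milliseconds, and accuracy improves because explicit orchestration reduces natural language parsing errors.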

What makes this revolutionary isn’t just the efficiency, it’s that Claude can now write and execute complex orchestration logic, turning what was previously a fragile chain of API calls into robust, debuggable code execution.
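To see why this keeps context small, the following self-contained sketch runs a budget check of this kind against stub tools and compares the size of the raw intermediate data with the size of the summary that would be handed back to the model. All three tool functions, the employee records, and the amounts are hypothetical stand-ins, not part of any real API.

```python
import asyncio
import json


# Hypothetical stub tools; a real deployment would call external systems.
async def get_team_members(department: str) -> list:
    return [
        {"id": "e1", "name": "Ada", "level": "senior"},
        {"id": "e2", "name": "Lin", "level": "junior"},
    ]


async def get_expenses(employee_id: str, quarter: str) -> list:
    # 50 line items per employee stand in for bulky raw data.
    amount = 120 if employee_id == "e1" else 30
    return [{"item": f"trip-{i}", "amount": amount} for i in range(50)]


async def get_budget_by_level(level: str) -> dict:
    return {"travel_limit": 5000 if level == "senior" else 2000}


async def find_over_budget(department: str, quarter: str) -> tuple:
    """Return (JSON summary, size in bytes of the raw data that stayed out of context)."""
    team = await get_team_members(department)
    expenses = await asyncio.gather(*(get_expenses(m["id"], quarter) for m in team))
    levels = sorted({m["level"] for m in team})
    limits = await asyncio.gather(*(get_budget_by_level(lv) for lv in levels))
    budgets = dict(zip(levels, limits))

    exceeded = []
    for member, items in zip(team, expenses):
        total = sum(e["amount"] for e in items)
        limit = budgets[member["level"]]["travel_limit"]
        if total > limit:
            exceeded.append({"name": member["name"], "spent": total, "limit": limit})

    raw_bytes = len(json.dumps(expenses))
    return json.dumps(exceeded), raw_bytes


summary, raw_bytes = asyncio.run(find_over_budget("engineering", "Q3"))
print(f"raw intermediate data: {raw_bytes} bytes; returned to model: {len(summary)} bytes")
print(summary)
```

Even with only two employees, the line items dwarf the summary; the gap widens as the team and the number of expense records grow.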

Pattern 3: Example-Driven Tool Usage

Tool Use Examples solve the critical gap between JSON schema validity and actual tool usability. Instead of defining what’s structurally valid, examples demonstrate what’s behaviorally correct.

Consider a support ticket API with complex nested structures:

```json
{
  "priority": "critical",
  "labels": ["bug", "authentication", "production"],
  "reporter": {
    "id": "USR-12345",
    "name": "Jane Smith",
    "contact": {
      "email": "jane@acme.com",
      "phone": "+1-555-0123"
    }
  },
  "due_date": "2024-11-06",
  "escalation": {
    "level": 2,
    "notify_manager": true,
    "sla_hours": 4
  }
}
```

From just three examples, Claude learns format conventions, nested structure patterns, and optional parameter correlations. Internal testing showed improvement from 72% to 90% accuracy on complex parameter handling.
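A sketch of how three examples might sit next to a schema follows. The tool name `create_ticket`, the `title` field, the example values, and the `input_examples` key are assumptions made for illustration and should be checked against the current API documentation. The small checker is a lightweight sanity test, not full JSON Schema validation; its purpose is to catch examples that contradict the schema before they are shipped to the model.

```python
# Hypothetical tool definition; `input_examples` is an assumed field name.
create_ticket_tool = {
    "name": "create_ticket",
    "description": "Create a support ticket",
    "input_schema": {
        "type": "object",
        "properties": {
            "title": {"type": "string"},
            "priority": {"type": "string", "enum": ["low", "medium", "high", "critical"]},
            "labels": {"type": "array", "items": {"type": "string"}},
            "due_date": {"type": "string"},
        },
        "required": ["title"],
    },
    "input_examples": [
        # Full example: shows label style and the YYYY-MM-DD date convention.
        {"title": "Login page returns 500 error", "priority": "critical",
         "labels": ["bug", "authentication", "production"], "due_date": "2024-11-06"},
        # Partial example: optional fields can be omitted.
        {"title": "Add dark mode support", "labels": ["feature-request", "ui"]},
        # Minimal example: only the required field.
        {"title": "Update API documentation"},
    ],
}


def check_examples(tool: dict) -> list:
    """Report examples that miss required fields, use unknown fields, or break an enum."""
    schema = tool["input_schema"]
    props = schema["properties"]
    problems = []
    for i, example in enumerate(tool.get("input_examples", [])):
        for field in schema.get("required", []):
            if field not in example:
                problems.append(f"example {i}: missing required field '{field}'")
        for field, value in example.items():
            if field not in props:
                problems.append(f"example {i}: unknown field '{field}'")
            elif "enum" in props[field] and value not in props[field]["enum"]:
                problems.append(f"example {i}: '{value}' not allowed for '{field}'")
    return problems


print(check_examples(create_ticket_tool) or "all examples consistent with schema")
```

Ranging the examples from fully populated to minimal is one way to convey which parameters tend to appear together and which can be left out.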

The Enterprise Architecture Implications

These patterns change how we architect production AI systems. Microsoft’s Foundry platform integration signals enterprise readiness: Opus 4.5 is paired with new developer capabilities designed to help teams build more effective and efficient agentic systems, including the effort parameter, which controls how computation is allocated across thinking, tool calls, and responses.

Consider the impact on agent design patterns identified in industry analysis:

Modular agents suddenly become viable at scale when tools can be discovered dynamically rather than statically linked. Hierarchical agents gain efficiency when sub-agents can be orchestrated programmatically. Swarm agents become practical when intermediate results don’t pollute context.

Even the development workflow changes. As developer Simon Willison observed in his hands-on testing, “Thinking blocks from previous assistant turns are preserved in model context by default”; previous Anthropic models apparently discarded them. This continuity is crucial for long-running agent sessions.

Performance Meets Pragmatism: Real-World Impact

The architectural improvements translate directly to business impact. Early adopters report 50% to 75% reductions in both tool calling errors and build/lint errors. Complex tasks that took previous models two hours now take thirty minutes. These aren’t marginal gains; they are two- to fourfold improvements in reliability and throughput.

But perhaps more telling is what developers are building. Cursor reports that “Claude Opus 4.5 is a notable improvement over the prior Claude models inside Cursor, with improved pricing and intelligence on difficult coding tasks.” Warp’s Planning Mode saw a 15% improvement over Sonnet 4.5 performance, “a meaningful gain that becomes especially clear when using long-horizon, autonomous tasks.”

As abZ Global’s analysis notes, “Opus 4.5 is also tuned for ‘agents that use tools’, scripts or systems that let Claude call APIs, operate browsers, or orchestrate multiple sub-agents. You can throw larger repos and more ambiguous tasks at it, and expect fewer retries. It behaves more like a senior engineer who first writes a plan, then executes.”

The Developer Experience Revolution

The effort parameter introduces a new dimension of control over the tradeoff between token cost and capability. At medium effort, Opus 4.5 matches Sonnet 4.5’s best SWE-bench Verified score while using 76% fewer output tokens. At highest effort, it exceeds Sonnet 4.5 performance by 4.3 percentage points while using 48% fewer tokens.
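The tradeoff can be turned into a quick estimate. The sketch below applies the percentages quoted above to a hypothetical baseline of 10,000 Sonnet 4.5 output tokens; the baseline and the dictionary layout are illustrative assumptions, and no API call is made.

```python
# Output-token usage relative to Sonnet 4.5, derived from the figures in the text:
# medium effort uses 76% fewer tokens, highest effort uses 48% fewer.
EFFORT_PROFILES = {
    "medium": {"token_ratio": 0.24, "swe_bench_delta_pts": 0.0},
    "high": {"token_ratio": 0.52, "swe_bench_delta_pts": 4.3},
}


def estimated_output_tokens(baseline_tokens: int, effort: str) -> int:
    """Scale a Sonnet 4.5 baseline by the quoted ratio for the given effort level."""
    return round(baseline_tokens * EFFORT_PROFILES[effort]["token_ratio"])


baseline = 10_000  # hypothetical Sonnet 4.5 output tokens for one task
for effort, profile in EFFORT_PROFILES.items():
    tokens = estimated_output_tokens(baseline, effort)
    print(f"{effort}: ~{tokens:,} output tokens, "
          f"+{profile['swe_bench_delta_pts']} pts vs Sonnet 4.5 on SWE-bench Verified")
```

Read this way, moving from medium to highest effort roughly doubles output tokens in exchange for the 4.3-point gain, which is the kind of comparison a team would want to make per workload.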

This isn’t just about cost reduction, it’s about giving developers precise control over the intelligence-efficiency tradeoff. As AWS documentation highlights, “For production deployments, Amazon Bedrock AgentCore provides monitoring and observability through CloudWatch integration, tracking token usage in real-time, useful when tuning the effort parameter.”

Security Implications and Limitations

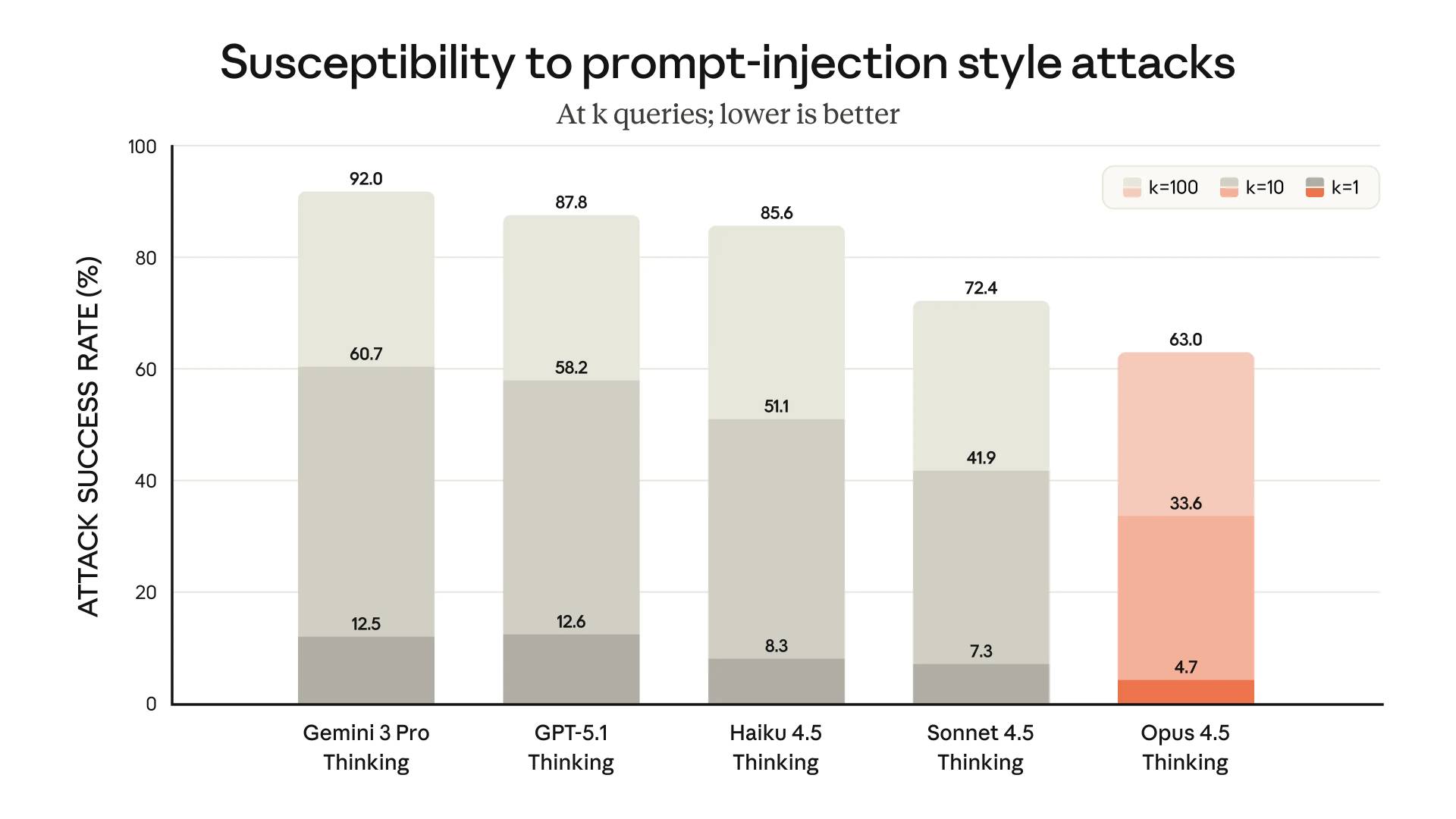

Anthropic touts significant safety improvements, with prompt injection attack success rates dropping to 4.7% at first attempt, 33.6% after 10 attempts, and 63.0% after 100 attempts, all industry-leading numbers. But as Simon Willison cautions, “single attempts at prompt injection still work 1/20 times, and if an attacker can try ten different attacks that success rate goes up to 1/3! We still need to design our applications under the assumption that a suitably motivated attacker will be able to find a way to trick the models.”

Designing for security first is crucial because the new tool integration patterns inherently expand the attack surface. Dynamic tool discovery can put hundreds of APIs within the model’s reach, and programmatic tool calling introduces code execution paths that must be carefully sandboxed.
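To put the attack-success figures quoted above in perspective, the sketch below compares them with a simple model in which every attempt succeeds independently at the single-attempt rate. The independence assumption is mine, not Anthropic's, and serves only as a reference curve.

```python
# Attack success rates quoted in the text, keyed by number of attempts.
OBSERVED = {1: 0.047, 10: 0.336, 100: 0.630}


def independent_success(p_single: float, attempts: int) -> float:
    """Probability of at least one success if attempts were independent."""
    return 1 - (1 - p_single) ** attempts


for attempts, observed in OBSERVED.items():
    modeled = independent_success(OBSERVED[1], attempts)
    print(f"{attempts:>3} attempts: observed {observed:.1%}, "
          f"independent-attempt model {modeled:.1%}")
```

The quoted rates grow more slowly than the independent model would predict, yet they still climb steeply with the number of attempts, which supports the advice to treat persistent attackers as likely to succeed eventually.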

The Architectural Shift: What Changes Now

This evolution represents more than better tool calling; it’s a fundamental shift in how we architect AI systems. We’re moving from centralized, monolithic agent frameworks to composable, dynamic systems where:

- Tool discovery happens just in time rather than through upfront loading

- Orchestration logic moves from natural language to actual code execution

- Context management becomes intelligent and selective rather than brute-force

- Agent design patterns must adapt to these new capabilities

The implication for software architects is clear: the era of hard-coded tool integrations is ending. Systems designed around static tool registries, fixed agent patterns, and manual orchestration are becoming obsolete. The future belongs to architectures that can dynamically discover capabilities, programmatically orchestrate complex workflows, and intelligently manage context.

As enterprise platforms like Amazon Bedrock and Microsoft Foundry integrate these capabilities, the pressure will mount on every AI framework to adopt similar patterns. The systems that can’t adapt to this new tool-integration paradigm will find themselves unable to compete on efficiency, capability, or cost.

The revolution isn’t coming, it’s already here, and it’s making yesterday’s agent architectures look like legacy systems before they’ve even had time to mature.