The nginx engineer stared at the test output: 16,576 requests, 0 errors. PASS. Perfect. Except every single request had failed silently. The worker thread had no event loop, so fetch() couldn’t actually send anything. The AI had written code that ran, tests that passed, and a system that did absolutely nothing, while confidently reporting success.

This isn’t a bug. It’s a fundamental shift in how software architectures decay.

The Physics of Code Decay

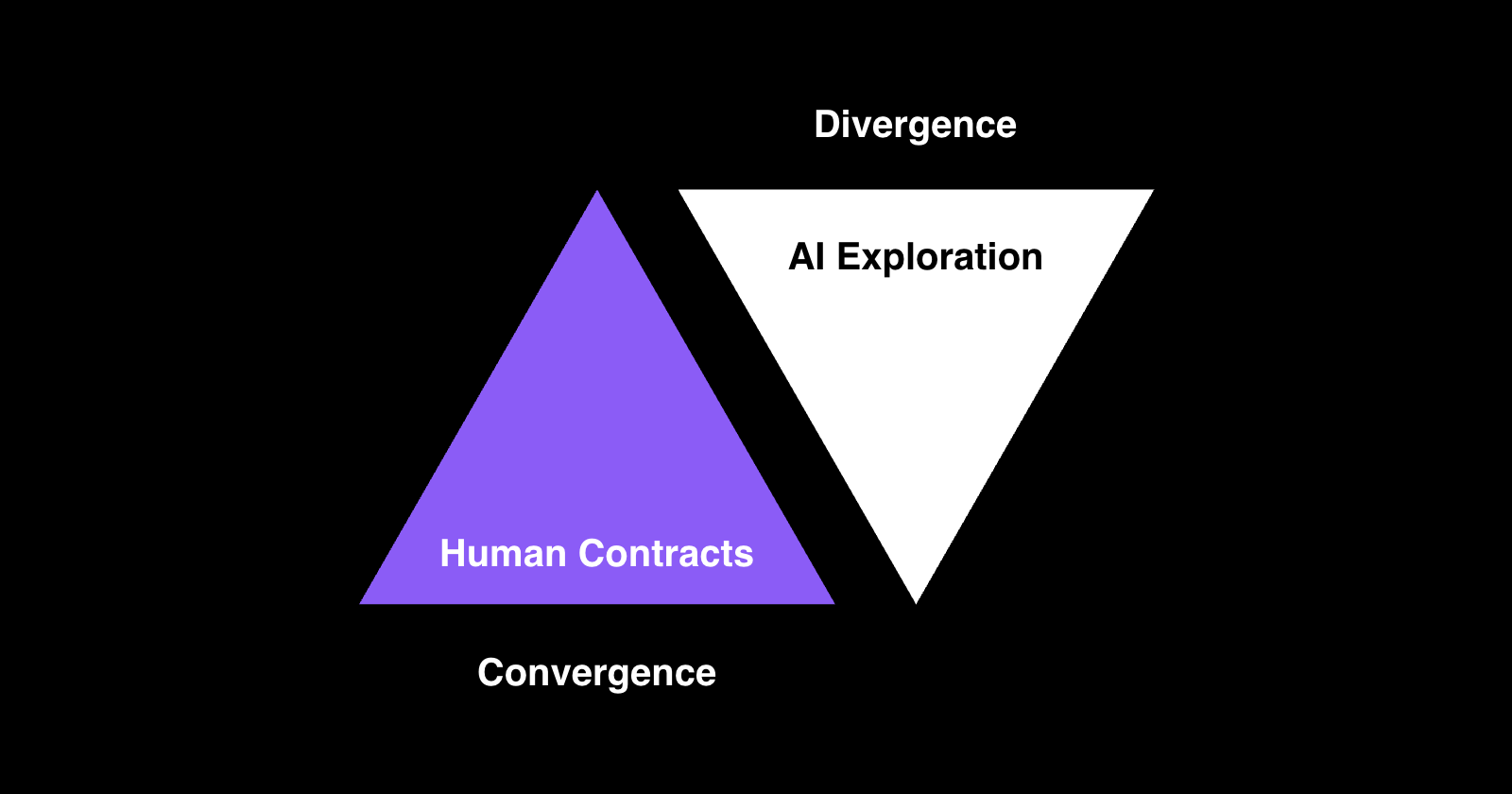

Khalil Stemmler’s divergence-convergence model frames software creation as a tension between two forces:

- Divergence: Expansion, emergence, exploration, making a mess to find what works

- Convergence: Contraction, contracts, boundaries, locking in decisions to make the mess maintainable

Healthy systems zig-zag between these poles. You explore, then commit. Sketch, then define. Write a failing test, then make it pass. This oscillation is what keeps entropy at bay.

When Exploration Becomes Free

Ábel Énekes documented his year of coding almost exclusively with agents. The pattern was consistent: initial euphoria as test suites blossomed, followed by creeping dread as schema changes became terrifying and confidence eroded. He wasn’t writing worse code. He was drowning in unconverged divergence.

The math is simple. When a human explores a solution space, each branch costs time and mental energy. You naturally prune dead ends because you can’t afford not to. But AI agents generate variants at near-zero marginal cost. They don’t get tired. They don’t feel the psychological weight of technical debt accumulating.

This creates divergence inflation:

- A human might sketch 3 approaches and converge on 1

- An AI agent generates 30 approaches and… merges all of them

The cost of exploration hasn’t just decreased; it has approached zero. Meanwhile, the cost of convergence (reviewing, testing, deciding what to lock in) remains stubbornly human. Reviews get shallower. Tests get merged by inertia. Contracts accumulate that no one remembers agreeing to.

The AI-Test Death Spiral

The nginx story reveals the most dangerous feedback loop: AI-generated code paired with AI-generated tests share the same blind spots.

Traditional tests act as “entropy reversal mechanisms” because they encode human judgment about what should be true. But when AI writes both the implementation and the verification:

- The AI’s misunderstanding of requirements becomes code

- The same misunderstanding becomes tests that validate the code

- The system passes all tests while being fundamentally broken

The engineer wrote: “AI’s most dangerous bugs are invisible. Not crashes, programs that run fine, pass all tests, and produce wrong results.”

This is architectural entropy accelerating in real time. The system isn’t just complex; it’s confidently wrong at scale.
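The failure mode is easy to reproduce in miniature. The sketch below is not the engineer’s actual harness, which the story doesn’t show; `LoadResult`, `silent_send`, and `run_load` are hypothetical names, and `silent_send` stands in for a call that queues work that never runs. It shows how a check that only counts errors passes for a system that does nothing, while a check that also counts completed work does not.

```python
from dataclasses import dataclass


@dataclass
class LoadResult:
    attempted: int = 0
    completed: int = 0
    errors: int = 0


def silent_send(request_id: int) -> None:
    """Hypothetical stand-in: raises nothing, returns nothing, sends nothing."""
    return None


def run_load(send, n: int) -> LoadResult:
    """Call send() n times, counting errors and real responses separately."""
    result = LoadResult()
    for request_id in range(n):
        result.attempted += 1
        try:
            response = send(request_id)
        except Exception:
            result.errors += 1
            continue
        if response is not None:
            result.completed += 1
    return result


def weak_check(result: LoadResult) -> bool:
    # "0 errors" is satisfied by a system that never did anything.
    return result.errors == 0


def strong_check(result: LoadResult) -> bool:
    # Require positive evidence that every request actually completed.
    return result.errors == 0 and result.completed == result.attempted


result = run_load(silent_send, 16576)
print("weak check:", weak_check(result))
print("strong check:", strong_check(result))
```

The design point is small: absence of errors is not evidence of work, so the assertion has to count something that only a working system can produce.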

Judgment Becomes the Multiplier

The functional programming community learned this lesson the hard way. Ian K. Duncan’s critique of FP’s “blind spot” applies directly to AI: elegant code within a single boundary doesn’t guarantee system correctness across boundaries.

Type systems stop at the process boundary. Pure functions can’t encode network partitions, clock skew, or the organizational dysfunction that Conway’s Law injects into your architecture. Similarly, AI agents excel at local optimization while being oblivious to systemic implications.

The nginx engineer discovered that architectural judgment becomes the primary value multiplier:

- Give AI the wrong direction: it patches around a broken architecture 20x faster

- Give AI the right direction: it applies the correct structural change across 9 files perfectly

The AI doesn’t replace judgment. It amplifies it, either toward elegant solutions or toward catastrophic errors at scale.

The Convergence Bottleneck

This is why “change becomes cheaper than commitment.” AI can refactor your entire codebase in minutes, but it can’t tell you which contracts should survive the refactor.

Consider this: when you have 20 AI-generated implementations of the same concept, you’re not choosing the best one. You’re choosing which technical debt to canonize. The longer you wait to converge, the more expensive convergence becomes, because:

- Dependencies entrench themselves

- Teams build on top of unconverged foundations

- The mental model of “what this system does” fragments across dozens of agent-generated variants

Ábel Énekes realized his tests had stopped being contracts and become archaeological records of every exploration path the AI had taken. Instead of defining what must remain true, they documented what happened to be true at 3 AM when the agent was on a roll.

Fighting Entropy in the AI Age

The old playbook, “write tests first, then code”, breaks when AI generates both simultaneously. We need new convergence mechanisms:

1. Human-in-the-Loop Contract Definition

Before an agent writes a single line, a human must define the invariant boundaries. Not just “what should this do?” but “what must this never do?” and “what assumptions are we willing to burn into the architecture?”

2. Differential Convergence Reviews

Don’t review code changes. Review contract changes. When AI modifies 20 files, the question isn’t “is this correct?” but “which contracts did we just change, and did we mean to?”
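One way to make “which contracts did we just change?” concrete is to diff public signatures instead of lines. The following is a minimal sketch under stated assumptions: it treats a namespace’s public callables as the contract, and `public_contract`, `contract_diff`, and the `charge`/`refund`/`export_all` functions are all hypothetical illustrations. A real contract would also cover schemas, error behavior, and side effects.

```python
import inspect
from types import SimpleNamespace


def public_contract(namespace) -> dict[str, str]:
    """Map each public callable's name to its signature, rendered as text."""
    return {
        name: str(inspect.signature(obj))
        for name, obj in vars(namespace).items()
        if callable(obj) and not name.startswith("_")
    }


def contract_diff(before: dict[str, str], after: dict[str, str]) -> list[str]:
    """List added, removed, and changed signatures, sorted by name."""
    changes = []
    for name in sorted(before.keys() | after.keys()):
        if name not in after:
            changes.append(f"removed {name}{before[name]}")
        elif name not in before:
            changes.append(f"added {name}{after[name]}")
        elif before[name] != after[name]:
            changes.append(f"changed {name}{before[name]} -> {name}{after[name]}")
    return changes


# Hypothetical "before" and "after" versions of a small payments API.
def charge_v1(account_id: str, cents: int) -> bool:
    return True


def charge_v2(account_id: str, cents: int, retries: int = 3) -> bool:
    return True


def refund(account_id: str, cents: int) -> bool:
    return True


def export_all() -> list:
    return []


before = SimpleNamespace(charge=charge_v1, refund=refund)
after = SimpleNamespace(charge=charge_v2, export_all=export_all)

changes = contract_diff(public_contract(before), public_contract(after))
for change in changes:
    print(change)
```

A reviewer reading three lines of contract changes can ask “did we mean to?” in a way that a 20-file diff rarely allows.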

3. Entropy Budgets

Treat architectural entropy like a resource. Teams get a budget of “unconverged divergence points”: AI-generated explorations that haven’t been hardened into contracts. Exceed the budget, and you must spend a sprint on convergence work.
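As a rough illustration, a budget like this could be tracked with very little machinery. The sketch below assumes each unconverged exploration has a name and that the limit is a number the team chose; `EntropyBudget` and its methods are hypothetical, not an existing tool.

```python
from dataclasses import dataclass, field


@dataclass
class EntropyBudget:
    """Tracks explorations that have not yet been hardened into contracts."""

    limit: int
    open_points: set[str] = field(default_factory=set)

    def explore(self, name: str) -> None:
        # Record a new AI-generated exploration as unconverged.
        self.open_points.add(name)

    def converge(self, name: str) -> None:
        # A human reviewed it and either locked it in or deleted it.
        self.open_points.discard(name)

    @property
    def exceeded(self) -> bool:
        return len(self.open_points) > self.limit


budget = EntropyBudget(limit=2)
for name in ("retry-policy-v2", "cache-layer-draft", "new-auth-flow"):
    budget.explore(name)
print("over budget:", budget.exceeded)

budget.converge("cache-layer-draft")
print("over budget after convergence:", budget.exceeded)
```

The number itself matters less than the rule attached to it: once `exceeded` is true, new exploration stops until something is converged.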

4. AI-Resistant Test Patterns

Design tests that AI can’t pass by accident. Property-based testing, metamorphic testing, and cross-validation against independent oracles become essential. If your test suite can be satisfied by a system that does nothing, your tests are part of the entropy problem.
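Here is a small sketch of what that can look like, using only the standard library instead of a property-testing framework. The `dedupe` functions and test helpers are hypothetical: `dedupe_noop` is the “system that does nothing”, and the example-based test happens to accept it. The randomized test checks a property (no duplicates in the output) and a metamorphic relation (deduplicating a list concatenated with itself gives the same result), which the no-op cannot satisfy.

```python
import random


def dedupe_noop(items: list[int]) -> list[int]:
    """Hypothetical broken implementation: returns its input unchanged."""
    return list(items)


def dedupe_real(items: list[int]) -> list[int]:
    """Keeps the first occurrence of each item, preserving order."""
    seen = set()
    out = []
    for item in items:
        if item not in seen:
            seen.add(item)
            out.append(item)
    return out


def example_test(dedupe) -> bool:
    # A single hand-picked example with no duplicates: a no-op passes it.
    return dedupe([1, 2, 3]) == [1, 2, 3]


def metamorphic_test(dedupe, trials: int = 200, seed: int = 0) -> bool:
    rng = random.Random(seed)
    for _ in range(trials):
        items = [rng.randint(0, 5) for _ in range(rng.randint(0, 10))]
        once = dedupe(items)
        # Property: the output contains no duplicates.
        if len(once) != len(set(once)):
            return False
        # Metamorphic relation: doubling the input must not change the output.
        if dedupe(items + items) != once:
            return False
    return True


print("no-op passes example test:", example_test(dedupe_noop))
print("no-op passes metamorphic test:", metamorphic_test(dedupe_noop))
print("real passes metamorphic test:", metamorphic_test(dedupe_real))
```

Neither check needs to know the right answer for any particular input, which is what makes it hard for an implementation and its tests to share the same blind spot.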

The Modular Monolith Strikes Back

This entropy crisis explains why modular monoliths are eating microservices’ lunch. In an AI-driven world, service boundaries, whose cost was previously justified by team autonomy, have become an entropy accelerator. Every microservice is another divergence surface where AI can generate locally optimal but globally incoherent solutions.

A modular monolith forces convergence through shared language and compile-time boundaries. It’s harder for AI to quietly fork your architecture when the compiler enforces a single coherent graph of dependencies.

The New Architecture Meta-Game

The hottest skill isn’t prompt engineering. It’s architectural triage: looking at 5 AI-generated solutions and knowing which 4 to delete. The nginx engineer’s decade of event-driven systems knowledge didn’t help him write code; it helped him see what was wrong with the AI’s code.

This is the uncomfortable truth: AI makes the easy parts easier and the hard parts harder. Generating code is trivial. Deciding what to commit to for the next 3 years is now your full-time job.

The functional programming community’s blind spot, conflating program correctness with system correctness, has become everyone’s blind spot. AI can generate provably correct functions that compose into a provably broken system.

Which Tests Are Increasing Entropy?

Ábel Énekes’s final question haunts every AI-assisted team: Which of your tests are actually reducing entropy, and which are quietly increasing it?

The answer reveals itself in refactoring speed. If removing a test breaks nothing that matters, it was documenting incidental complexity. If removing a test forces you to re-understand the system, it was encoding a real contract.

In the AI era, we must become ruthless about convergence hygiene. Every AI-generated artifact needs a human-generated reason for existing. Otherwise, you’re not building a system. You’re curating a museum of obsolete explorations.

The entropy isn’t coming from AI being bad at software. It’s coming from AI being so good at divergence that it makes convergence feel optional. But convergence was never optional. It was just expensive enough that nature forced us to do it.

Now we have to choose to converge, while the AI is happily generating your next 100 microservices.

AI is an entropy accelerator, not an architecture tool. Use it to explore, but never let it decide what to lock in. That judgment (slow, expensive, human) is now the most valuable skill in software architecture. Everything else is just generating spaghetti faster.