Agentic AI as Colleagues: Redefining Data Science Roles in 2025

A recent BCG/MIT study finds 76% of leaders now anthropomorphize agentic AI as coworkers, sparking debate about how AI autonomy impacts data scientist job functions, team dynamics, and role evolution.

The line between human and machine just got dangerously blurry. According to a new BCG and MIT Sloan study, 76% of executives now describe agentic AI as a “coworker” rather than a tool. When three-quarters of corporate leadership starts anthropomorphizing software, you know something fundamental has shifted in how we think about work, collaboration, and what it means to be a data scientist in 2025.

This isn’t your grandfather’s automation. Agentic AI systems autonomously plan tasks, execute multi-step workflows, and adapt based on outcomes. They’re not waiting for prompts; they’re taking initiative: scheduling meetings, generating reports, triaging data, and coordinating across systems. The subtle but seismic shift from “using AI” to “working with AI” is forcing data teams to confront some uncomfortable questions about their future relevance.

The Data Scientist’s Identity Crisis

Traditional data science roles were built around human intelligence applied to data problems. As one experienced data scientist explained on Reddit, data science is “about applying the scientific method using data”: making observations, finding key questions, generating hypotheses, validating them, and communicating results. But what happens when AI agents can conduct that entire scientific process autonomously?

The reality on the ground is already shifting. Many senior data scientists report moving “from writing features to architecting and supervising autonomous systems”, as the BCG/MIT research notes. The tools haven’t changed (SQL, Python, and “everyone’s favorite, excel/sheets” remain staples), but their application has transformed entirely.

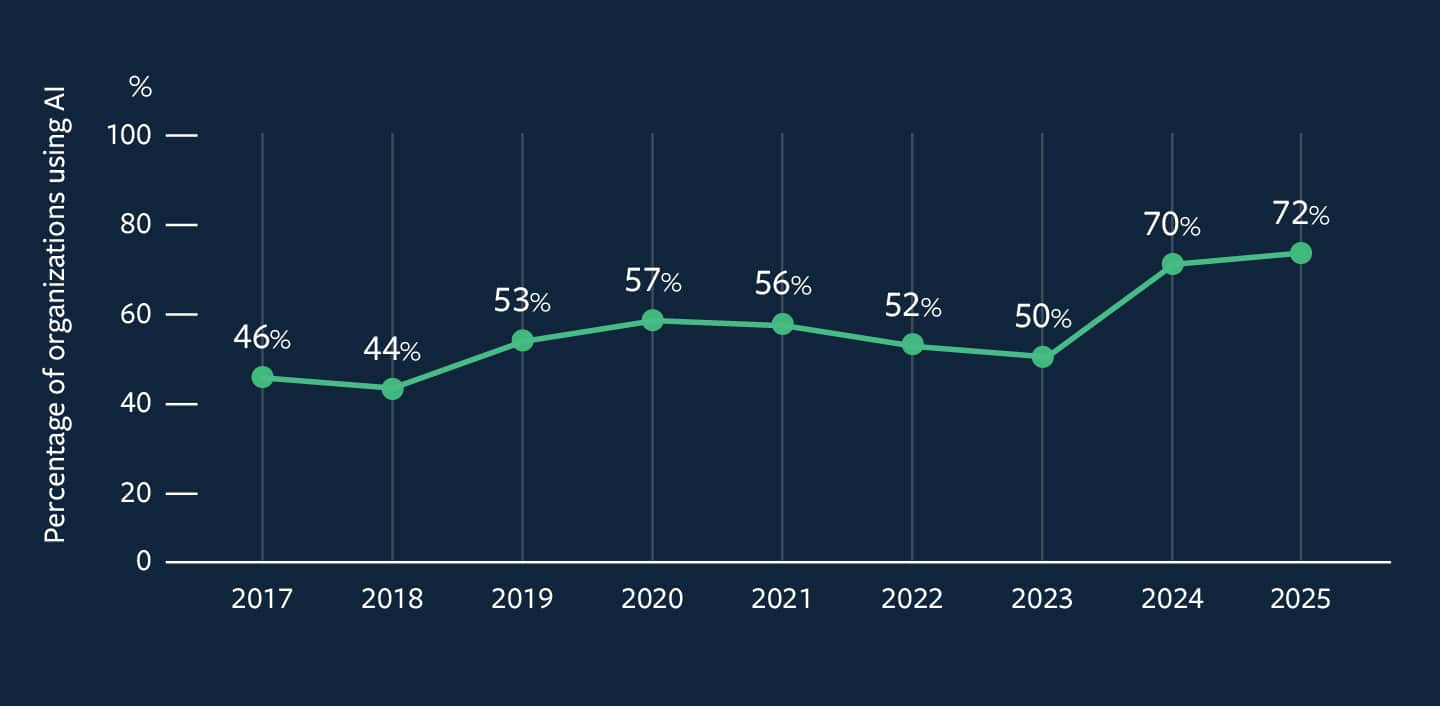

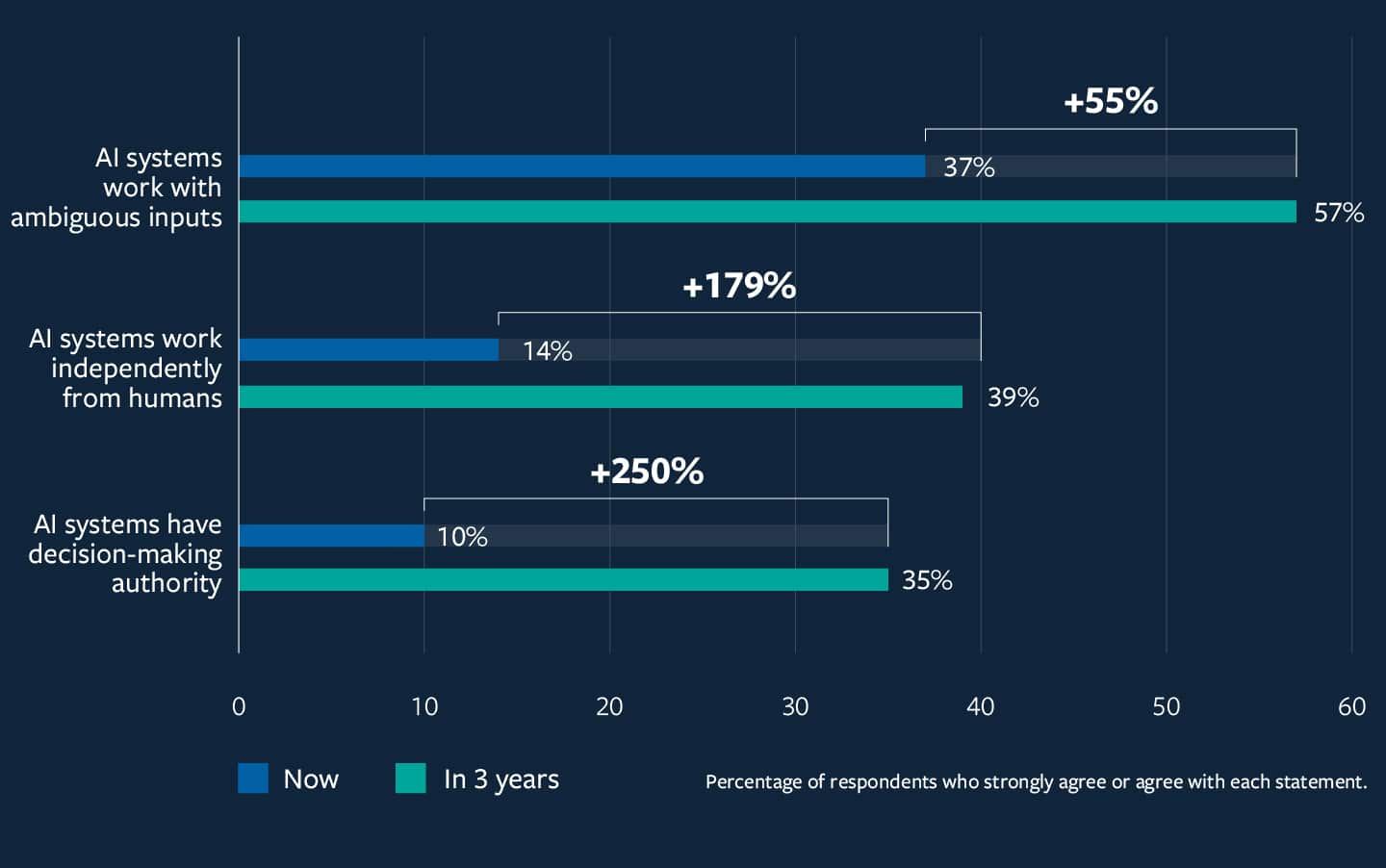

The adoption numbers tell a startling story. While traditional AI took eight years to reach 72% organizational adoption and generative AI hit 70% in just three years, agentic AI has already achieved 35% adoption in merely two years, with another 44% of organizations planning deployment soon. This acceleration suggests we’re not dealing with incremental change but a fundamental restructuring of how analytical work gets done.
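As a rough back-of-the-envelope check, the figures above can be converted into an average adoption pace. The sketch below is only illustrative: it assumes adoption grew linearly over each period (real adoption curves rarely do), and the names `adoption` and `points_per_year` are made up for this example rather than taken from the study.

```python
# Figures quoted above: (share of organizations adopting in %, years elapsed).
adoption = {
    "traditional AI": (72, 8),
    "generative AI": (70, 3),
    "agentic AI": (35, 2),
}


def points_per_year(share_pct: float, years: float) -> float:
    """Average pace in percentage points per year (linear simplification)."""
    return share_pct / years


pace = {name: points_per_year(*figures) for name, figures in adoption.items()}

for name, value in pace.items():
    print(f"{name}: {value:.1f} points/year")

# Current adopters plus the additional 44% that report planning deployment.
agentic_with_planned = 35 + 44
print(f"agentic AI, adopted or planned: {agentic_with_planned}%")
```

On this crude measure, agentic AI's average pace is roughly double that of traditional AI, though still behind generative AI's. If the planned deployments materialize, the combined share would reach 79%.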

Four Uncomfortable Leadership Tensions

1. The Flexibility Paradox: Scalability Versus Adaptability

Human data scientists are maximally flexible: they can switch between statistical modeling, business analysis, and stakeholder communication with ease. Tools scale predictably but lack adaptability. Agentic AI sits awkwardly in between: more adaptable than traditional tools but currently less flexible than human experts.

The immediate challenge for data leaders becomes designing processes that can “oscillate between efficiency and adaptability without breaking”, as the researchers found. Do you optimize your data pipelines for maximum AI efficiency or preserve human-like adaptability for edge cases and system failures?

2. The Investment Dilemma: Experience Versus Expediency

Traditional tools require large upfront costs but deliver predictable returns. Human data scientists represent ongoing variable expenses that appreciate with experience. Agentic AI defies both models: it combines substantial initial development costs with ongoing expenses for fine-tuning and data updates, all while potentially appreciating through learning or depreciating through model drift.

As the report notes, Chevron’s chief data officer describes needing to remain “adaptive to emerging tools and updates” because “model updates arrive every couple of months.” This creates a moving-target problem: invest too early and risk technological obsolescence, wait too long and miss strategic advantages.

3. The Control Conundrum: Supervision Versus Autonomy

Data science has always involved judgment calls about which models to trust, when to question outputs, and how to interpret ambiguous results. But agentic AI forces a new question: “How do you supervise something designed to work autonomously?”

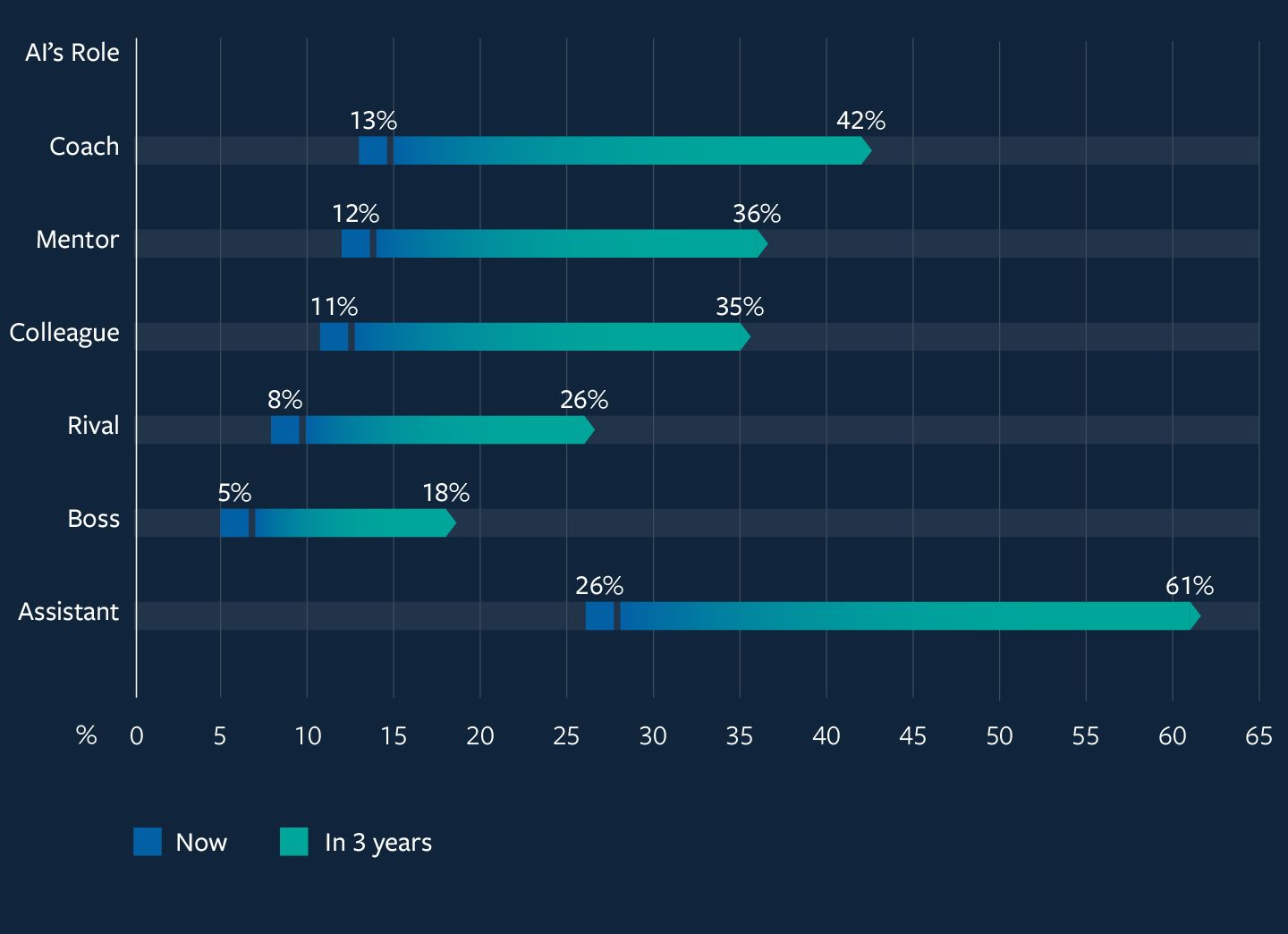

The research shows organizations expect AI’s decision-making authority to grow by 250% in the next three years. Yet data scientists increasingly find themselves in supervisory roles, validating AI-generated insights rather than producing them directly. As one banking data scientist noted, their current role “focuses more on using data and analysis to drive long-term product/business strategy”. In effect, they are becoming orchestrators rather than executors.

4. The Scope Question: Retrofit Versus Reengineer

Perhaps the most pressing question for data teams: Do you incrementally improve existing workflows with AI assistance, or completely reengineer processes around AI capabilities?

Goodwill Industries provides a telling example. What started as using AI to sort textiles revealed opportunities for “complete reengineering of the supply chain”, questioning “the very structure of a decades-old, human-centric workflow.” The same pattern applies to data science: Do we use AI to make our current dashboards faster, or do we rebuild our entire analytical infrastructure around autonomous insights generation?

The New Data Science Job Description

As agentic AI reshapes workflows, the data scientist’s role is evolving from hands-on analyst to AI orchestra conductor. The BCG/MIT research reveals several concrete shifts already underway:

- 45% of organizations with extensive agentic AI adoption expect reductions in middle management layers, including data team leads who traditionally bridged technical work and business strategy.

- 43% anticipate hiring more generalists in place of specialists, suggesting demand is shifting from deep statistical experts to professionals who can span domains and coordinate across AI systems.

- 29% expect fewer entry-level roles, raising concerns about how future data scientists will gain the foundational experience currently built through routine analytical work.

The implications are stark: the traditional data science career ladder is collapsing. Entry-level positions that once taught fundamentals through hands-on analysis may disappear, while middle management roles that coordinated work become redundant as AI agents handle coordination autonomously.

Human Judgment as Competitive Advantage

Despite these shifts, the research reveals an unexpected silver lining: 95% of individuals at leading agentic AI organizations report AI has positively impacted their job satisfaction. This suggests the most successful data scientists aren’t fighting the trend but leaning into their uniquely human capabilities.

As organizations deploy both “human-in-the-loop and human-out-of-the-loop systems depending on risk levels”, as described in the BCG/MIT findings, data scientists increasingly focus on higher-judgment responsibilities: exception handling, ethical oversight, strategic interpretation, and managing the human-AI collaboration itself.
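One way to picture the risk-based split between the two oversight modes is a simple routing rule. The following is a minimal sketch under stated assumptions, not a description of any system in the BCG/MIT report: the `risk_score` scale, the `REVIEW_THRESHOLD` cut-off, and the example actions are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Oversight(Enum):
    HUMAN_IN_THE_LOOP = "a person reviews the action before it runs"
    HUMAN_OUT_OF_THE_LOOP = "the agent acts alone and the action is logged"


@dataclass
class AgentAction:
    description: str
    risk_score: float  # hypothetical rating: 0.0 (harmless) to 1.0 (severe)


# Hypothetical cut-off; a real team would derive this from its own risk policy.
REVIEW_THRESHOLD = 0.5


def route(action: AgentAction, threshold: float = REVIEW_THRESHOLD) -> Oversight:
    """Send higher-risk actions to a human reviewer, let the rest run unattended."""
    if not 0.0 <= action.risk_score <= 1.0:
        raise ValueError("risk_score must be between 0.0 and 1.0")
    if action.risk_score >= threshold:
        return Oversight.HUMAN_IN_THE_LOOP
    return Oversight.HUMAN_OUT_OF_THE_LOOP


# Made-up example actions for illustration only.
actions = [
    AgentAction("refresh weekly sales dashboard", 0.1),
    AgentAction("change a production credit-limit model", 0.9),
]
for action in actions:
    print(f"{action.description}: {route(action).name}")
```

The hard part in practice is not the routing rule but deciding who assigns the risk score and how the threshold is reviewed over time, which is exactly the kind of judgment work described above.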

The most valuable data scientists will be those who can ask better questions rather than simply finding better answers, who understand not just the technical implementation but the business context, ethical implications, and strategic consequences of AI-driven insights.

The Governance Gap No One’s Talking About

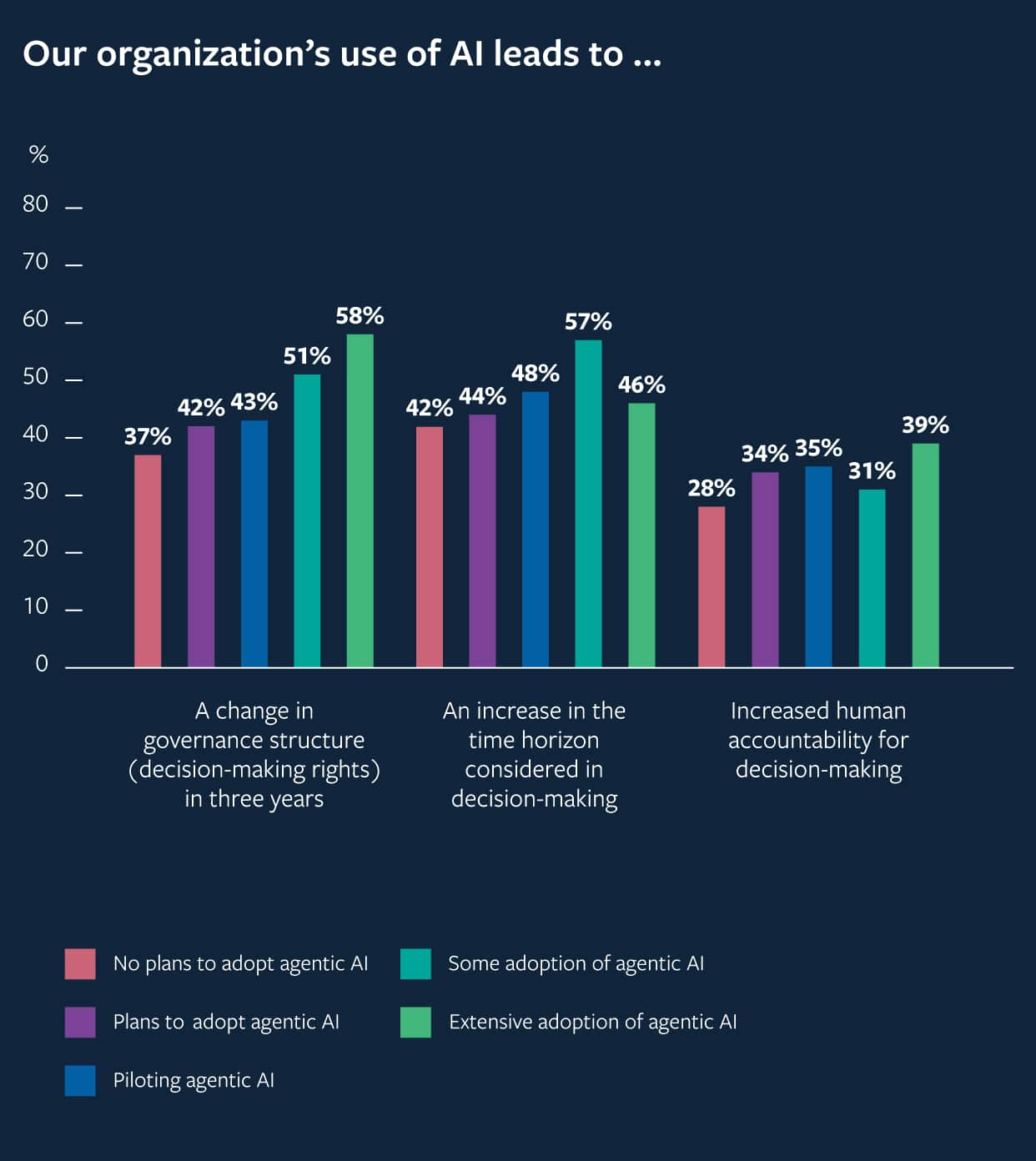

While adoption accelerates, most organizations remain dangerously unprepared for the management implications. The report notes that “most companies have yet to redesign workflows, governance structures, or talent plans to support autonomous agents.” An EY survey from September 2025 found only 14% of organizations have reached full-scale adoption of agentic AI.

This creates a risky gap: organizations are deploying autonomous systems faster than they’re developing the governance frameworks to manage them. As the researchers warn, agents “can still hallucinate, mis-route data, or misinterpret goals”, making human oversight essential. The Deloitte incident where AI-generated content contained fabricated citations serves as a cautionary tale about what happens when automation amplifies errors without proper guardrails.
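A guardrail against fabricated citations can be as simple as refusing to publish any reference that cannot be matched to a vetted source. The sketch below is an assumption-laden illustration: the function name `unverified_citations`, the exact-title matching rule, and the placeholder titles are invented here and do not describe how any real organization handles this.

```python
def unverified_citations(cited: list[str], vetted_sources: set[str]) -> list[str]:
    """Return cited titles that are absent from the vetted set (case-insensitive)."""
    known = {source.strip().lower() for source in vetted_sources}
    return [title for title in cited if title.strip().lower() not in known]


# Placeholder titles, made up for this example.
vetted = {"Annual Labour Report 2024", "Internal Audit Memo 17"}
draft_citations = ["annual labour report 2024", "Journal of Imaginary Results"]

flagged = unverified_citations(draft_citations, vetted)
if flagged:
    print("Hold for human review; unverified citations:", flagged)
```

Exact-title matching is deliberately strict. It will flag legitimate sources that are merely phrased differently, which is the safer failure mode when the alternative is publishing an invented reference.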

Data Science’s Evolution, Not Extinction

The most provocative insight from the research might be this: 58% of agentic AI leaders expect governance structure changes within three years, with AI systems’ decision-making authority growing 250%. This isn’t a minor adjustment; it’s wholesale organizational transformation.

Data scientists who thrive in this new environment will need to master skills that sound more like management consulting than technical work: AI orchestration, prompt engineering, model auditing, and judgment-driven oversight. They’ll spend less time writing SQL queries and more time designing systems where AI colleagues handle the querying while humans focus on interpreting results and making strategic recommendations.

As one data scientist noted about their evolving role, it’s shifting “from making observations > finding key questions > generating hypothesis > validating hypothesis > drawing conclusions > communicating results” to supervising AI systems that perform these steps autonomously. The scientific method remains, but who executes it is changing dramatically.

The Uneven Global Adoption Pattern

Interestingly, the anthropomorphization of AI varies by region. While 76% of global executives view agentic AI as coworkers, that number rises to 82% among African executives, according to regional data from the study. This suggests cultural factors may shape how organizations integrate AI into their operational fabric.

The same research shows African respondents expect AI agents to handle 47% of their workload today and 60% within three years, among the most optimistic projections globally. This regional variation highlights that the AI colleague phenomenon isn’t uniform, and local implementation strategies matter enormously.

Where This Leaves Data Professionals

The great irony of the “AI as colleague” movement is that it’s making data scientists more human, not less. As routine analytical work gets automated, the aspects that remain distinctly human become more valuable: creativity, ethical judgment, strategic thinking, and the ability to ask questions nobody thought to ask.

The data scientists who will thrive in this new environment aren’t those who can out-compute the AI, but those who can leverage AI colleagues to amplify their own uniquely human strengths. They’re not being replaced by robots; they’re becoming robot wranglers, orchestra conductors, and strategic partners in a hybrid human-machine workforce.

As the BCG/MIT researchers conclude, “The challenge of agentic AI is organizational, not technological.” The organizations, and data scientists, who succeed will be those who focus less on the technology itself and more on the human systems that surround it. Because in the end, the most valuable colleagues, artificial or otherwise, are the ones who make us better at what we do best.